Tips and best practices to upgrade the SDK while avoiding risk of data loss

If you wish to get started and install the coherence Unity SDK, please refer to the page.

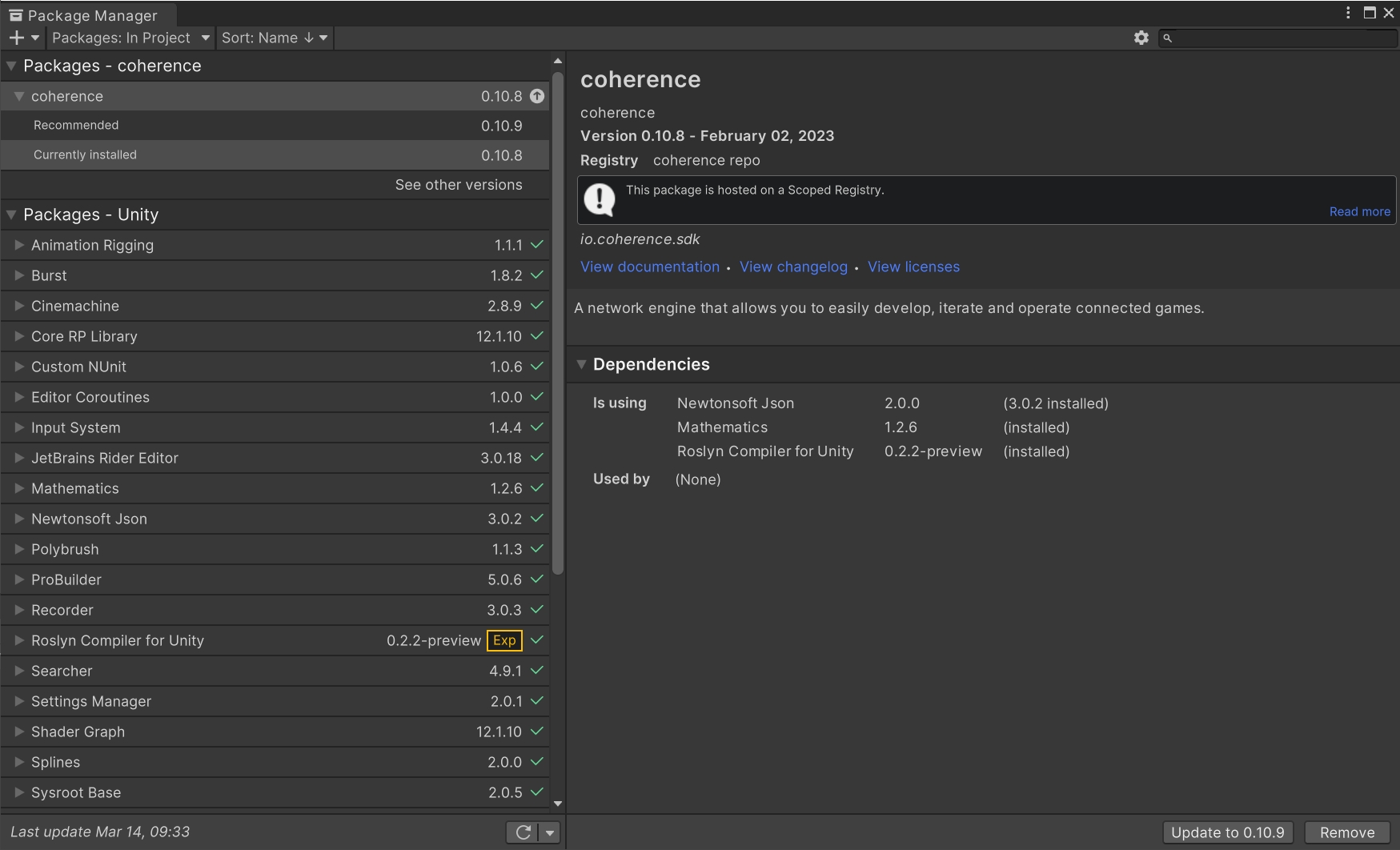

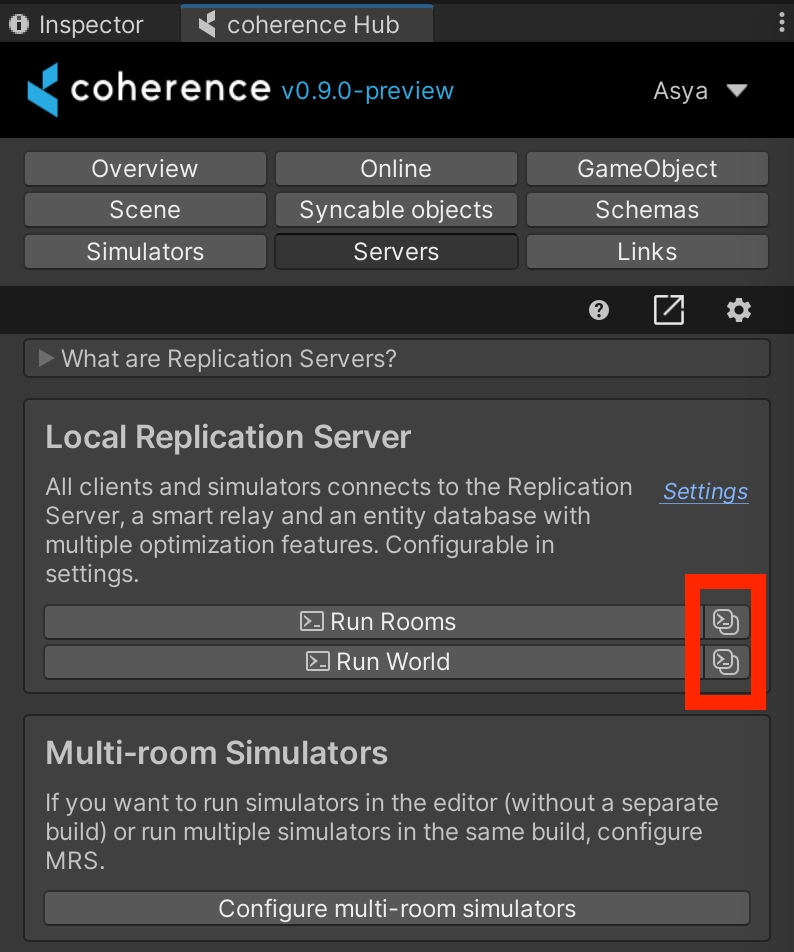

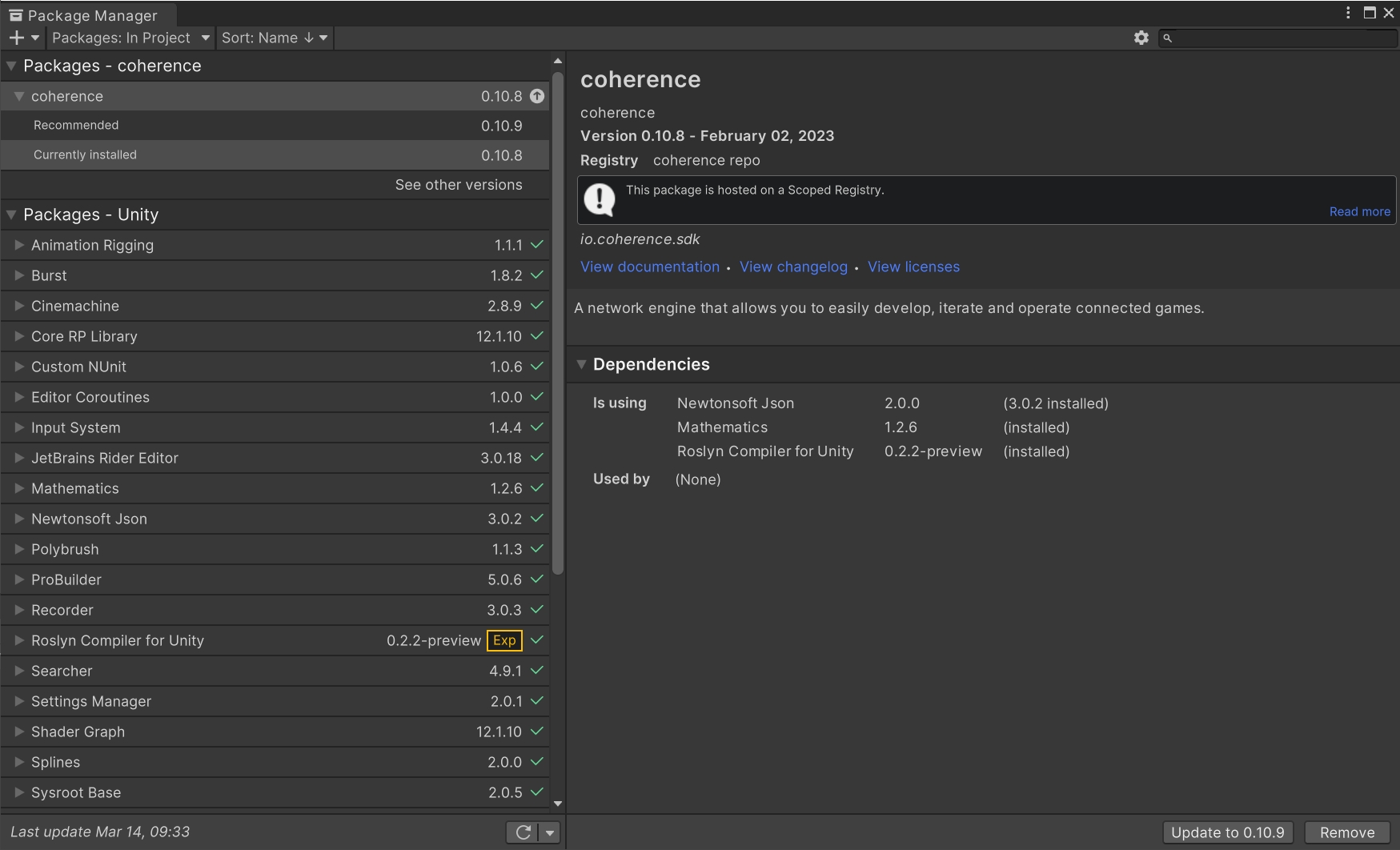

Open the Unity Package Manager under Window > Package Manager and check the Packages - coherence section. It will state the currently installed version and whether any recommended updates are available:

When upgrading coherence, please do it one major or minor version at a time (considering semantic versioning, so MAJOR.MINOR.PATCH).

For example, if your project is on 0.7 and you would like to upgrade to 1.0, upgrade from 0.7 to 0.8, then from 0.8 to 0.9, and so on. Always back up your project before upgrading, to avoid any risk of losing your data.

Versions prior to 0.9 are considered legacy. We don't provide a detailed upgrade guide for those versions.

Refer to the specific upgrade guides provided as subsections of this article.

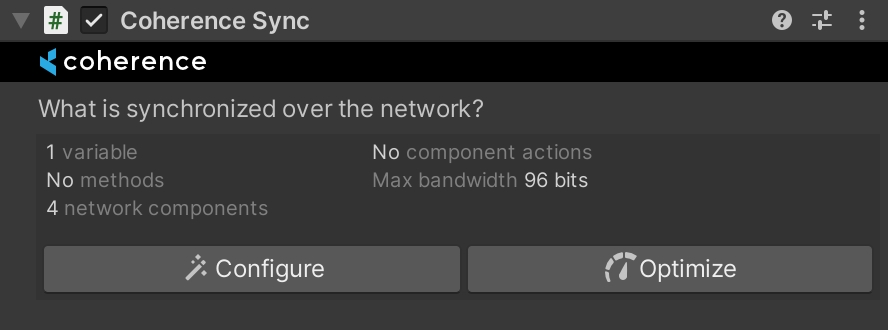

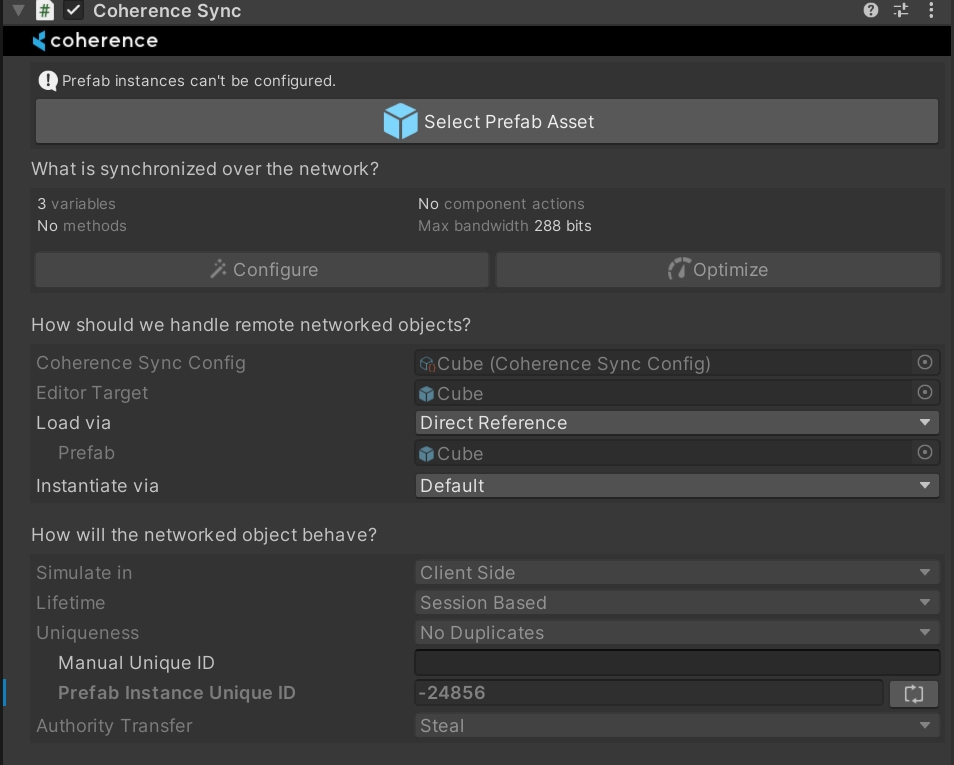

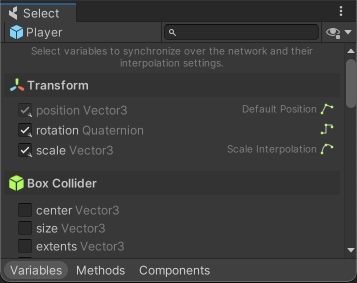

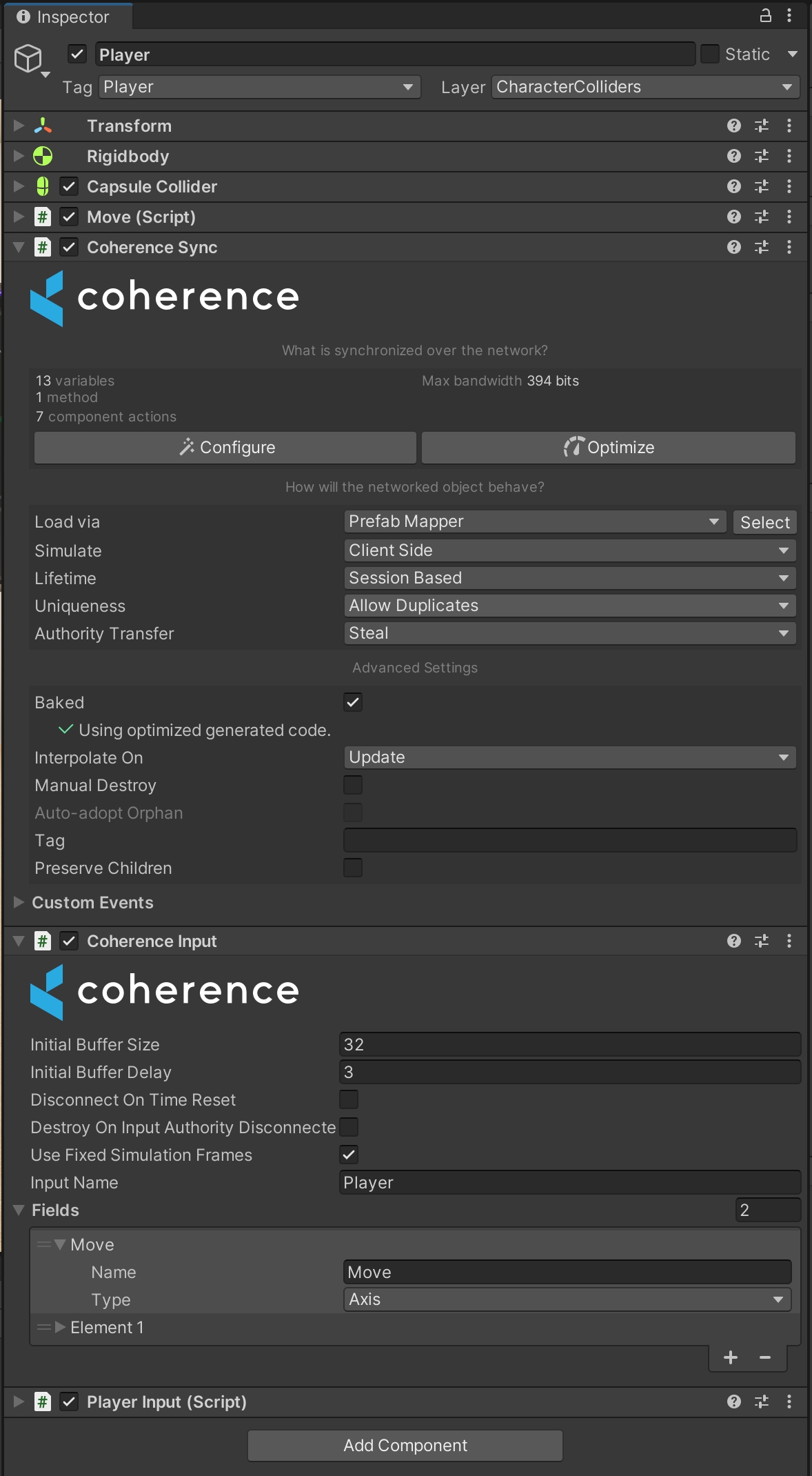

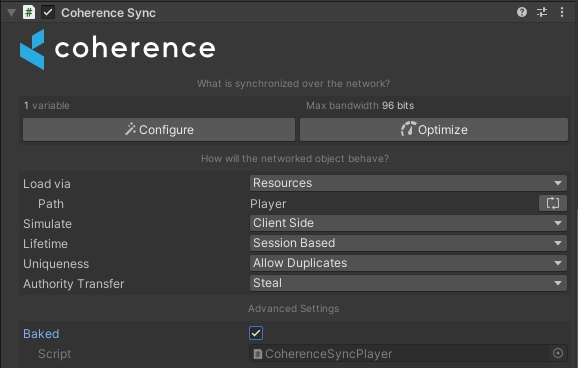

Defines a network entity and what data to sync from the GameObject. Anything that needs to be synchronized over the network can use a CoherenceSync component. You can select data from your GameObject hierarchy that you'd like to sync across the network.

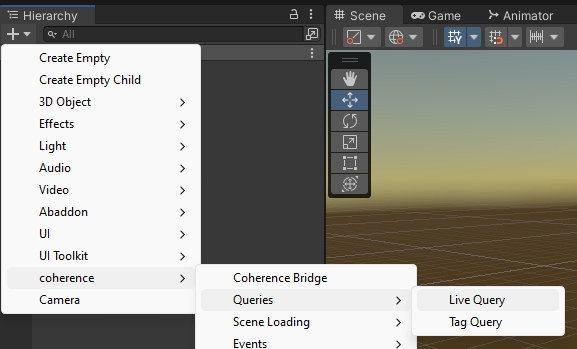

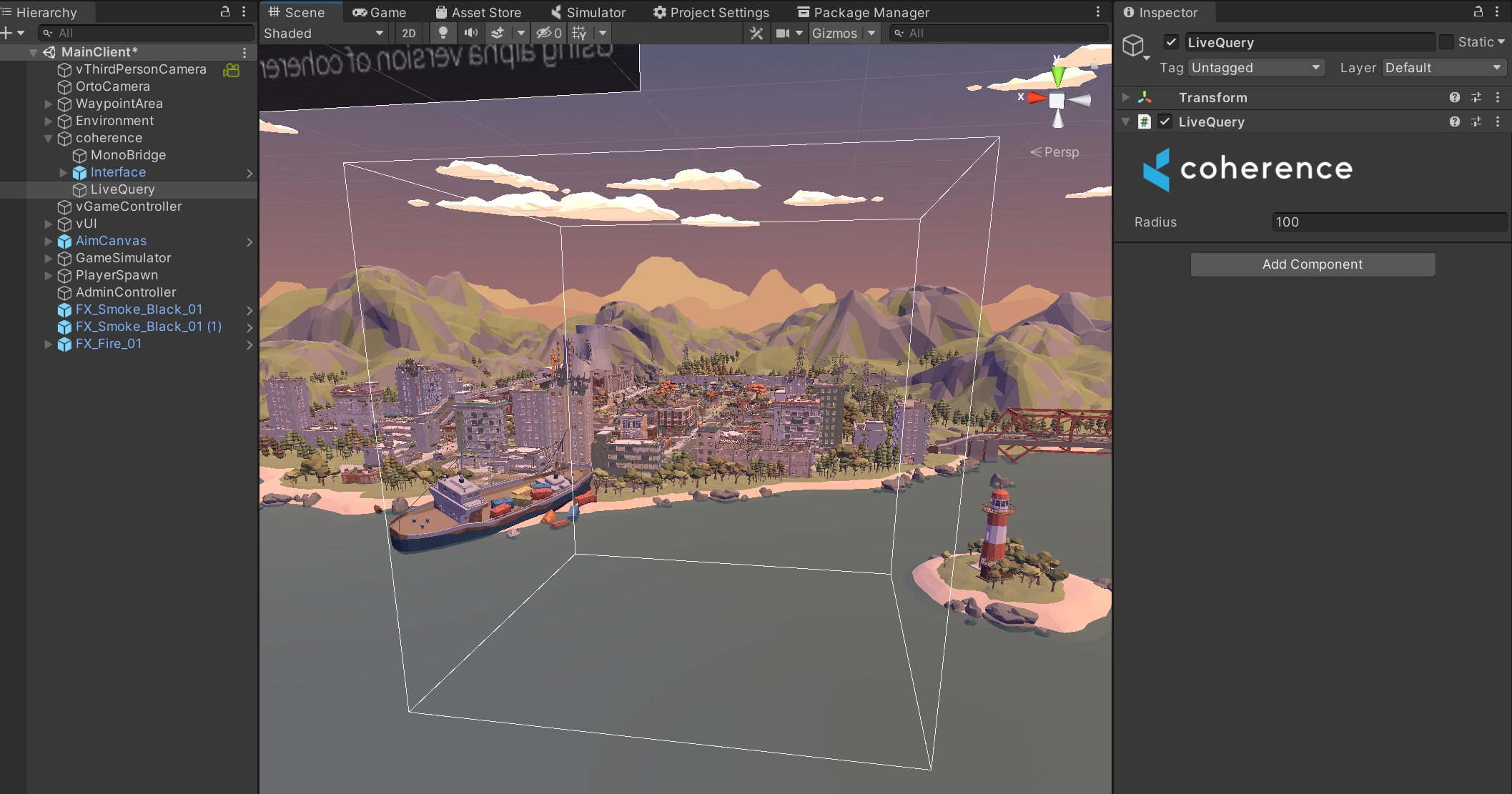

Queries an area of interest, so that you can read/write across the network on the desired location. In our Starter Project, the LiveQuery position is static with an extent large enough to cover the entire playable level. If the World was very large and potentially set over multiple Simulators, the LiveQuery could be attached to the playable character or camera.

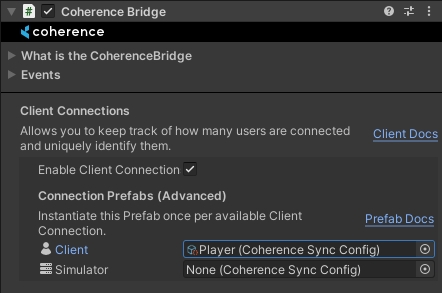

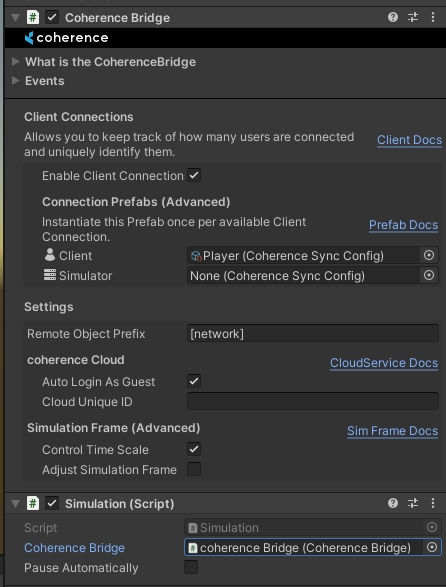

Handles the connection between the coherence transport layer and the Unity scene.

Enables a Simulator to take control of the state authority of a Client's CoherenceSync, while retaining input authority.

This component is added by CoherenceSync on .

Refer to the section at any time to find solutions to issues found during or after upgrading.

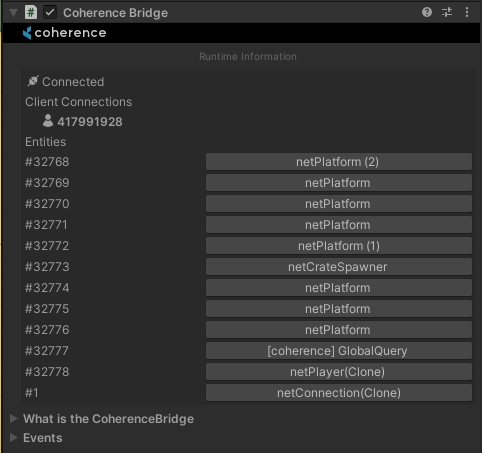

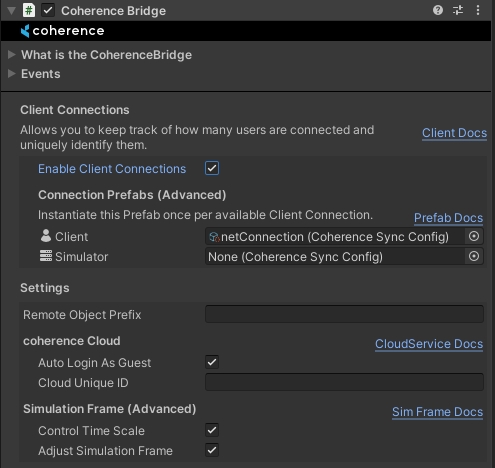

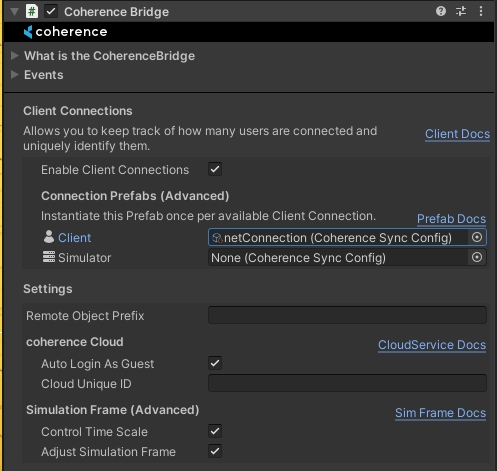

The Bridge establishes a connection between your scene and the coherence Replication Server. It makes sure all networked entities stay in sync.

When you place a GameObject in your scene, the Bridge detects it and makes sure all the synchronization can be done via the CoherenceSync component.

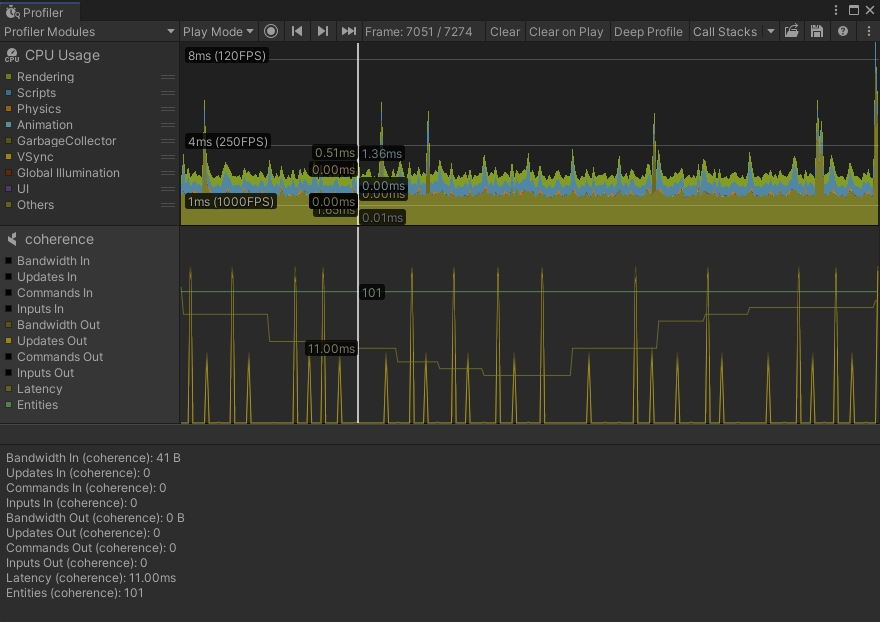

At runtime, you can inspect which Entities the Bridge is currently tracking.

A Bridge is associated with the scene it's instantiated on, and keeps track of Entities that are part of that scene. This also allows for multiple connections at the same time coming from the game or within the Unity Editor.

You can use CoherenceBridgeStore.TryGetBridge to get a CoherenceBridge associated with a scene:
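A minimal sketch of that lookup follows. It assumes the `TryGetBridge(Scene, out CoherenceBridge)` overload and the `Coherence.Toolkit` namespace; the `BridgeLocator` component is a hypothetical name used only for illustration.

```csharp
using Coherence.Toolkit;
using UnityEngine;

// Hypothetical component: only CoherenceBridgeStore.TryGetBridge comes from the text above.
public class BridgeLocator : MonoBehaviour
{
    private CoherenceBridge bridge;

    private void Start()
    {
        // Look up the Bridge that belongs to the scene this GameObject lives in.
        // The (Scene, out CoherenceBridge) signature is an assumption.
        if (!CoherenceBridgeStore.TryGetBridge(gameObject.scene, out bridge))
        {
            Debug.LogWarning($"No CoherenceBridge found for scene '{gameObject.scene.name}'.");
        }
    }
}
```

Using the Try pattern keeps the failure case explicit, which matters when several scenes (and therefore several Bridges) are loaded at once.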

The CoherenceBridge offers a couple of Unity Events in its inspector where you can hook your custom game logic:

This event is invoked when the Replication Server state has been fully synchronized. It is fired after OnConnected.

For example, if you connect to an ongoing game that has five players connected, when this event is fired all the entities and information of all the other players will already be synchronized and available to be polled.

This event is invoked the moment you establish a connection with the Replication Server, but before any synchronization has happened.

Following the previous example, if you connect to an ongoing game that has five players connected, when this event is fired, you won't have any entities or information available about those five players.

This event is invoked when you disconnect from a Replication Server. In the parameters of the event you will be given a ConnectionCloseReason value that will explain why the disconnection happened.

This event is invoked when you attempt to connect to a Replication Server but the connection fails. The event returns a ConnectionException with information about the error.

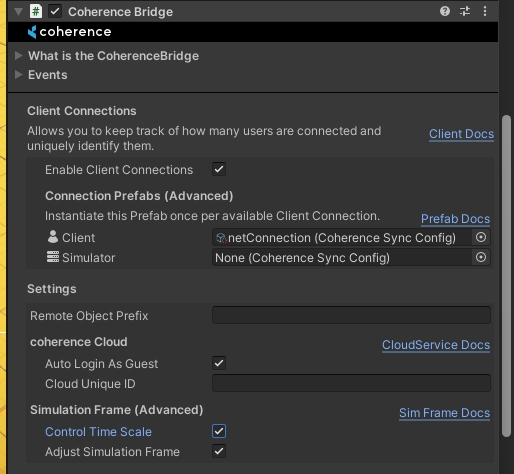

The Client Connections system allows you to keep track of how many users are connected and uniquely identify them, as well as easily send server-wide messages.

You can read more about the Client Connections system here.

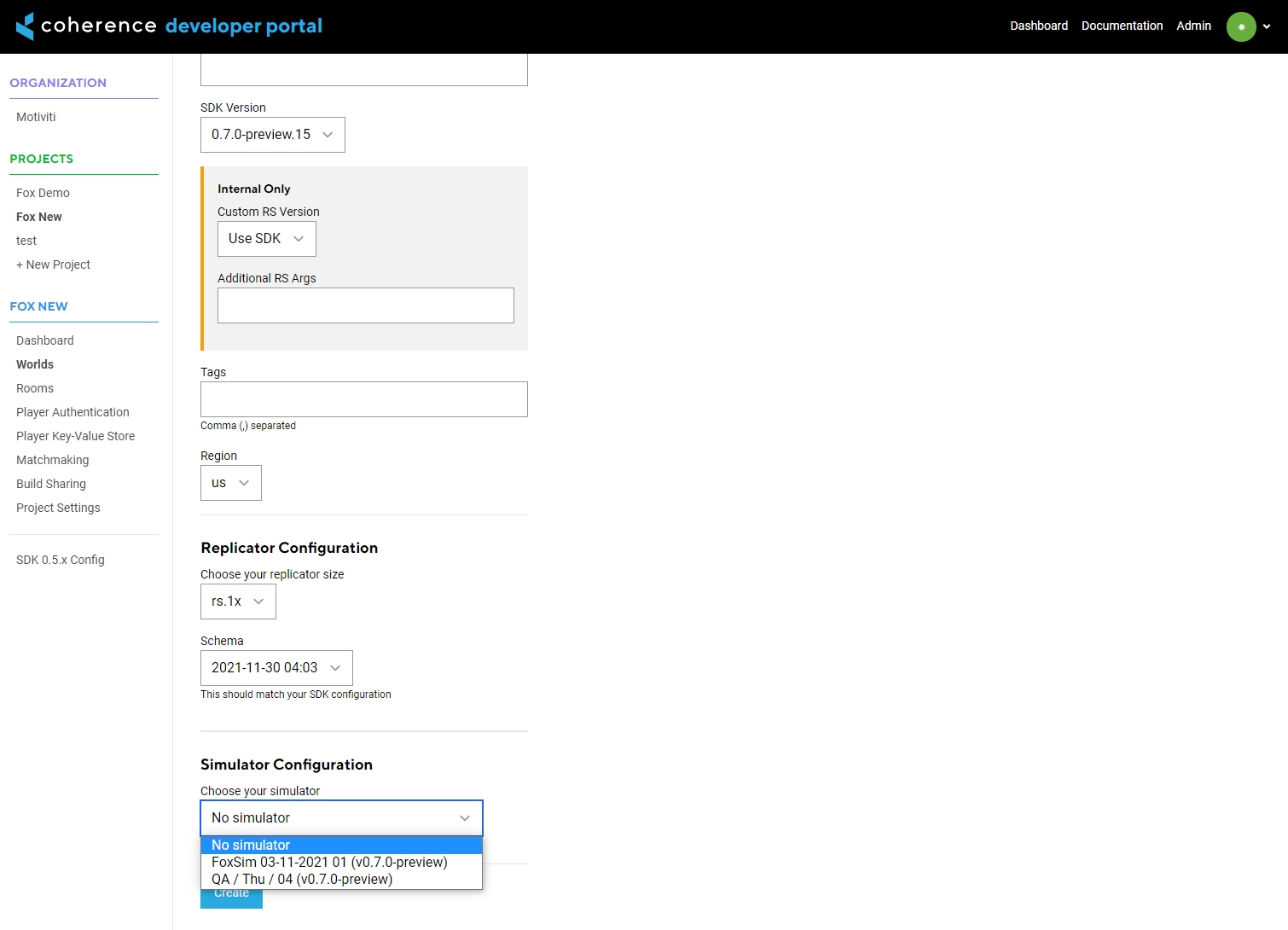

If you have a Developer Portal account, you can connect to Worlds or Rooms hosted in coherence Cloud. You can use the CloudService instance from CoherenceBridge to fetch existing Worlds, or to create or fetch Rooms. After you fetch a valid World or Room, you can use the JoinWorld or JoinRoom methods to connect your client.

You can read more about the Unity Cloud Service here.

Currently, the maximum number of persistent Entities supported by the Replication Server is 32 000. This limit will be increased in the near future.

using Coherence.Entity; -> using Coherence.Entities;

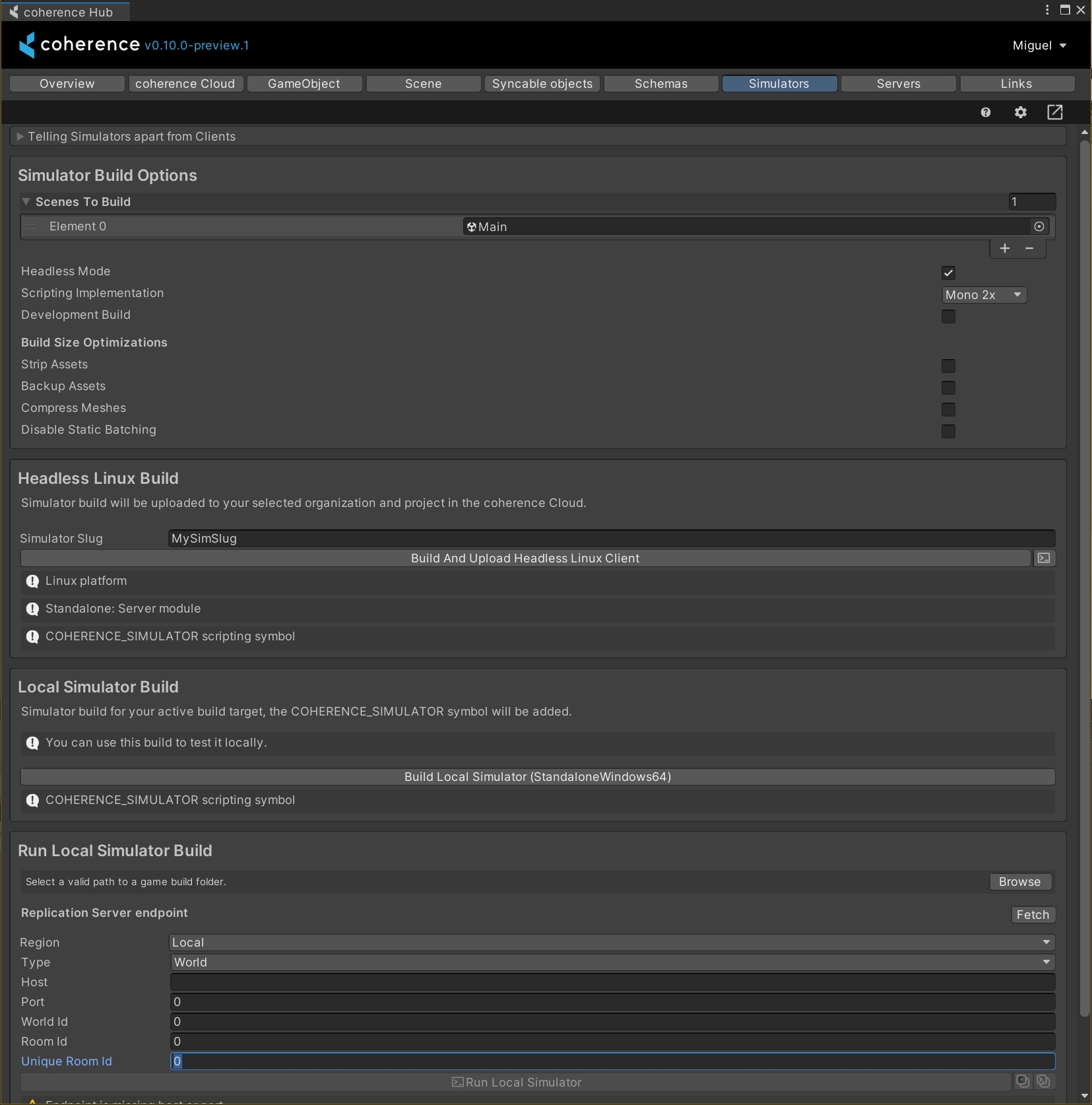

It is recommended to switch to the Assets baking strategy before upgrading. If you upgrade while using the Source Generator, you might see an error popup guiding you to switch back to Assets. After the upgrade and a clean Bake, you can switch back to the Source Generator baking strategy.

This page describes the order of various coherence events and scripts in relation to Unity's main loop.

Check out ScriptExecutionOrder.

Additionally, take a look at your project's Script Execution Order settings by opening Edit > Project Settings and selecting the Script Execution Order category. See this Unity manual article for more details.

Depending on the reason for a disconnection the onDisconnected event can be raised from different places in the code, including LateUpdate.

When a Prefab instance with CoherenceSync is created at runtime, it will be fully synchronized with the network in the OnEnable method of CoherenceSync. This means that you can expect your custom Components to have fully resolved synchronized values and authority state in your Awake method. It occurs in the following order:

Awake() is called

Internal initialization.

OnEnable() is called

Synchronize with a new or existing Network Entity.

OnBeforeNetworkedInstantiation event is invoked.

Initial component updates are applied (for entities you have no authority over).

OnNetworkedInstantiation event is invoked.

OnStateAuthority or OnStateRemote (for authority or non-authority instances respectively) event is invoked.

Awake() is called

At this point, if you get the CoherenceSync component, you can expect networked variables and authority state to be fully resolved.

Instead of hard referencing Prefabs in your scripts to instantiate them, you can reference a CoherenceSyncConfig and instantiate your local Prefab instances through our API. This will utilize the internal INetworkObjectProvider and INetworkObjectInstantiator interfaces to load and instantiate the Prefab in a given networked scene (a scene with a CoherenceBridge component in it).

You can also hard reference the Prefab in your script, and use our extensions to instantiate the Prefab easily using the internal INetworkObjectInstantiator interface implementation. The main difference is that the previous approach doesn't need a Prefab hard reference, and you won't have to change the code if the way that the Prefab is loaded into memory changes (for example, if you go from Resources to load it via Addressables).

coherence can sync the following types out of the box:

bool

int

uint

byte

char

short

ushort

float

string

Vector2

Vector3

Quaternion

GameObject

Transform

RectTransform

CoherenceSync

SerializeEntityID

byte[]

long

ulong

Int64

UInt64

Color

double

RectTransform is still in an experimental phase - use at your own discretion!

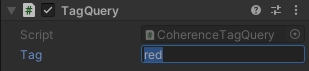

In addition to the LiveQuery, coherence also supports filtering objects by tag. This is useful when you have some special objects that should always be visible regardless of World position.

For a guide on how to use TagQuery, see .

How to parent CoherenceSync objects to each other

Out of the box, coherence offers several options to handle parenting of networked entities. While some workflows are automatic, others require a specific component to be added.

Generally, the approach depends on whether the parenting happens at runtime or at edit time, and on whether the two entities are in a direct parent-child relationship or part of a more complex hierarchy. See below for each case.

At runtime:

CoherenceSyncs as a direct child: when you create a parent-child relationship of CoherenceSync objects at runtime.

Deeply-nested CoherenceSyncs: when you create a complex parent-child relationship of CoherenceSync objects at runtime.

At edit time:

Nesting connected Prefab assets: the developer prepares several connected Prefabs and nests them inside one another before entering Play Mode. This covers both Prefabs in the scene and in the assets.

Notable API changes include:

Coherence.Network has been removed. The OnConnected and OnDisconnected events have moved to IClient and their signatures have changed:

BridgeStore.GetBridge is now obsolete, and will be replaced with BridgeStore.TryGetBridge.

CoherenceSync.isSimulated is now obsolete, and will be replaced with CoherenceSync.HasStateAuthority.

CoherenceSync.RequestAuthority is now obsolete, and will be replaced with CoherenceSync.RequestAuthority(AuthorityType).

This page lists changes in coherence 1.0.0 version which might affect existing projects when upgrading from a 0.10.x version to 1.0.0.

Version 1.0.0 includes breaking changes to the baked scripts, which means your current ones will cause compilation errors. It is recommended to delete your current baked scripts under Assets/coherence/baked before performing a coherence Unity SDK update.

For automatic migrations to run smoothly, make sure that none of your Prefabs with a CoherenceSync component attached have missing component scripts. Such Prefabs stop Unity from saving assets, which can interrupt the automatic data migrations.

There have been a number of updates to the old CoherenceMonoBridge, which have resulted in a rebranding of the component to CoherenceBridge.

If your custom scripts have references to the class CoherenceMonoBridge, you will have to rename all these references to CoherenceBridge.

The script asset GUID and the public API of the component remain unchanged, so asset references to CoherenceMonoBridge components should remain intact.

In coherence 1.0.0, we have revamped our asset management systems to make them more scalable and customizable, both in Editor and in runtime. Read more about the new implementations and the possibilities in the Asset Management article.

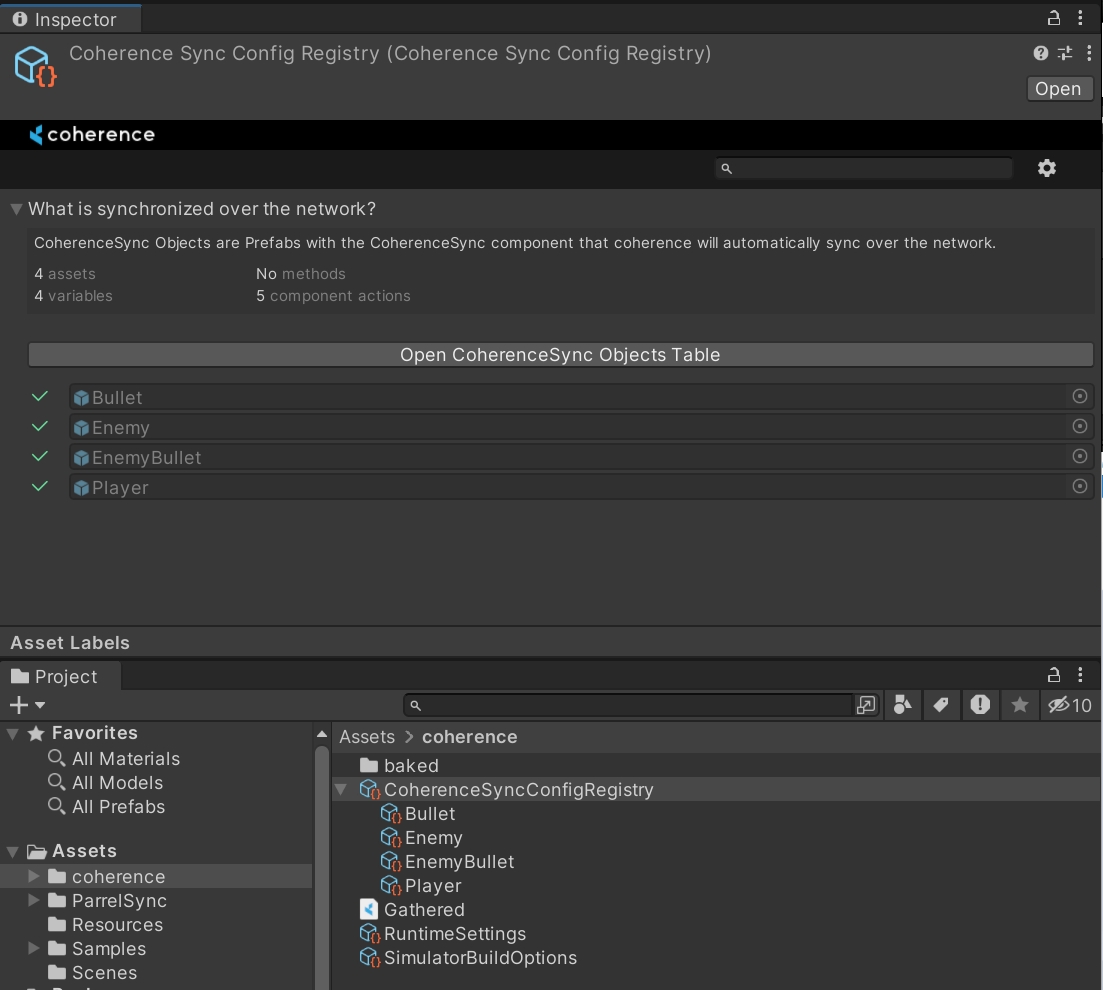

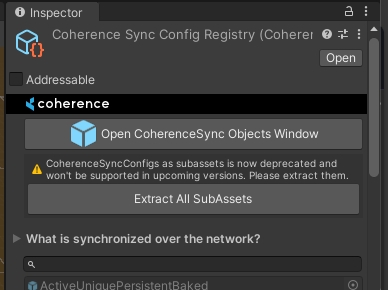

Upon upgrading the SDK, a new CoherenceSyncConfigRegistry asset will be automatically created in Assets/coherence/CoherenceSyncConfigRegistry.asset and populated with sub-assets for each of your Prefabs that have a CoherenceSync component attached.

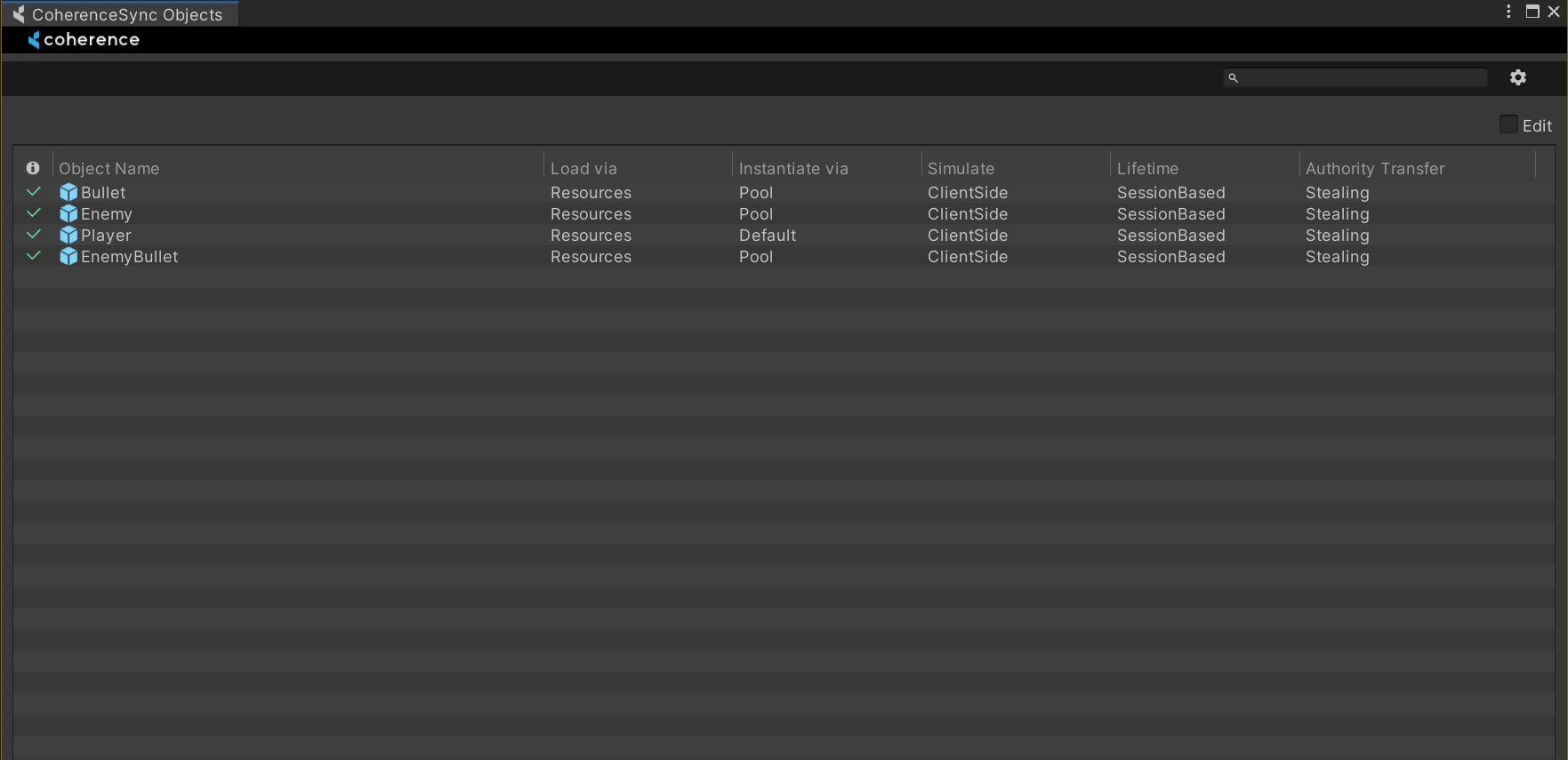

If the automatic migration completed successfully, you can browse all your networked Prefabs in the new CoherenceSync Objects window found under the coherence menu item:

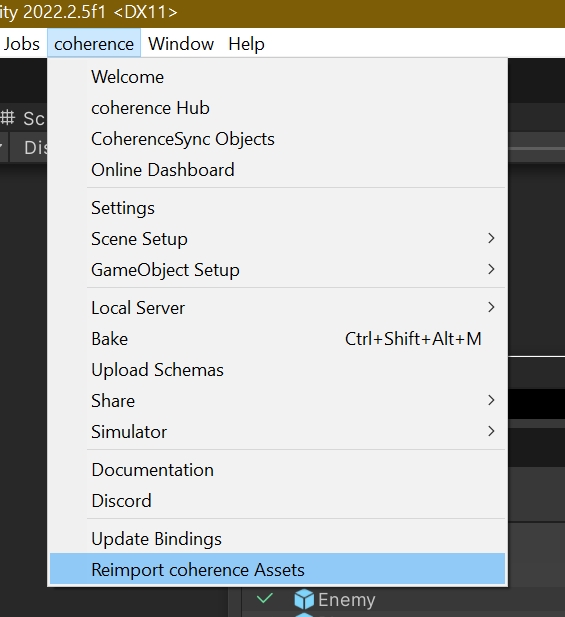

If something has gone wrong during the automatic migration, you can restart the process by deleting the current CoherenceSyncConfigRegistry asset, and selecting the Reimport coherence Assets option under the coherence menu item. This will automatically create a config entry for each of your CoherenceSync Prefabs:

In previous versions of coherence, Prefabs with the CoherenceSync component would be automatically synced over the network in the Start method, and they would stop being synced only when destroyed. This prevented us from supporting things like object pooling and reusing the same CoherenceSync instance to represent different network entities across its lifetime.

As a result, CoherenceSync instances are now automatically synced and unsynced with the network in the OnEnable and OnDisable methods of the MonoBehaviour. This means you can disable the GameObject instance in the hierarchy and it will stop being synced. You can also keep the local visual representation by disabling only the CoherenceSync component.

You can learn more about the CoherenceSync lifetime cycle in the Order Of Execution page.

This change may or may not affect your current project. If you wish to use the new Object Pooling feature (or make your own!), you might need to upgrade your custom components logic to accommodate the new paradigm.

The Play API was the public API we offered to connect with your coherence Cloud project in runtime. It has been deprecated in favor of a new non-static API called CloudService, with separation of a ReplicationServerRoomsService so you can talk to self-hosted Room Replication Servers with a lot more customization and without having to use the coherence Cloud.

The Play API includes the following classes:

PlayResolver

PlayClient

Play

WorldsResolverClient

RoomsResolverClient

If you were using PlayResolver static calls, you can now access the CloudService instance from CoherenceBridge instead. Use CloudService.Worlds to fetch Worlds, and CloudService.Rooms to create, delete or fetch available Rooms.

CloudService offers callback-based methods and an async variant for all of the available requests.

You can learn more about the Cloud Service API under the Unity Cloud Service section.

No Domain Reload is a Unity Editor option that allows users to enter Play mode very fast without having to recompile all your code. This wasn't properly supported in earlier versions of coherence, but now it is!

You can find this option under Edit > Project Settings > Editor > Enter Play Mode Settings.

Keep in mind that you might have custom implementations in your project that prevent you from using this option successfully. The most common are:

Static state and initializers: since your app domain isn't reloaded when entering Play mode, that means static contexts aren't reset either, which might cause issues.

SerializeReference instances: similar to the previous one, SerializeReference instances won't be recreated when entering Play mode, so you will have to make sure that the state of SerializeReference instances is properly reset on application quit.
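One common way to handle the static state case is Unity's `RuntimeInitializeOnLoadMethod` attribute, which runs a static method on every Play mode entry even when Domain Reload is disabled. The sketch below assumes a hypothetical `MatchStats` class; only the attribute and its `SubsystemRegistration` load type are Unity API.

```csharp
using UnityEngine;

// Hypothetical class holding static state that would otherwise
// survive between Play mode sessions when Domain Reload is off.
public static class MatchStats
{
    public static int PlayersSeen;

    // Unity calls this on every Play mode entry, before any scene loads,
    // so the static field starts from a known value each time.
    [RuntimeInitializeOnLoadMethod(RuntimeInitializeLoadType.SubsystemRegistration)]
    private static void ResetStatics()
    {
        PlayersSeen = 0;
    }
}
```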

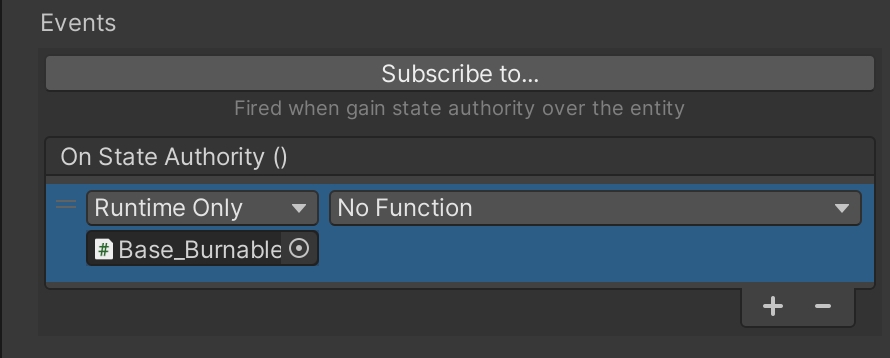

In previous releases of coherence, this event would be fired strictly for CoherenceSync prefab instances created by coherence, which means it would be fired for instances you didn't have authority over.

In 1.0, this event will be fired for every instance of CoherenceSync that starts being synchronized over the network.

If you wish to hook behaviour to non-authority instances, please use the OnStateRemote event instead, which is fired when a CoherenceSync instance is non-authoritative.

In 1.0, there have been two major changes to Client Connection instances.

Client Connection instances now live in the DontDestroyOnLoad scene. Since we now support Scene Transitioning, and Client Connection instances are managed by coherence, they need to survive the destruction of the Scene they were in.

References to the Client Connection Prefab in the CoherenceBridge Component have been changed from hard referencing a Unity Prefab, to referencing a CoherenceSyncConfig asset. If you were using a Unity Prefab reference, it still exists serialized but is deprecated. If you wish to stop using the Unity Prefab reference, please delete the CoherenceBridge Component and add it again.

For more information, see .

For detailed documentation of the updated CoherenceSync component, see .

The CLI tools have been updated, especially the ones that handle Simulators. To learn more about this, see utilities.

In this page we will learn about how coherence handles loading CoherenceSync Prefabs into memory and instantiating them when a new remote entity appears in the network. You will also learn how you can hook your own asset loading and instantiation systems seamlessly.

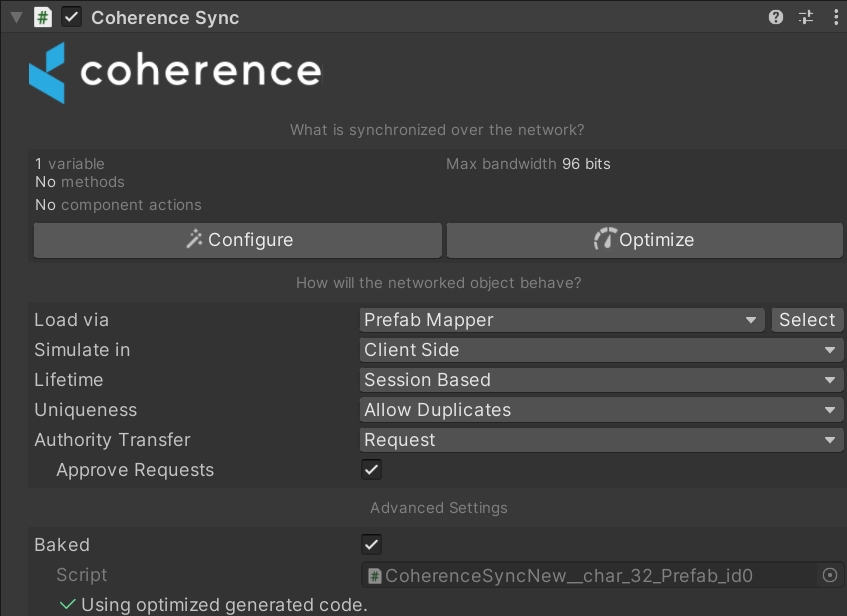

Whenever you start synchronizing one of your prefabs, either by adding the CoherenceSync component manually or clicking the Sync with coherence toggle in the prefab inspector, coherence will automatically create a CoherenceSyncConfig object that will be added to the CoherenceSyncConfigRegistry asset found in the Assets/coherence folder.

This CoherenceSyncConfig object allows us to do the following:

Hard reference the prefab in the Editor. This means that whenever we have to do postprocessing in synced prefabs, we don't have to do a lookup or load them from Resources.

Serialize the method of loading and instantiating this prefab in runtime.

Soft reference the prefab at runtime with a GUID. This means we can access the loading and instantiating implementations without having to load the prefab itself into memory.

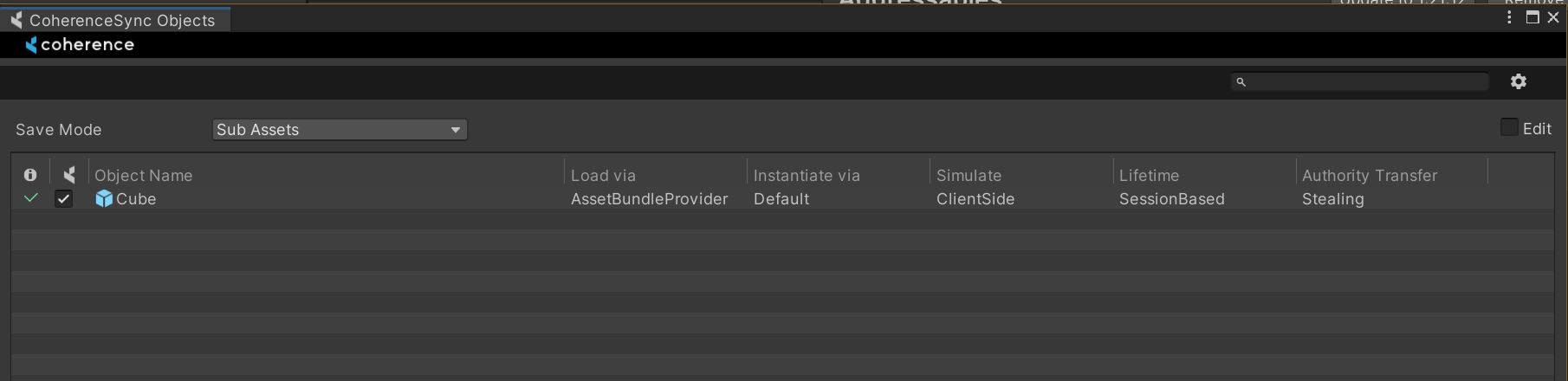

All your CoherenceSync prefabs will have a related CoherenceSyncConfig object. You can inspect all your prefabs in the CoherenceSync Objects window, found under the coherence => CoherenceSync Objects menu item:

You can also manually inspect your CoherenceSyncConfig objects by selecting the CoherenceSyncConfigRegistry asset in Assets/coherence/CoherenceSyncConfigRegistry.asset:

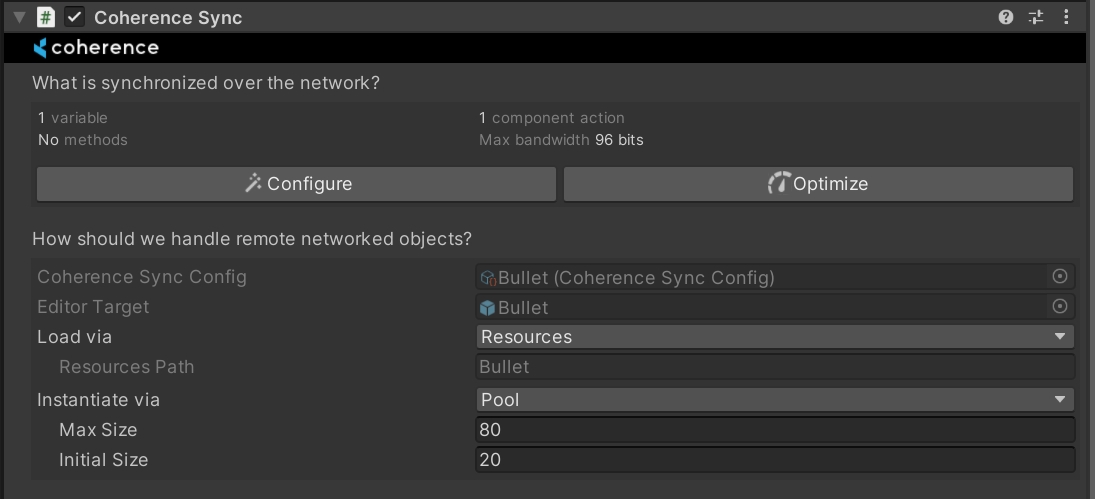

You can also find the related CoherenceSyncConfig in the inspector of the CoherenceSync component, and edit your Config directly from there:

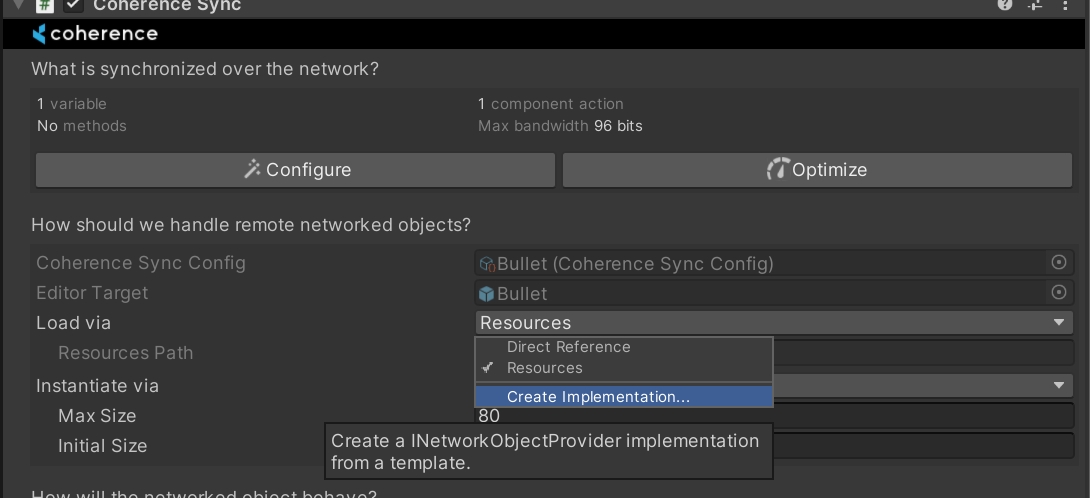

This option allows you to specify how this prefab will be loaded into memory at runtime. We support three default implementations, or you can create your own. The three default implementations are Resources, Direct Reference and Addressables. All three are managed automatically by coherence, so they need little attention from you.

The Resources loader will be used if your prefab is inside a Resources folder. If you wish to use any other loading method, you will be prompted to move the prefab outside of the Resources folder.

This loader will be used if your prefab is outside of a Resources folder, and the prefab is not marked as Addressable. This means that we will need to hard reference your prefab in the CoherenceSyncConfig, which means it will always be loaded into memory from the moment you start your game.

Addressables

This option is only available if you have the Addressables Unity Package installed.

This loader will be used if your prefab is marked as an Addressable asset, and it will be soft referenced using Addressables AssetReference class.

You can implement the INetworkObjectProvider interface to create your custom implementations that will be used by coherence when we need to load the prefab into memory.

Custom implementations can be Serializable and have your own custom serialized data.

Implementations of this interface will be automatically selectable via the Load via option in the CoherenceSyncObject asset.

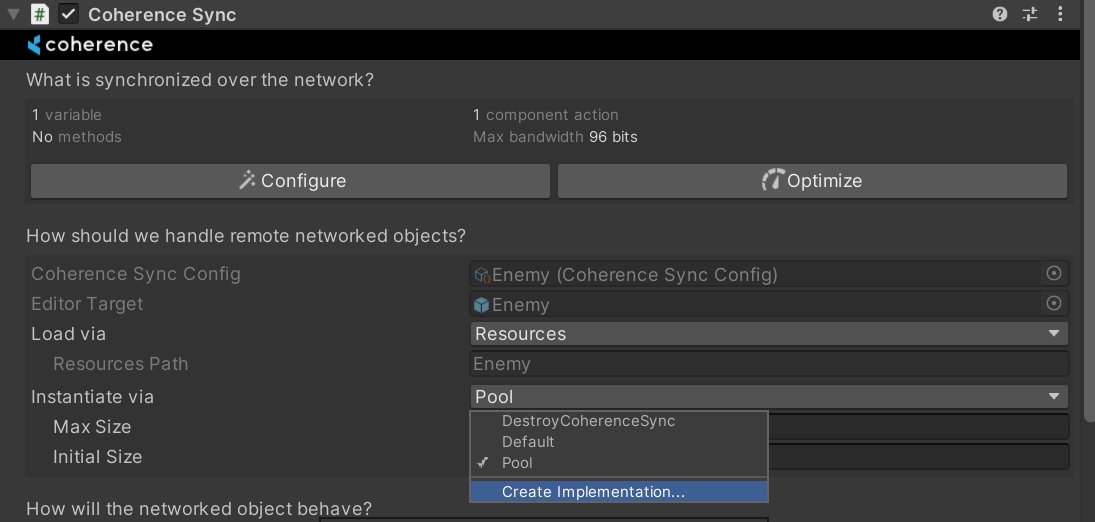

This option allows you to specify how this prefab will be instantiated at runtime. We support three default implementations, or you can create your own. The three default implementations are Default, Pooling and DestroyCoherenceSync.

This instantiator will create a new instance of your prefab, and when the related network entity is destroyed, this prefab instance will also be destroyed.

This instantiator supports object pooling. Instead of always creating and destroying instances, the pool instantiator will attempt to reuse existing instances. It has two options:

Max Size: maximum size of the pool for this prefab, instances that exceed the limit of the pool will be destroyed when returned.

Initial Size: coherence will create this number of instances on app startup.
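To make the two options concrete, here is an illustrative sketch of how a pool with an initial size and a maximum size typically behaves. This is not coherence's pool implementation; `SimplePool` and all of its members are hypothetical names, and capping the pre-created instances at the maximum size is an assumption.

```csharp
using System;
using System.Collections.Generic;

// Illustrative only: a generic pool showing the "Initial Size" and "Max Size" semantics.
public class SimplePool<T> where T : class
{
    private readonly Stack<T> idle = new Stack<T>();
    private readonly Func<T> create;
    private readonly Action<T> destroy;
    private readonly int maxSize;

    public int IdleCount => idle.Count;

    public SimplePool(Func<T> create, Action<T> destroy, int initialSize, int maxSize)
    {
        this.create = create;
        this.destroy = destroy;
        this.maxSize = maxSize;

        // "Initial Size": create instances up front (capped at maxSize here).
        for (var i = 0; i < Math.Min(initialSize, maxSize); i++)
        {
            idle.Push(create());
        }
    }

    // Reuse an idle instance when one exists, otherwise create a new one.
    public T Get()
    {
        return idle.Count > 0 ? idle.Pop() : create();
    }

    // "Max Size": instances returned to a full pool are destroyed instead of kept.
    public void Release(T instance)
    {
        if (idle.Count < maxSize)
        {
            idle.Push(instance);
        }
        else
        {
            destroy(instance);
        }
    }
}
```

With an initial size of 2 and a maximum size of 2, taking three instances and returning all three leaves two idle instances in the pool and destroys the third.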

This instantiator will create a new instance for your prefab, but instead of completely destroying the object when the related network entity is destroyed, it will destroy or disable the CoherenceSync component instead.

You can implement the INetworkObjectInstantiator interface to create your custom implementations that will be used by coherence when we need to instantiate a prefab in the scene.

Custom implementations can be Serializable and have your own custom serialized data.

Implementations of this interface will be automatically selectable via the Instantiate via option in the CoherenceSyncObject asset.

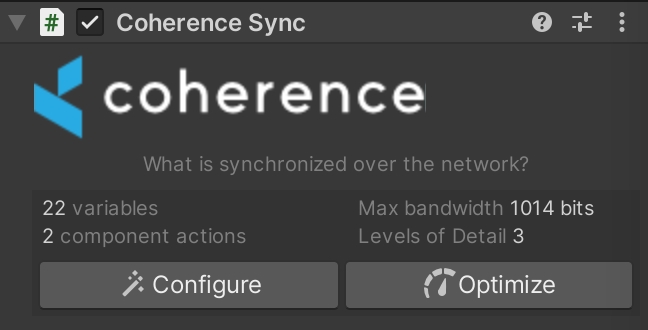

The CoherenceSync component will help you prepare an object for network synchronization. It also exposes an API that allows us to manipulate the object during runtime.

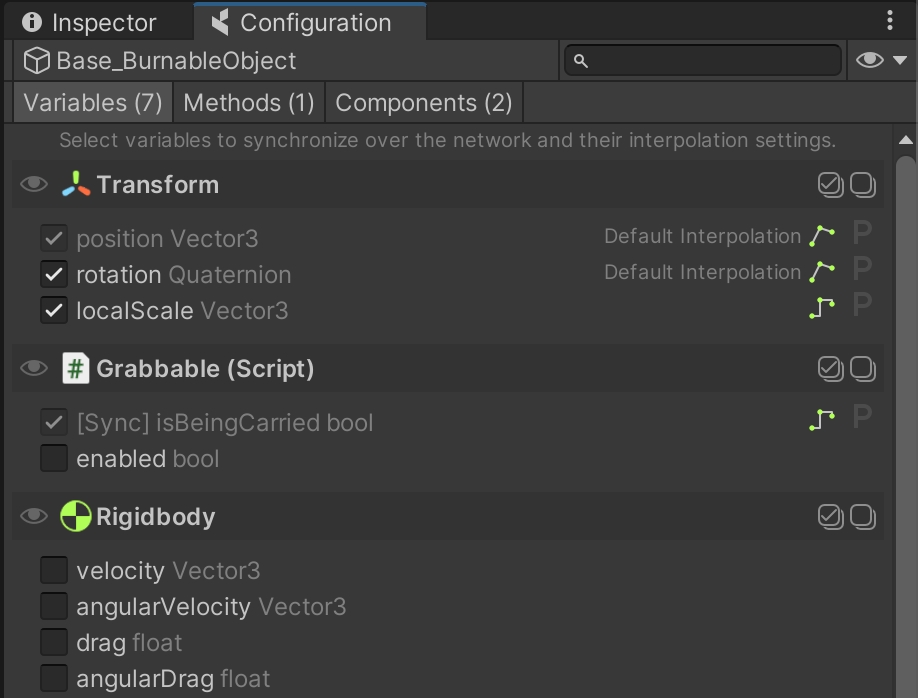

CoherenceSync will query all public variables and methods on any of the attached components, for example Unity components such as Transform, Animator, etc. This will include any custom scripts, including third-party Asset Store packages that you may have downloaded.

Refer to the Prefab setup page to learn how to configure your Prefab to network state changes.

Notifying State Changes

It is often useful to know when a synchronized variable has changed its value. It can be easily achieved using the OnValueSyncedAttribute. This attribute lets you define a method that will be called each time a value of a synced member (field or property) changes in the non-simulated version of an entity.

Let's start with a simple example:

Whenever the value of the Health field gets updated (synced with its simulated version) the UpdateHealthLabel will be called automatically, changing the health label text and printing a log with a health difference.
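The behaviour described above could look roughly like the following sketch. The `Health` field and `UpdateHealthLabel` method come from the text; the `Player` class name, the `HealthLabel` field, the `Coherence.Toolkit` namespace and the `(oldValue, newValue)` callback signature are assumptions.

```csharp
using Coherence.Toolkit;
using UnityEngine;
using UnityEngine.UI;

public class Player : MonoBehaviour
{
    // Names the method to call when the synced value changes
    // on a non-simulated instance of this Entity.
    [OnValueSynced(nameof(UpdateHealthLabel))]
    public float Health;

    // Assumed UI element used to display the value.
    public Text HealthLabel;

    // Assumed signature: previous value first, new value second.
    public void UpdateHealthLabel(float oldHealth, float newHealth)
    {
        HealthLabel.text = newHealth.ToString();
        Debug.Log($"Health changed by: {newHealth - oldHealth}");
    }
}
```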

This comes in handy in projects that use authoritative Simulators. The Client code can easily react to changes in the Player entity state introduced by the Simulator, updating the visual representation (which the Simulator doesn't need).

The OnValueSyncedAttribute requires using baked mode.

Remember that the callback method will be called only for a non-simulated instance of an Entity. Use on a simulated (owned) instance requires calling the selected method manually whenever the value of a given field/member changes. We recommend using properties with a backing field for this.

The OnValueSynced feature can be used only on members of user-defined types, that is, there's no way to be notified about a change in the value of a Unity type member, like transform.position. This might however change in the future, so stay tuned!

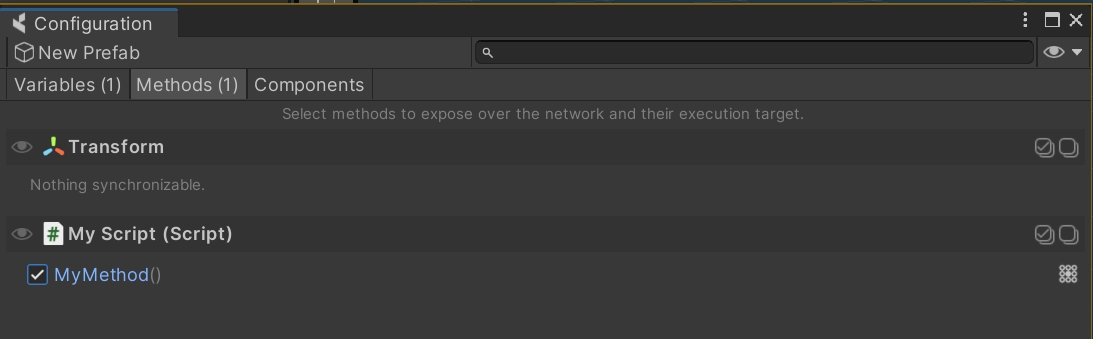

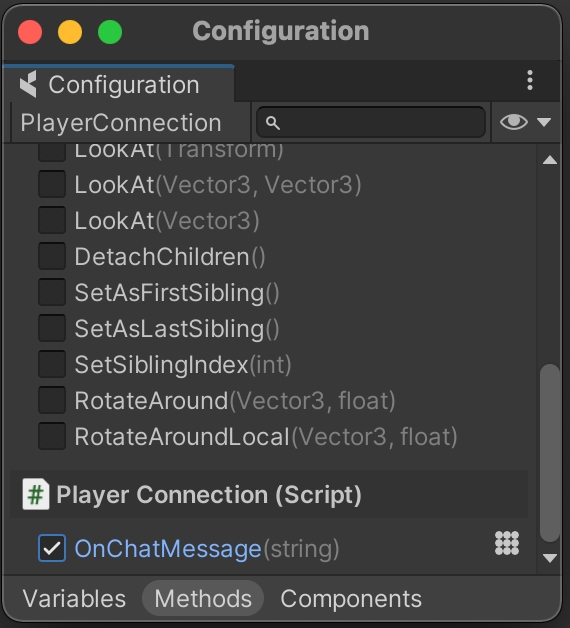

Commands are network messages sent from one CoherenceSync to another CoherenceSync. Functionally equivalent to RPCs, commands bind to public methods accessible on the GameObject hierarchy that CoherenceSync sits on.

In the design phase, you can expose public methods the same way you select fields for synchronization: through the Configure window on your CoherenceSync component.

By clicking on the method, you bind to it, defining a command. The grid icon on its right lets you configure the routing mode. Commands with the Send to Authority Only mode can be sent only to the authority of the target CoherenceSync, while ones with the Send to All Instances mode can be broadcast to all clients that see it. The routing is enforced by the Replication Server as a security measure, so that outdated or malicious clients don't break the game.

To send a command, we call the SendCommand method on the target CoherenceSync object. It takes a number of arguments:

The generic type parameter must be the type of the receiving Component. This ensures that the correct method gets called if the receiving GameObject has components that implement methods that share the same name.

Example: sync.SendCommand<Transform>(...)

If there are multiple commands bound to different components of the same type (for example, your CoherenceSync hierarchy has five Transforms, and you create a command for Transform.SetParent on all of them), the command is only sent to the first one found in the hierarchy which matches the type.

The first argument is the name of the method on the component that we want to call. It is good practice to use the C# nameof expression when referring to the method name, since it prevents accidentally misspelling it, or forgetting to update the string if the method changes name.

Alternatively, if you want to know which Client sent the command, you can add CoherenceSync sender as the first argument of the command, and the correct value will be automatically filled in by the SDK.

The second argument is an enum that specifies the MessageTarget of the command. The possible values are:

MessageTarget.All – sends the command to each Client that has an instance of this Entity.

MessageTarget.AuthorityOnly – sends the command only to the Client that has authority over the Entity.

MessageTarget.Other – sends the command to every Entity other than the one SendCommand is called on.

Mind that the target must be compatible with the routing mode set in the bindings, i.e. Send to Authority Only will allow only for MessageTarget.AuthorityOnly, while Send to All Instances allows for both values.

Also, it is possible that the message is never sent, as in the case of a command with MessageTarget.Other sent from the authority with Send to Authority Only routing.

The rest of the arguments (if any) vary depending on the command itself. We must supply as many parameters as are defined in the target method and the schema.

Here's an example of how to send a command:
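A minimal sketch, assuming the `SendCommand<T>(string, MessageTarget, params object[])` shape described above and the `Coherence` and `Coherence.Toolkit` namespaces. The `Health` and `Attacker` components and the `TakeDamage` method are hypothetical, and `TakeDamage` would need to be bound as a command in the Configure window.

```csharp
using Coherence;
using Coherence.Toolkit;
using UnityEngine;

// Hypothetical receiving component. In a real project each
// MonoBehaviour would live in its own file.
public class Health : MonoBehaviour
{
    public int hitPoints = 100;

    // Public method that is bound as a command.
    public void TakeDamage(int amount)
    {
        hitPoints -= amount;
    }
}

// Hypothetical sender.
public class Attacker : MonoBehaviour
{
    public void Hit(CoherenceSync target)
    {
        // Generic type: the receiving component.
        // First argument: the method name, via nameof to avoid typos.
        // Second argument: who should receive the command.
        // Remaining arguments: the parameters of TakeDamage.
        target.SendCommand<Health>(nameof(Health.TakeDamage), MessageTarget.AuthorityOnly, 25);
    }
}
```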

If you have the same command bound more than once in the same Prefab hierarchy, you can target a specific MonoBehaviour when sending a message via the SendCommand(Action action) method in CoherenceSync.

Additionally, if you want to target every bound MonoBehaviour, you can do so via the SendCommandToChildren method in CoherenceSync.

We don't have to do anything special to receive the command. The system will simply call the corresponding method on the target network entity.

If the target is a locally simulated entity, SendCommand will recognize that and not send a network command, but instead simply call the method directly.

While commands by default carry no information on who sent them in order to optimise traffic, you can create commands that include a ClientID as one of the parameters. Then, on the receiving end, compare that value with a list of connected Clients.

Another useful way to access ClientID is via CoherenceBridge, like this:

You can create your own implementation for these IDs or, more simply, use coherence's built-in Client Connections feature.

Sometimes you want to inform a bunch of different CoherenceSyncs about a change. For example, an explosion impact on a few players. To do so, we have to go through the instances we want to notify and send commands to each of them.

In this example, a command will get sent to each CoherenceSync under the state authority of this Client. To make it only affect CoherenceSyncs within certain criteria, you need to filter to which CoherenceSync you send the command to, on your own.
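As a sketch of such filtering, the loop below only notifies objects within a blast radius. It is not the original example: the `BlastReceiver` and `Explosion` components, the `ApplyBlast` method and the radius filter are hypothetical, and the `SendCommand` signature and namespaces are assumed as described earlier on this page.

```csharp
using Coherence;
using Coherence.Toolkit;
using UnityEngine;

// Hypothetical receiver; ApplyBlast must be bound as a command in the Configure window.
public class BlastReceiver : MonoBehaviour
{
    public void ApplyBlast(float force)
    {
        Debug.Log($"{name} was hit with force {force}");
    }
}

// Hypothetical sender. In a real project each MonoBehaviour would live in its own file.
public class Explosion : MonoBehaviour
{
    public float radius = 5f;

    public void Detonate()
    {
        // Walk over every CoherenceSync currently loaded.
        foreach (var sync in FindObjectsOfType<CoherenceSync>())
        {
            // Custom filter: skip anything outside the blast radius.
            if (Vector3.Distance(transform.position, sync.transform.position) > radius)
            {
                continue;
            }

            // Skip objects that cannot receive this command.
            if (sync.GetComponent<BlastReceiver>() == null)
            {
                continue;
            }

            sync.SendCommand<BlastReceiver>(nameof(BlastReceiver.ApplyBlast), MessageTarget.AuthorityOnly, 10f);
        }
    }
}
```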

Some of the supported primitive types are nullable. These include:

Byte[]

string

Entity references: CoherenceSync, Transform, and GameObject

Refer to the supported types page.

In order to send one of these values as a null (or default) we need to use special syntax to ensure the right method signature is resolved.

Null-value arguments need to be passed as a ValueTuple<Type, object> so that their type can be correctly resolved. For example, sending a null value for a string is written as:

(typeof(string), (string)null)

and the null Byte[] argument is written as:

(typeof(Byte[]), (Byte[])null)
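Put together, a call that sends both null arguments might look like the sketch below. The tuple syntax is taken from the text; the `Mailbox` component and its methods are hypothetical, and the `SendCommand` signature and namespaces are assumed.

```csharp
using System;
using Coherence;
using Coherence.Toolkit;
using UnityEngine;

// Hypothetical component; Receive must be bound as a command in the Configure window.
public class Mailbox : MonoBehaviour
{
    public void Receive(string text, Byte[] payload)
    {
        // Handle both null and the equivalent default (empty string or empty array).
        var length = payload?.Length ?? 0;
        Debug.Log($"text='{text ?? string.Empty}', payload bytes={length}");
    }

    public void SendEmpty(CoherenceSync target)
    {
        // Each null argument is wrapped in a (Type, value) tuple, in the
        // same order as the parameters of Receive.
        target.SendCommand<Mailbox>(nameof(Receive), MessageTarget.All,
            (typeof(string), (string)null),
            (typeof(Byte[]), (Byte[])null));
    }
}
```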

Misordered arguments, type mismatches, or unresolvable types will result in logged errors and the command not being sent.

When a null argument is deserialized on a client receiving the command, it is possible that the null value is converted into a non-null default value. For example, sending a null string in a command could result in clients receiving an empty string. As another example, a null Byte[] argument could be deserialized into an empty Byte[0] array. So, receiving code should be ready for either a null value or an equivalent default.

When a Prefab is not using a baked script there are some restrictions for what types can be sent in a single command:

4 entity references

maximum of 511 bytes total of data in other arguments

a single Byte[] argument can be no longer than 509 bytes because of overhead

Some network primitive types send extra data when serialized (like Byte arrays and string types) so gauging how many bits a command will use is difficult. If a single command is bigger than the supported packet size, it won't work even with baked code. For a good and performant game experience, always try to keep the total command argument sizes low.
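The limits listed above for Prefabs without a baked script can be expressed as a simple pre-check. The helper below is hypothetical and not part of the coherence SDK. The caller has to supply the sizes, since the serialized size of strings and byte arrays includes overhead that is hard to predict.

```csharp
// Hypothetical helper: encodes the three limits stated above.
public static class CommandLimits
{
    public const int MaxEntityReferences = 4;
    public const int MaxOtherArgumentBytes = 511;
    public const int MaxByteArrayLength = 509;

    // Returns true when the given argument sizes stay within the limits
    // for a command on a Prefab that is not using a baked script.
    public static bool FitsUnbakedCommand(int entityReferences, int otherArgumentBytes, int longestByteArray)
    {
        return entityReferences <= MaxEntityReferences
            && otherArgumentBytes <= MaxOtherArgumentBytes
            && longestByteArray <= MaxByteArrayLength;
    }
}
```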

When a Client receives a command targeted at AuthorityOnly but has already transferred authority over that Entity, the command is simply discarded.

Entity references let you set up references between Entities and have those be synchronized, just like other value types (like integers, vectors, etc.)

To use Entity references, simply select any fields of type GameObject, Transform, or CoherenceSync for syncing in the Configuration window:

The synchronization works both when using reflection and in baked sync scripts.

Entity references can also be used as arguments in Commands.

It's important to know about the situations when an Entity reference might become null, even though it seems like it should have a value:

A client might not have the referenced entity in its LiveQuery. A local reference can only be valid if there's an actual Entity instance to reference. If this becomes a problem, consider switching to using the CoherenceNode component or Parent-Child relationships of prefabs, which ensure that the Entity stays part of the query.

The owner of the Entity reference might sync the reference to the Replication Server before syncing the referenced Entity. This will lead to the Replication Server storing a null reference. If possible, try setting the Entity references during gameplay when the referenced Entities have already existed for a while.

Cyclic references are undefined behavior for now. Therefore multiple entities created on the same Client that reference each other might never get synced properly. This also holds true for references that exist through intermediate entities (A references B, B references C, and C references A: cyclic).

In any case, it's important to use a defensive coding style when working with Entity references. Make sure that your code can handle missing Entities and nulls in a graceful way.
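A minimal sketch of that defensive style, assuming a hypothetical `Follower` component whose `target` field has been selected for syncing:

```csharp
using Coherence.Toolkit;
using UnityEngine;

// Hypothetical component that follows another networked Entity.
public class Follower : MonoBehaviour
{
    // Synced Entity reference; may be null at any time for the reasons listed above.
    public CoherenceSync target;

    public float speed = 2f;

    private void Update()
    {
        // Unity's overloaded null check also covers destroyed objects.
        if (target == null)
        {
            return;
        }

        transform.position = Vector3.MoveTowards(
            transform.position,
            target.transform.position,
            speed * Time.deltaTime);
    }
}
```

Checking the reference every frame, rather than once at startup, covers the case where the referenced Entity leaves the LiveQuery and comes back later.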

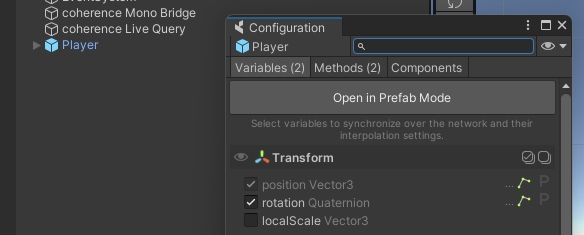

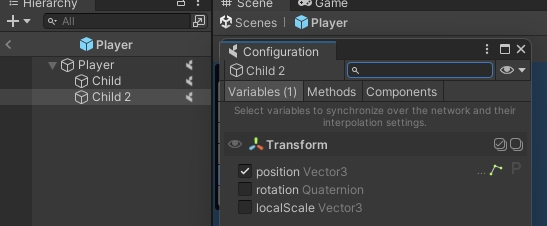

Binding to variables and methods within the hierarchy

If a synced Prefab has a hierarchy, you can synchronize variables, methods and component actions for any of the child GameObjects within its hierarchy.

Note: on this page we cover child GameObjects or nested Prefabs that don't have their own CoherenceSync.

If a child object has a CoherenceSync of its own, it becomes an independent network entity. For that, see the Parenting section.

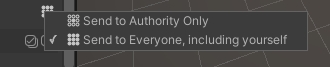

When the Configure window is open it will show the variables, methods and component actions available for synchronization for your currently selected GameObject.

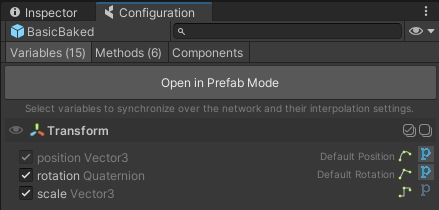

First, make sure to be editing the Prefab in Prefab Mode:

Once in Prefab Mode and with the Configure window open, shift the selection to any of the GameObjects that belong to the hierarchy.

The Configure window will be updated automatically, showing you everything that is available to be synchronized on the child GameObject:

That's it!

Syncing properties, methods and component actions on child GameObjects doesn't require any different flow than what you usually do for the root object. They all get collected and networked as part of one single network entity.

After making changes to the GameObject, don't forget to Bake again to rebuild the netcode for the entity.

Make sure not to destroy child GameObjects that have synced properties, or you will receive a warning in the Console. To destroy a synced object, always remove the root.

(You can safely destroy children that don't have any synced properties.)

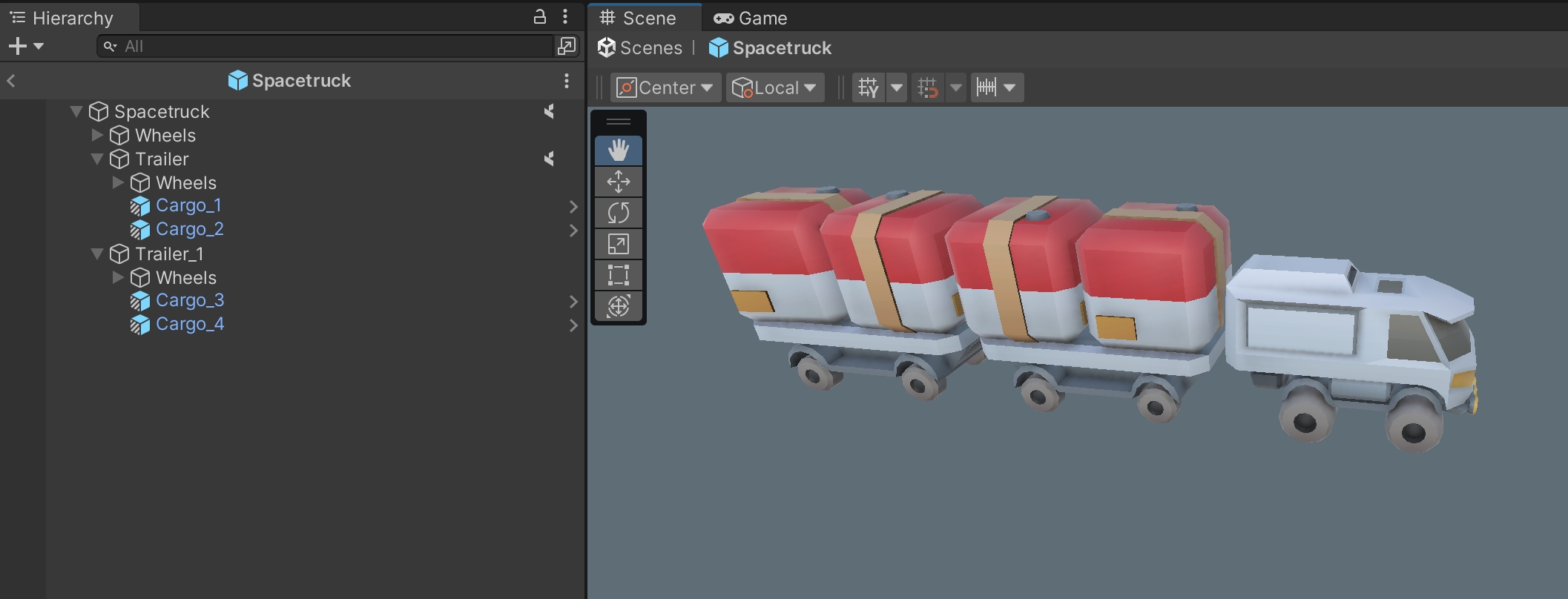

Preparing nested connected Prefabs at edit time

coherence supports all Prefab-related Unity workflows, and nesting is one of them. It can make a lot of sense to prepare multiple networked Prefabs, parent them to each other, and either place them in the scene, or save them as a complex Prefab, ready to be instantiated. This page covers these cases.

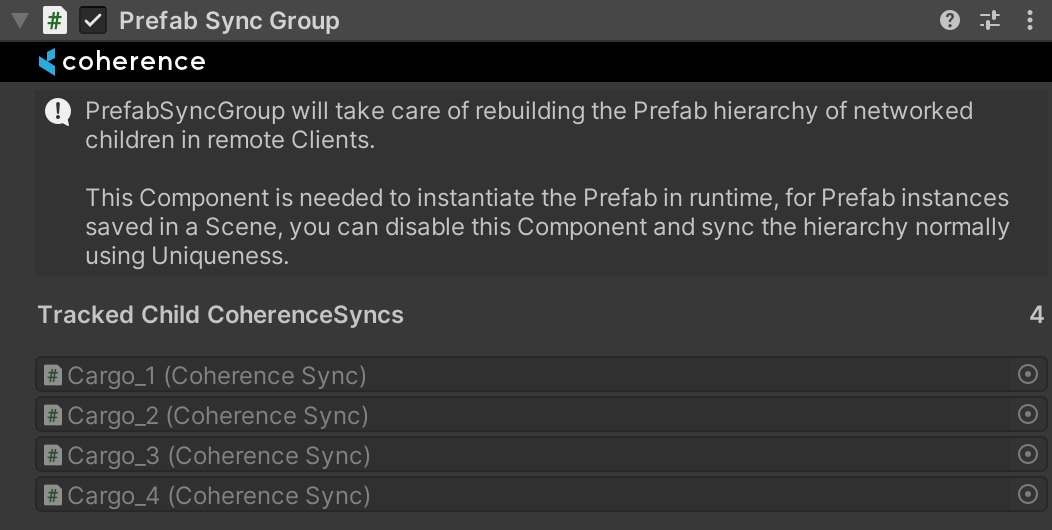

When preparing a networked Prefab that contains another networked Prefab, one extra component is needed to allow coherence to sync the whole hierarchy: PrefabSyncGroup.

For instance, let's suppose we have a vehicle in an RTS that can carry cargo, and it comes with cargo pre-loaded when it's instantiated:

In this example, Spacetruck is a synced Prefab, with 4 instances of the synced Prefab Cargo nested within. To make this work, we add a PrefabSyncGroup to the root:

The component keeps track of child Prefabs that are also synced Prefabs. Now, whenever Spacetruck is instantiated, PrefabSyncGroup makes sure to take 4 instances of Cargo and link the Prefab instances to the correct network entities.

So to recap:

The outermost Prefab needs CoherenceSync and PrefabSyncGroup.

The child Prefabs need CoherenceSync and, optionally, CoherenceNode.

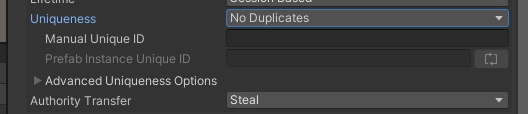

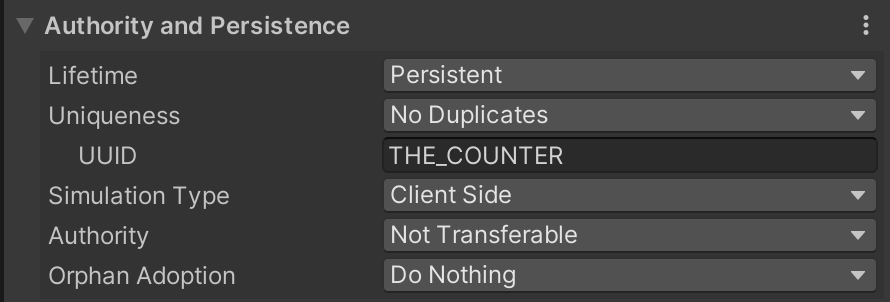

When preparing such a Prefab, you need to set the Uniqueness property to No Duplicates. This ensures that, once multiple Clients connect and open the same scene, the synced Prefabs contained within are not spawned on the network multiple times.

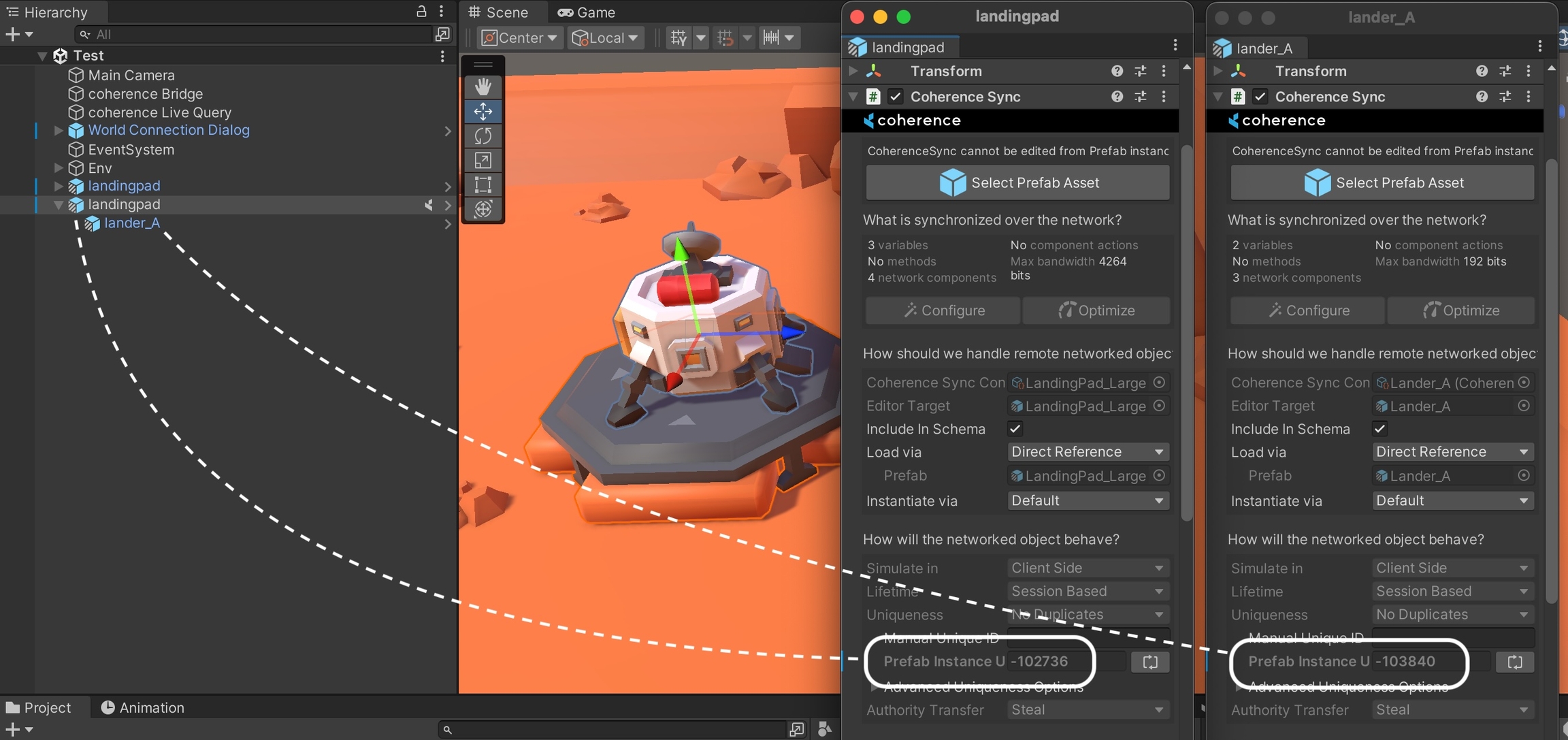

Let's suppose we have a networked Prefab that represents a structure in an RTS (a LandingPad) that can be pre-placed in the scene. This structure also contains a networked vehicle Prefab (a Lander). This Prefab is synced as an independent network entity because at runtime it can detach, change ownership, be destroyed, etc.

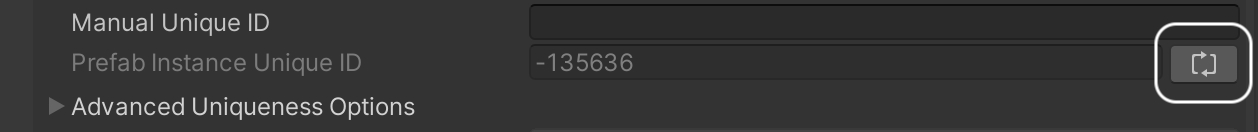

To achieve this, all we need to do is ensure that both Prefabs are set to be unique. When we drag-and-drop the LandingPad Prefab into the scene, coherence automatically assigns a randomly-generated Prefab Instance Unique ID as an override. This number identifies these particular instances of these two Prefabs in the scene.

With this setting, we don't need to do anything else for these compound Prefabs to work.

To recap:

The outermost Prefab needs its Uniqueness set to No Duplicates. Optionally, you can add PrefabSyncGroup to enable runtime-instantiation.

Any child Prefab also needs its Uniqueness set to No Duplicates. It also needs a CoherenceNode if it's parented deep in the hierarchy.

An important thing to keep in mind when working with compound Prefabs in the scene: when you add a new nested synced Prefab to an existing one that has already been placed in the scene a few times, the Prefab Instance Unique ID for these instances will initially be the same.

For this reason, once you play the game, you might see all children disappear (except one). That is normal: coherence thinks that all these network entities are the same, because they have the same uniqueness ID.

You need to ensure that these new children have an overridden and unique ID on each instance in the scene. To do so, click on the button next to the Prefab Instance Unique ID for each child that needs it:

CoherenceSync is a component that should be attached to every networked GameObject: anything that needs to be synchronized over the network and turned into an Entity. It may be your player, an NPC or an inanimate object such as a ball, a projectile or a banana.

Once a CoherenceSync is added to a Prefab, you can select which individual public properties you would like to sync across the network, expose methods as Network Commands, and configure other network-related properties.

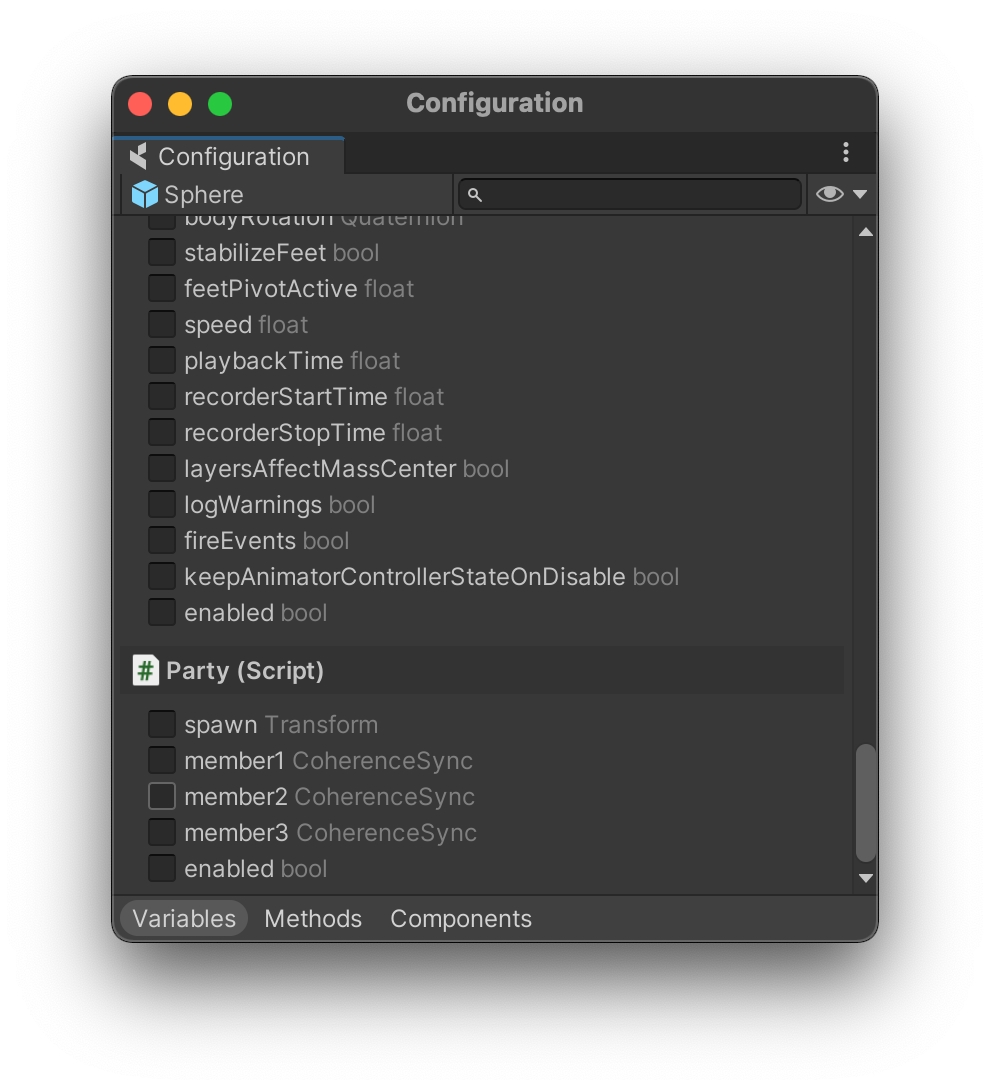

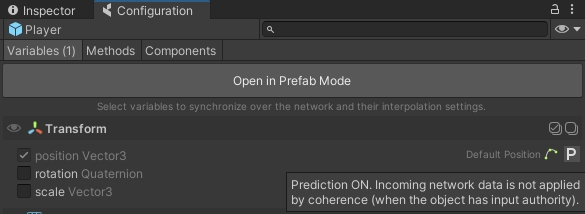

To start syncing variables, open the Configure window that you can access from the CoherenceSync's Inspector.

Any components attached to the GameObject with CoherenceSync that have public variables will be shown here and can be synced across the network.

Network Commands are public methods that can be invoked remotely. In other networking frameworks they are often referred to as RPCs (Remote Procedure Calls).

To mark a method as a Command, select it in the Configure window in the same way as described above for syncing properties, but under the second tab, labelled "Commands".

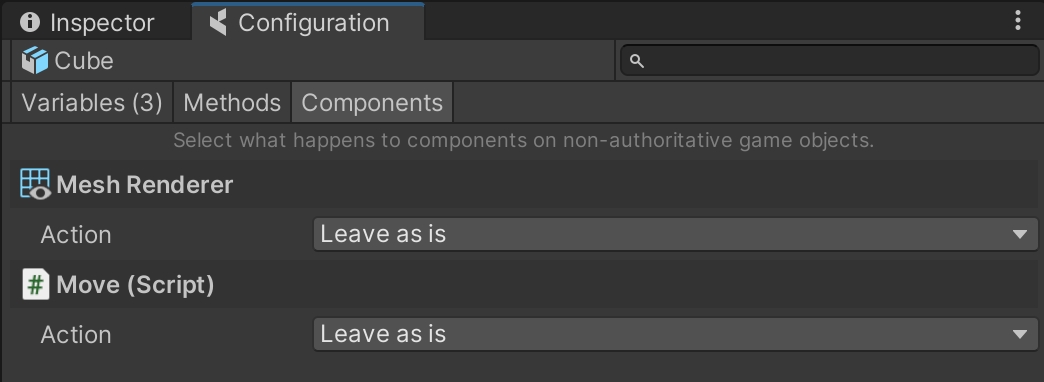

When an entity is instantiated in the network, other Clients will see it but they won't have authority on it. It is then important to ensure that some components behave differently when an entity is non-authoritative.

To quickly achieve this, you can leverage Component Actions, which are located in the Components tab of the Configure window:

(You can notice in the screenshot above how the isBeingCarried property is synced in code, and displays the [Sync] tag in front of its name.)

The two workflows can be used together, even on the same Prefab!

When you create a networked GameObject, you automatically become the owner of that GameObject. That means only you are allowed to update its values, or destroy it. But sometimes it is necessary to pass ownership from one Client to another. For example, you could snatch the football in a soccer game or throw a mind control spell in a strategy game. In these cases, you will need to transfer ownership over these Entities from one Client to another.

When an authority transfer request is performed, an Entity can be set up to respond in different ways to account for different gameplay cases:

Not Transferable - Authority requests will always fail. This is a typical choice for player characters.

Steal - Authority requests always succeed.

Request - This option is intended for conditional transfers. The owner of an Entity can reply to an authority request by either accepting or denying it.

Approve Requests - The requests will succeed even if no event listener is present.

Note that for Request, a listener to the event OnAuthorityRequested needs to be provided in code. If not present, the optional parameter Approve Requests can be used as a fallback. This is only useful in corner cases where the listener is added and removed at runtime. In general, you can simply set the transfer style to Steal and all requests will automatically succeed.
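The decision rules above can be summarized as a small function. This is an engine-agnostic Python sketch rather than coherence SDK code: `TransferStyle` and `resolve_authority_request` are hypothetical names, and the sketch assumes that a registered listener always decides the outcome and that a Request with no listener and Approve Requests disabled is denied.

```python
from enum import Enum


class TransferStyle(Enum):
    NOT_TRANSFERABLE = "Not Transferable"
    STEAL = "Steal"
    REQUEST = "Request"


def resolve_authority_request(style, listener=None, approve_requests=False):
    """Return True if an authority request succeeds under the given settings.

    `listener` stands in for an OnAuthorityRequested handler: a callable that
    returns True (accept) or False (deny), or None when none is registered.
    """
    if style is TransferStyle.NOT_TRANSFERABLE:
        return False
    if style is TransferStyle.STEAL:
        return True
    # Request: the owner's listener decides; Approve Requests is the fallback
    # (assumption: with neither in place, the request is denied).
    if listener is not None:
        return bool(listener())
    return approve_requests
```

For example, `resolve_authority_request(TransferStyle.REQUEST, approve_requests=True)` succeeds even though no listener is present, which is the corner case Approve Requests is meant for.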

Any Client or Simulator can request ownership by invoking the RequestAuthority() method on the CoherenceSync component of a Network Entity:

A request will be sent to the Entity's current owner. They will then accept or deny the request, and complete the transfer. If the transfer succeeds, the previous owner is no longer allowed to update or destroy the Entity.

When a Client disconnects, all the Network Entities created by that Client are usually destroyed. If you want any Entity to stay after the owner disconnects, you need to set Entity lifetime type of that Prefab to Persistent.

Session Based - the Entity will be removed on all other Clients, when the owner Client disconnects.

Orphaned Entities

By making the GameObject persistent, you ensure that it remains in the game world even after its owner disconnects. But once the GameObject has lost its owner, it will remain frozen in place because no Client is allowed to update or delete it. This is called an orphaned GameObject.

In order to make the orphaned GameObject interactive again, another Client needs to take ownership of it. To do this, one can use APIs (specifically, Adopt()) or – more conveniently – enable Auto-adopt orphan on the Prefab.
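To make the interaction between lifetime and adoption concrete, here is a minimal Python model of what happens to one Entity when its owner disconnects. It is an illustration only, not SDK code: the dictionary keys and the `on_owner_disconnected` function are hypothetical, and which Client adopts an orphan is an arbitrary choice made for the sketch.

```python
def on_owner_disconnected(entity, connected_clients):
    """Apply the lifetime rules to one entity whose owner has just left.

    `entity` is a dict with the hypothetical keys "lifetime" ("session" or
    "persistent"), "auto_adopt" (bool) and "owner".
    Returns the resulting state: "destroyed", "adopted" or "orphaned".
    """
    if entity["lifetime"] == "session":
        entity["owner"] = None
        return "destroyed"  # Session Based entities are removed everywhere
    if entity["auto_adopt"] and connected_clients:
        entity["owner"] = connected_clients[0]  # arbitrary pick for this sketch
        return "adopted"
    entity["owner"] = None
    return "orphaned"  # persists, but nobody may update or delete it


door = {"lifetime": "persistent", "auto_adopt": False, "owner": "client-1"}
print(on_owner_disconnected(door, ["client-2"]))
```

In the example, the door stays in the world but becomes orphaned, because it is persistent and auto-adopt is off.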

Allow Duplicates - multiple copies of this object can be instantiated over the network. This is typical for bullets, spell effects, RTS units, and similar repeated Entities.

No Duplicates - ensures objects are not duplicated by assigning them a Unique ID.

Manual Unique ID - You can set the Unique ID manually in the Prefab. Only one Prefab instance will be allowed at runtime; any other instance created with the same UUID will be destroyed.

Prefab Instance Unique ID - When creating a Prefab instance in the Scene at Editor time, a special Prefab Instance Unique ID is assigned. If the manual UUID is blank, the UUID assigned at runtime will be the Prefab Instance Unique ID.

Manual ID vs. Prefab Instance ID

To understand the difference between these two IDs, consider the following use cases:

Manager: If your game has a Prefab of which there can only be 1 in-game instance at any time (such as a Game Manager), assign an ID manually on the Prefab asset.

Multiple interactable scene objects: If you have several instances of a given Prefab, but each instance must be unique (such as doors, elevators, pickups, traps, etc.), each instance created at Editor time will have an auto-generated Prefab Instance Unique ID. This will ensure that when 2 players come online, they only bring one copy of any given door/trap/pickup, but each of them still replicates its state across the network to all Clients currently in the same scene.
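The two use cases can be modelled in a few lines. The sketch below is plain Python, not SDK code, and all names in it are hypothetical. It assumes the rule stated above: the manual ID is used when set, otherwise the Prefab Instance Unique ID, and any later instance with an already-seen ID is destroyed.

```python
def effective_unique_id(manual_id, prefab_instance_id):
    """Pick the ID used at runtime: the manual ID wins, else the instance ID."""
    return manual_id if manual_id else prefab_instance_id


def spawn_unique(instances):
    """Keep the first instance per unique ID and report the rest as destroyed.

    `instances` is a list of (name, manual_id, prefab_instance_id) tuples.
    """
    seen, kept, destroyed = set(), [], []
    for name, manual_id, instance_id in instances:
        uid = effective_unique_id(manual_id, instance_id)
        if uid in seen:
            destroyed.append(name)
        else:
            seen.add(uid)
            kept.append(name)
    return kept, destroyed


kept, destroyed = spawn_unique([
    ("GameManager#1", "gm", "a1"),
    ("GameManager#2", "gm", "b2"),  # same manual ID: treated as a duplicate
    ("Door#1", "", "d1"),
    ("Door#2", "", "d2"),           # different instance IDs: both survive
])
print(kept, destroyed)
```

The same model explains why scene instances that share one Prefab Instance Unique ID collapse into a single entity at runtime.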

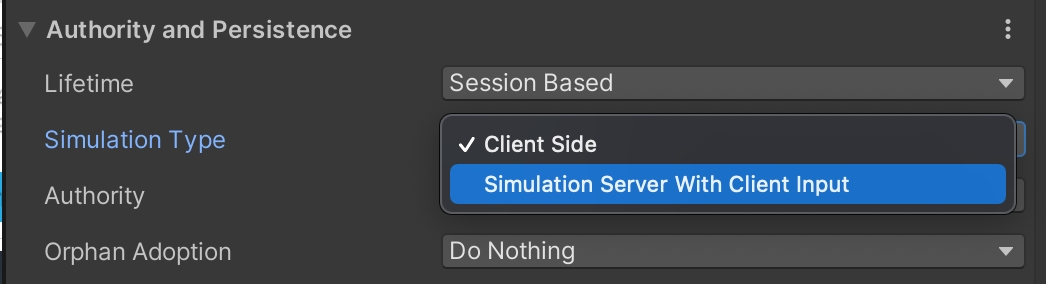

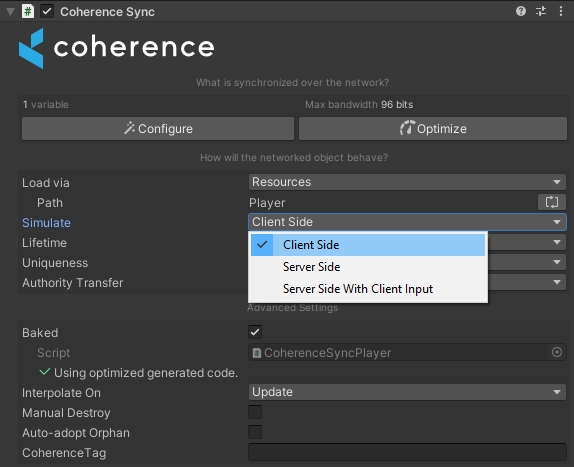

Defines which type of network node (Client or Simulator) can have authority over this Entity.

Client Side - The Entity is by default owned by the Client that spawns it. It can be also owned by a Simulator.

Server Side - The Entity can't be owned by a normal Client, but only by a "server" (in coherence called Simulator).

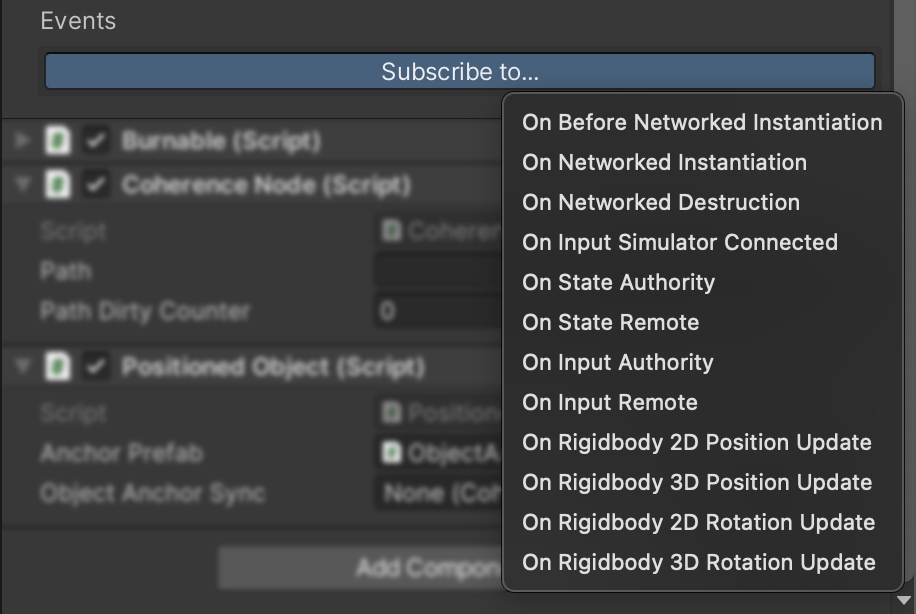

You can hook into the events fired by the CoherenceSync to conveniently structure gameplay in response to key moments of the component's lifecycle. Events are initially hidden, but you can reveal them using the button at the bottom of the Inspector called "Subscribe to...".

You can also subscribe to these events in code.

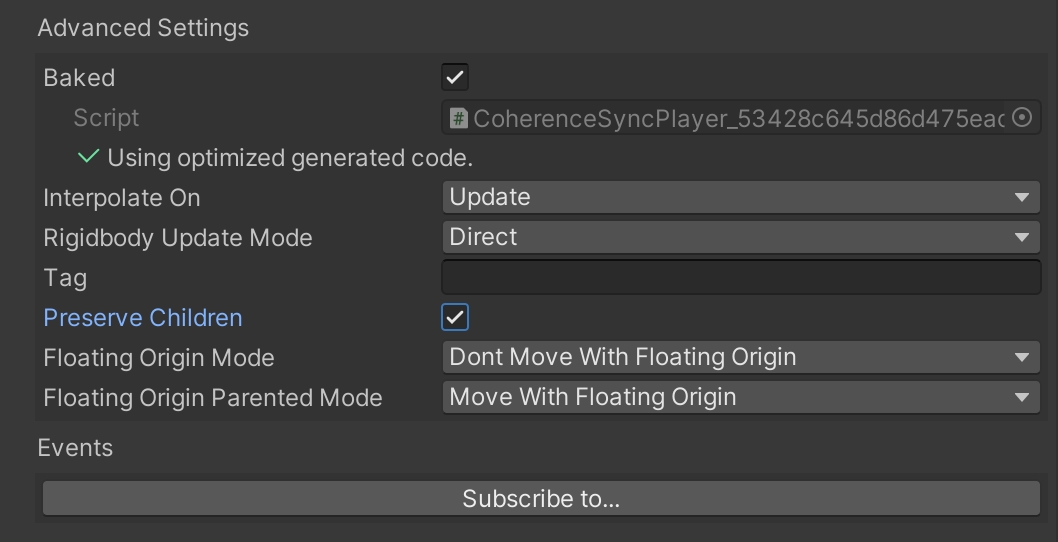

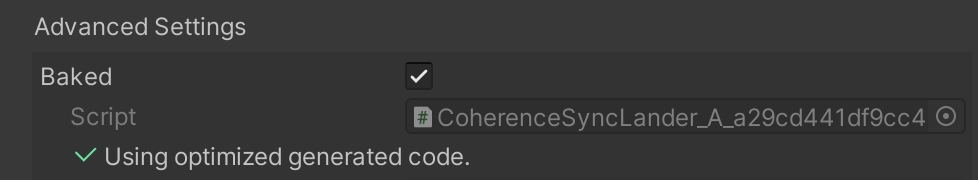

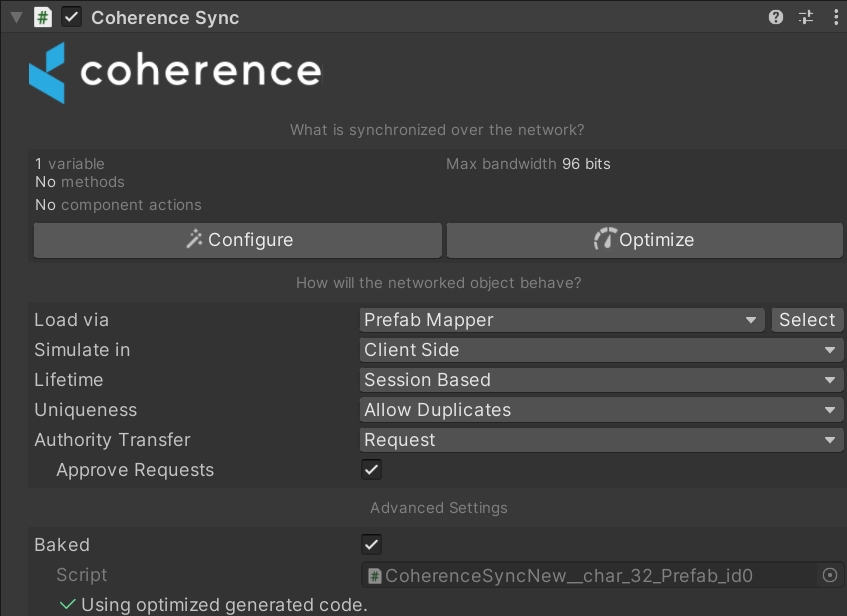

When CoherenceSync variables/components are sent over the network, by default, Reflection Mode is used to sync all the data at runtime. Whilst this is really useful for prototyping quickly and getting things working, it can be quite slow. A way to combat this is to bake the CoherenceSync component, creating a compatible schema and then generating code for it.

The schema is a file that defines which data types in your project are synced over the network. It is the source from which the coherence SDK generates C# struct types (and helper functions) that are used by the rest of your game. The coherence Replication Server also reads the schema file so that it knows about those types and communicates them to all of its Clients efficiently.

The schema must be baked in the coherence Settings window, before the check box to bake this Prefab can be clicked.

When the CoherenceSync component is baked, it generates a new file in the baked folder called CoherenceSync<AssetIdOfThePrefab>. This class will be instantiated at runtime, and will take care of networked serialization and deserialization, instead of the built-in reflection-based one.

CoherenceSync direct parent-child relationships at runtime

Objects with the CoherenceSync component can be connected at runtime to other objects with a CoherenceSync component to form a direct parent-child relationship.

For example, an item of cargo can be parented to a vehicle, so that they move together when the vehicle is in motion.

Keep in mind that on this page we deal with direct parenting of two CoherenceSync GameObjects. If it's not practical to parent a network entity directly to the root of another, see instead how to .

When an object has a parent in the network hierarchy, its transform (position and orientation) will update in local space, which means its transform is relative to the parent's transform.
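As a reminder of what "local space" means here, the following Python sketch converts a child's local position into world space. It is a deliberately simplified 2D illustration (position plus a single yaw angle, no scale) and not the math Unity or coherence actually runs. The function name and parameters are hypothetical.

```python
import math


def local_to_world(parent_pos, parent_yaw_deg, local_pos):
    """Convert a child's local 2D position into world space.

    The local offset is rotated by the parent's yaw (counter-clockwise,
    in degrees) and then translated by the parent's position.
    """
    yaw = math.radians(parent_yaw_deg)
    x, y = local_pos
    world_x = parent_pos[0] + x * math.cos(yaw) - y * math.sin(yaw)
    world_y = parent_pos[1] + x * math.sin(yaw) + y * math.cos(yaw)
    return (world_x, world_y)


# The cargo keeps the same local offset while the vehicle moves and turns.
print(local_to_world((3.0, 4.0), 0.0, (1.0, 2.0)))
```

Because only the local values are synced for a child, the child follows its parent on every Client without its own position having to change.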

A child object will only be visible in a LiveQuery if its parent is within the query's boundaries.

Parenting network entities directly doesn't require any extra work. Any parenting code (e.g. Unity's own transform.SetParent()) will work out of the box, without any need for additional action.

You can add and remove parent-child relationships at runtime – even from the Unity editor, by drag-and-drop.

If the child object is using LODs, it will base its distance calculations on the world position of its parent. For more info, see the documentation.

When the parent CoherenceSync is destroyed, by default its CoherenceSync children get destroyed together with it. This can be changed via the Preserve Children option on the parent, under Advanced Settings:

When Preserve Children is enabled, if the authority destroys or disables the parent entity, child entities get unparented instead of being destroyed together with the parent. Those children will now reside at the root of the Scene hierarchy.

Out of the box, coherence can use C# reflection to sync data at runtime. This is a great way to get started but is very costly performance-wise and has a number of limitations on what features can be used through this system.

For optimal runtime performance and a complete feature set, we need to create a schema and perform code generation specific to our project. coherence calls this mechanism Baking.

Learn more about schemas in the section.

Click on the coherence > Bake menu item.

This will go through all indexed CoherenceSync GameObjects (Resources folders and Prefab Mapper) in the project and generate a schema file based on the selected variables, commands and other settings. It will also take into account any that have been added.

For every Prefab with a CoherenceSync component attached, the baking process will generate a C# baked script specifically tuned for it.

When baking, the generated code will output to Assets/coherence/baked.

You can version the baked files or ignore them, your call.

If you work on a larger game or team, where you use continuous integration, chances are you are better off including the baked files in your VCS.

Since baked scripts access your code directly, renaming or removing variables or commands that are networked with coherence can cause compilation errors.

When you configure your Prefab to network variables, and then bake, coherence generates baked scripts that access your code directly, without using reflection. This means that whenever you change your code, you might break compilation by accident.

For example, if you have a Health.cs script which exposes a public float health; field, and you toggle health in the Configure window and bake, the generated baked script will access your component via its type, and your field via field name.

Like so:

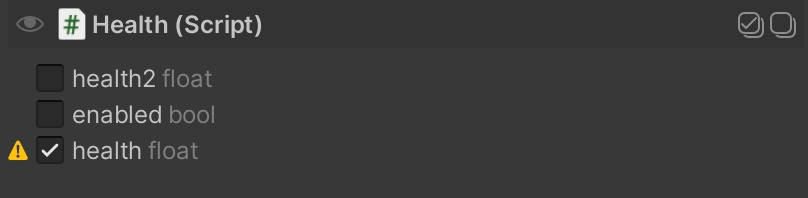

If you decide you want to change your component name (Health) or any of your bound fields (health), Unity script recompilation can fail. In this example, we will be removing health and adding health2 in its place.

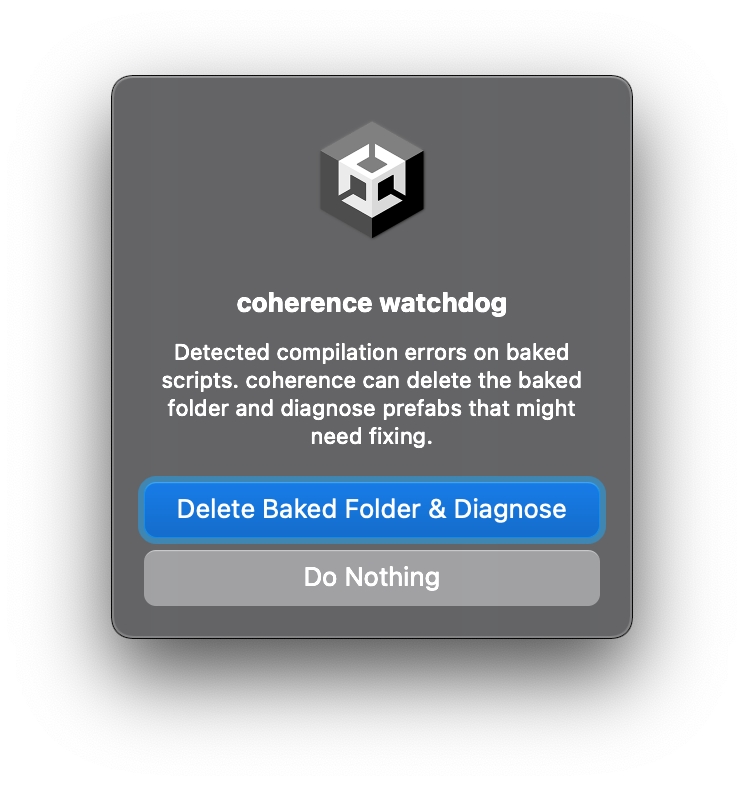

When baking via assets, the watchdog is able to catch compilation problems related to this, and offer you a solution right away.

It will suggest that you delete the baked folder, and then diagnose the state of your Prefabs. After a few seconds of script recompilation, you will be presented with the Diagnosis window.

In this window, you can easily spot variables in your Prefabs that can't be resolved properly. In our example, health is no longer valid since we've moved it elsewhere (or deleted it).
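Conceptually, the diagnosis compares the bindings stored on a Prefab with the members that still exist in code. The Python sketch below illustrates that comparison only. It is not how the Diagnosis window is implemented, and `find_unresolved_bindings` and its inputs are hypothetical.

```python
def find_unresolved_bindings(bindings, component_fields):
    """Return the bindings whose component or field no longer exists.

    `bindings` is a list of (component_name, field_name) pairs selected for
    syncing; `component_fields` maps component names to their current fields.
    """
    return [
        (component, field)
        for component, field in bindings
        if field not in component_fields.get(component, set())
    ]


# Health.health was replaced by Health.health2, as in the example above.
bindings = [("Health", "health"), ("Transform", "position")]
current_code = {"Health": {"health2"}, "Transform": {"position", "rotation"}}
print(find_unresolved_bindings(bindings, current_code))
```

Only the stale `health` binding is reported, which is the one that needs to be unbound and replaced.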

From here, you can access the Configure window, where you can spot the problem.

Now, we can manually rebind our data: unbind health and bind health2. Once we do, we can now safely bake again.

Remember to bake again after you fix your Prefabs.

Once the baked code has been generated, Prefabs will automatically make use of it. If you want to switch a particular Prefab to reflection code, you can do so in the Inspector of its CoherenceSync, by unchecking the Baked checkbox:

Please note that if the nested Prefabs are more than one level under the root object, you still need to add a CoherenceNode component to the child ones (in the example above, Cargo), to enable .

When dealing with synced Prefabs that are hand-placed in the scene before connecting, such as level design elements like interactive doors, you need to ensure that they are seen as "unique". This is also covered in the , but it's worth talking about it in the context of nested synced Prefabs.

Like for runtime-instantiated Prefabs, keep in mind that if the Lander is nested in the hierarchy, it will also need a CoherenceNode component.

If you plan to also instantiate this Prefab at runtime, you can add a PrefabSyncGroup to the root as described in the . This makes the Prefab work when instantiated at runtime, while the uniqueness takes care of copies in the scene.

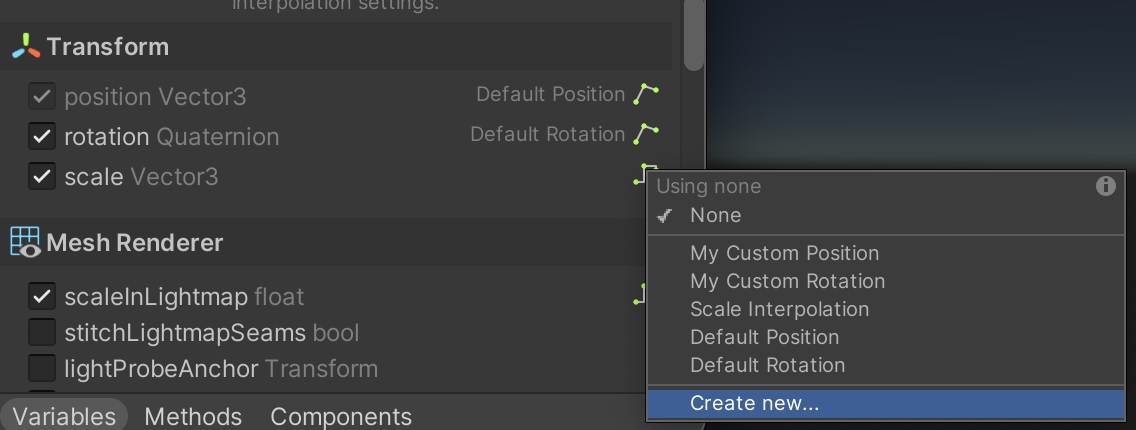

To start syncing a property, just use the checkbox. Optionally, choose how it is on the right.

For more info, refer to the page about .

The sections above describe UI-based workflows to sync variables and commands. We also offer a code-based workflow, which leverages directly from within code.

You can also create your own, .

Persistence - Entities with this option will persist as long as the Replication Server is running. For more details, see .

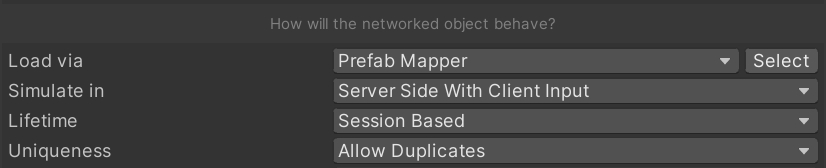

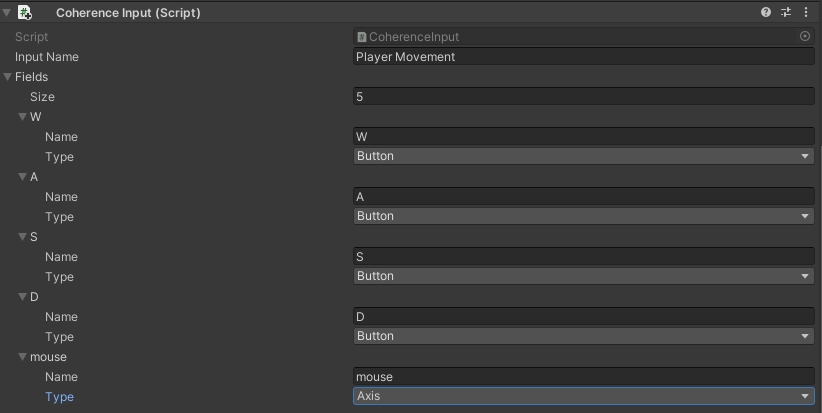

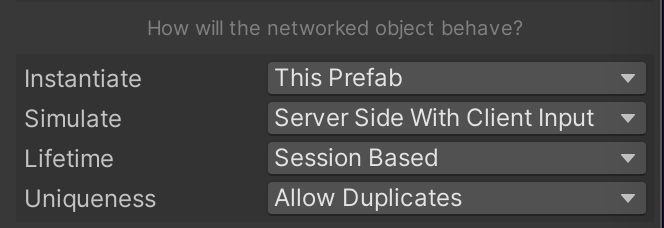

Server Side with Client Input - This automatically adds a CoherenceInput component. Ownership is split: a Simulator holds State Authority, while a Client has Input Authority. See for more info.

Once revealed, you can use them just like regular :

You might also want to check out the CoherenceSync instance lifecycle section at the bottom of the article.

You can find more information on the page about .

For an example of direct child CoherenceSync components parenting and unparenting at runtime, check out the First Steps sample project, specifically .

Baked scripts will be located in Assets/coherence/baked.

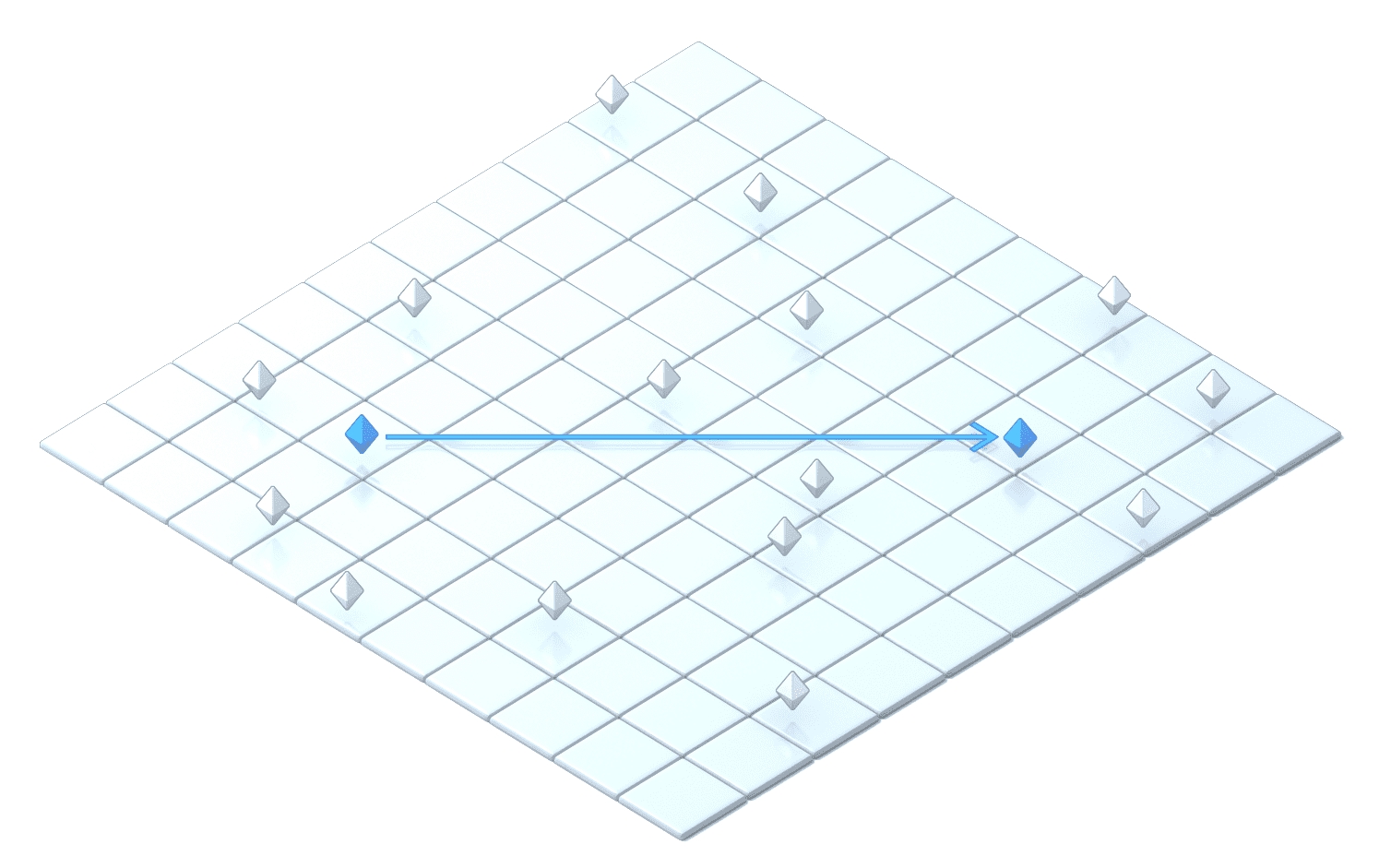

The way you get information about the World is through LiveQueries. We set criteria for what part of the World we are interested in at each given moment. That way, the Replicator won’t send information about everything that is going on in the Game World everywhere, at all times.

Instead, we will just get information about what’s within a certain area, kind of like moving a torch to look around in a dark cave.
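The torch analogy can be expressed as a simple filter. This Python sketch assumes a box-shaped query described by a center and a half-size (`extent`). Both the shape and the parameter names are assumptions for illustration, not the SDK's API.

```python
def entities_in_query(entities, center, extent):
    """Return the names of entities inside an axis-aligned cube around `center`.

    `extent` is the half-size of the cube along each axis; `entities` maps a
    name to an (x, y, z) position.
    """
    return sorted(
        name
        for name, pos in entities.items()
        if all(abs(p - c) <= extent for p, c in zip(pos, center))
    )


world = {
    "torch_bearer": (0.0, 0.0, 0.0),
    "nearby_bat": (3.0, 1.0, -2.0),
    "distant_dragon": (250.0, 0.0, 40.0),
}
print(entities_in_query(world, center=(0.0, 0.0, 0.0), extent=10.0))
```

Moving `center` as the player moves changes which Entities the Client receives updates for, while everything outside the box is simply not sent.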

For a guide on how to use LiveQuery, see Areas of Interest.

More complex areas of interest types are coming in future versions of coherence.

Aside from configuring your CoherenceSync bindings from within the Configure window, it's possible to use the [Sync] and [Command] C# attributes directly on your scripts. Your prefabs will get updated to require such bindings.

Mark public fields and properties to be synchronized over the network.

It's possible to migrate the variable automatically, if you decide to change its definition:

Mark public methods to be invoked over the network. Method return type must be void.

It's possible to migrate the command automatically, if you decide to change the method signature:

Note that marking a command attribute only marks it as programmatically usable. It does not mean it will be automatically called over the network when executed.

You still need to follow the guidelines in the Messaging with Commands article to make it work.

Even though coherence provides Component Actions out of the box for various components, you can implement your own Component Actions in order to give designers on the team full authoring power on network entities, directly from within the Configure window UI.

Creating a new one is simply done by extending the ComponentAction abstract class:

Your custom Component Action must implement the following methods:

OnAuthority - This method will be called when the object is spawned and you have authority over it.

OnRemote - This method will be called when a remote object is spawned and you do not have authority over it.

It will also require the ComponentAction class attribute, specifying the type of Component that you want the Action to work with, and the display name.

For example, here is the implementation of the Component Action that we use to disable Components on remote objects:

Extending what can be synced from the Configure window

This is an advanced topic that aims to bring access to coherence's internals to the end user.

The Configure window lists all variables and methods that can be synced for the selected Prefab. Each selected element in the list is stored in the Prefab as a Binding with an associated Descriptor, which holds information about how to access that data.

By default, coherence uses reflection to gather public fields, properties and methods from each of the Prefab's components. You can specify exactly what to list in the Configure window for a given component by implementing a custom DescriptorProvider. This allows you to sync custom component data over the network.

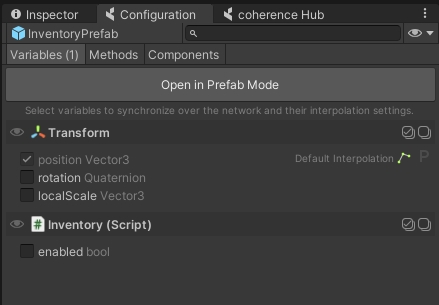

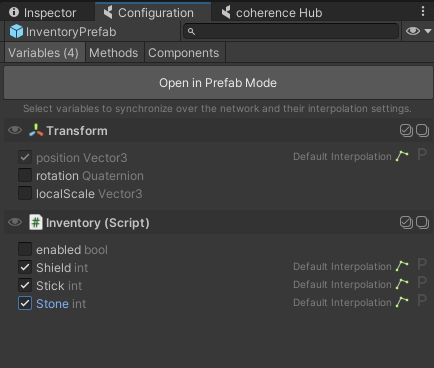

Take this player inventory for example:

Since the inventory items are not immediately accessible as fields or properties, they are not listed in the Configure window. In order to expose the inventory items so they can be synced across the network, we need to implement a custom DescriptorProvider.

DescriptorProvider

The main job of the DescriptorProvider is to provide the list of Descriptors that you want to show up in the Configure window. You can instantiate new Descriptors using this constructor:

name: identifying name for this Descriptor.

ownerType: type of the MonoBehaviour that this Descriptor is for.

bindingType: type of the ValueBinding class that will be instantiated and serialized in CoherenceSync, when selecting this Descriptor in the Configure window.

required: if true, every network Prefab that uses a MonoBehaviour of ownerType will always have this Binding active.

If you need to serialize additional data with your Descriptor, you can inherit from the Descriptor class or assign a Serializable object to Descriptor.CustomData.

Here is an example InventoryDescriptorProvider that returns a Descriptor for each of the inventory items:

To specify how to read and write data to the Inventory component, we also need a custom binding implementation.

Binding

A Descriptor must specify through the bindingType which type of ValueBinding it is going to instantiate when synced in a CoherenceSync. In our example, we need an InventoryBinding to specify how to set and get the values from the Inventory. To sync the durability property of the inventory item, we should extend the IntBinding class which provides functionality for syncing int values.

We are now ready to sync the inventory items on the Prefabs.

Creating complex hierarchies of CoherenceSyncs at runtime

While the basic case of direct parent-child relationships between CoherenceSync entities is handled automatically by coherence, more complex hierarchies (with multiple levels) need a specific component.

An example of such a hierarchy would be a synced Player Prefab with a hierarchical bone structure, where you want to place an item (e.g. a flashlight) in the hand:

Player > Shoulder > Arm > Hand

To prepare the child Prefab that you want to parent at runtime, add the CoherenceNode component to it (in addition to its CoherenceSync). In the example above, that would be the flashlight you want your player to be able to pick up. No additional changes are required.

This setup allows you to place instances of the flashlight Prefab anywhere in the hierarchy of the Player (you could even move it from one hand to the other, and it would work).

You don't need to input any value in the fields of the CoherenceNode. They are used at runtime, by coherence, automatically.

To recap, for deep-nesting network entities to work, you need two things:

The parent: a Prefab with CoherenceSync that has some hierarchy of child transforms (these child transforms are not networked entities themselves).

The child: another connected Prefab with CoherenceSync and CoherenceNode.

One important constraint for using CoherenceNode is that the hierarchies have to be identical on all Clients.

Example: if on Client A an object is parented to Player > Shoulder > Arm > Hand, the hierarchy on Client B needs to be exactly: Player > Shoulder > Arm > Hand.

Removing or moving an intermediate child (such as Shoulder or Arm) would lead to undesirable results, and desynchronisation.

Position and rotation

Similarly to the above, intermediate children objects need to have the same position and rotation on all Clients. If not, that would lead to desync because the parented entity doesn't track the position of its parent object(s).

If you plan to move these intermediate children, then we suggest syncing the position and/or rotation of those objects as part of the containing Prefab.

Following the previous example, if an object is parented to Player > Shoulder > Arm > Hand, you might want to mark the position and rotation of Shoulder, Arm and Hand as synced, as part of the prefab Player.

This way if any of them moves, the movement will be replicated correctly on all clients, and the object parented to Hand will also look correct.

Keep in mind that there is no penalty for syncing positions of objects that never or rarely move, because the position is not synced every frame if it hasn't changed.

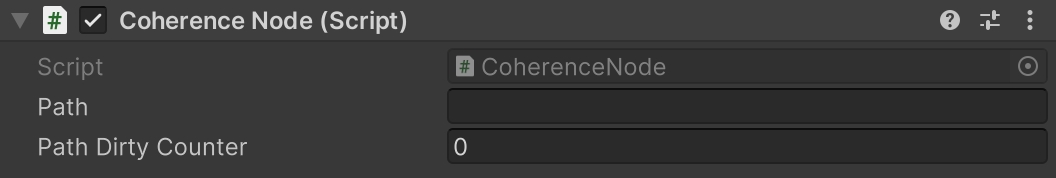

This section is only useful to you if you want to understand deeply how CoherenceNode works under the hood.

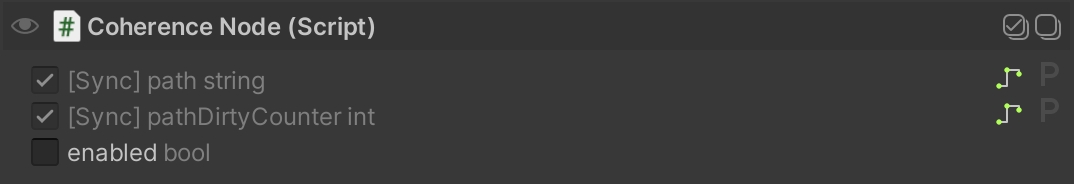

CoherenceNode works using two public fields which are automatically set to sync using the [Sync] attribute.

The path variable describes where in the parent's hierarchy the child object should be located. It is a string consisting of comma-separated indexes. Every one of these indexes designates a specific child index in the hierarchy. The child object which has the CoherenceNode component will be placed in the resulting place in the hierarchy.

The pathDirtyCounter variable is a helper variable used to keep track of the applied hierarchy changes. In case the object's position in the parent's hierarchy changes, this variable will be used to help settle and properly sync those changes.
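To illustrate how a string of comma-separated child indexes can identify a place in a hierarchy, here is a small Python sketch. It is a stand-in model, not the CoherenceNode implementation: the `Node` class and the `path_to` and `resolve` functions are hypothetical, and the exact path format coherence uses may differ in detail.

```python
class Node:
    """A bare-bones stand-in for a transform with ordered children."""

    def __init__(self, name, children=None):
        self.name = name
        self.children = children or []


def path_to(root, target):
    """Return the comma-separated child indexes leading from root to target."""
    def search(node, trail):
        if node is target:
            return trail
        for index, child in enumerate(node.children):
            found = search(child, trail + [index])
            if found is not None:
                return found
        return None

    trail = search(root, [])
    return None if trail is None else ",".join(str(i) for i in trail)


def resolve(root, path):
    """Follow a comma-separated index path from root; None if it is invalid."""
    node = root
    for part in (path.split(",") if path else []):
        index = int(part)
        if not 0 <= index < len(node.children):
            return None  # hierarchy differs from the one the path was built on
        node = node.children[index]
    return node


hand = Node("Hand")
player = Node("Player", [Node("Head"), Node("Shoulder", [Node("Arm", [hand])])])
path = path_to(player, hand)
print(path, resolve(player, path).name)
```

Because the path is made of indexes, it only resolves to the same object when every Client has the same hierarchy, which is why removing or reordering intermediate children leads to desynchronisation.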

For an example of a CoherenceSync parenting and unparenting at runtime in a deep hierarchy, check out the First Steps sample project, lesson 5.

Before upgrading, back up your project to avoid any data loss.

APIs marked as deprecated in 1.0 and earlier are removed in 1.2. Make sure your project is not using them before upgrading.

Sample UI

coherence / Explore Samples / Connection Dialog(s)

CoherenceSyncConfigManager

Source Generator baking strategy

Falls back to Assets baking strategy

Some components like the CoherenceBridgeSender don't exist anymore. If your project was using any of these legacy components, you might see warnings on the Console window while in Play Mode. You'll want to find the affected GameObjects and remove the missing component references. This is especially important for Prefabs, since missing components affect the ability to save them.

There has been a major refactor on how we deal with the registry.

First off, we're deprecating the registry holding configs as sub-assets. This has been the default up to 1.1, but on 1.2, it has been changed to store the configs in the Assets/coherence/CoherenceSyncConfigs folder.

For 1.2, we have avoided automatic migration of existing subassets. You can trigger this operation manually from the registry inspector (Assets/coherence/CoherenceSyncRegistry). We don't automate this operation, since the extraction changes the asset GUIDs of the configs, which could lead to missing references. This is especially true if your project is referencing the configs directly, instead of going through the registry. Keep this in mind when you decide to extract existing subassets.

It is important that you perform the aforementioned migration as soon as possible, since the SDK will be deprecating reading from subassets in upcoming releases.

The APIs provided have changed too. Check CoherenceSyncConfigRegistry, CoherenceSyncConfigUtils and CoherenceSyncUtils. Exposed functionality has been documented.

If your project was using any registry-related APIs, you might see compilation errors on upgrade, since the APIs have changed considerably. Check the classes mentioned above.

When the package imports, if there are any compilation errors, Unity won't do a domain reload. This means the previous package is still in memory and executing, but the contents of the package (might) have changed drastically. This can yield numerous errors and warnings on the Console, that will fade away once the compilation (followed by a domain reload) is completed.

If, after compilation, you are still experiencing issues using the SDK (errors hitting the Bake button, CoherenceSync throwing exceptions or warnings that weren't there before the upgrade, etc), please reach out to us on our Discord or the Community forum.

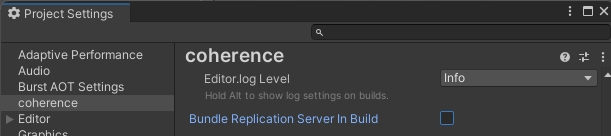

In this update, bundling the Replication Server has been revamped to work with Streaming Assets. On the Settings window, instead of different toggles per platform, there's now one unified toggle:

For the full list of supported binding types, see .

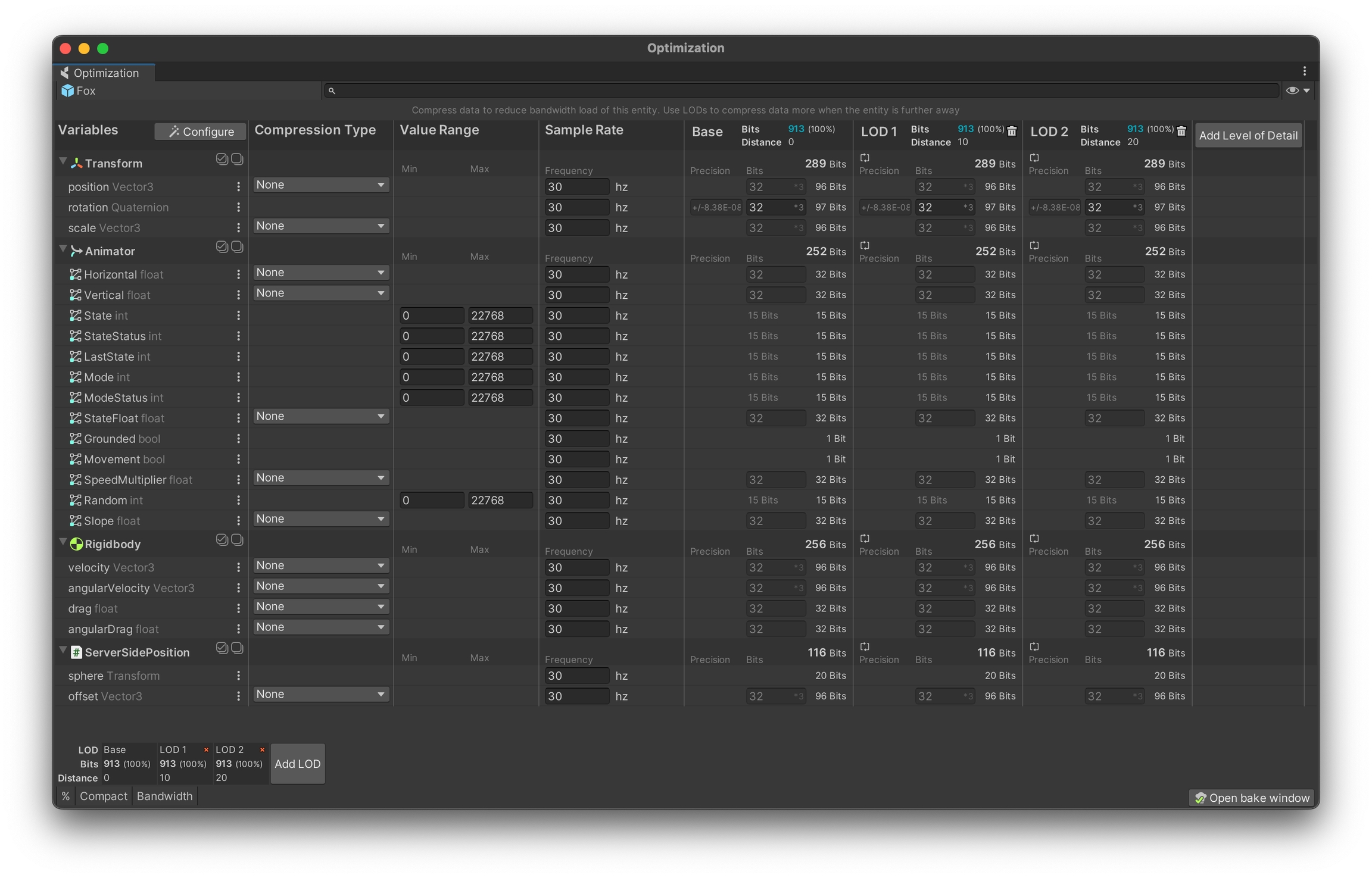

No matter how fast the internet becomes, conserving bandwidth will always be important. Some Game Clients might be on poor quality mobile networks with low upload and download speeds, or have high ping to the Replication Server and/or other Clients, etc.

Additionally, sending more data than is required consumes more memory and unnecessarily burdens the CPU and potentially GPU, which could add to performance issues, and even to quicker battery drainage.

In order to optimize the data we are sending over the network, we can employ various techniques built into the core of coherence.

Delta-compression (automatic). When possible, only send differences in data, not the entire state every frame.

Compression and quantization (automatic and configurable). Various data types can be compressed to consume less bandwidth than they naturally would.

Simulation frequency (configurable). Most Entities do not need to be simulated at 60+ frames per second.

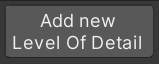

Levels of detail (configurable). Entities need to consume less and less bandwidth the farther away they move from the observer.

Area of interest. Only replicate what we can see.
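Two of these techniques, quantization and delta-compression, are easy to illustrate in isolation. The Python sketch below shows the general idea only. It does not reproduce coherence's actual wire format, and all function names and parameters are hypothetical.

```python
def quantize(value, minimum, maximum, bits):
    """Map a float in [minimum, maximum] to an integer that fits in `bits` bits."""
    steps = (1 << bits) - 1
    clamped = min(max(value, minimum), maximum)
    return round((clamped - minimum) / (maximum - minimum) * steps)


def dequantize(code, minimum, maximum, bits):
    """Recover the approximate float from its quantized integer code."""
    steps = (1 << bits) - 1
    return minimum + code / steps * (maximum - minimum)


def delta(previous, current):
    """Return only the fields whose values changed since the last update."""
    return {key: value for key, value in current.items() if previous.get(key) != value}


# An 8-bit code replaces a 32-bit float, at the cost of some precision.
code = quantize(12.3, -100.0, 100.0, bits=8)
print(code, dequantize(code, -100.0, 100.0, bits=8))

# Only the changed field needs to be sent.
print(delta({"x": 1.0, "health": 10}, {"x": 2.0, "health": 10}))
```

The trade-off in quantization is between range, precision and bit count: a narrower range or more bits gives a smaller error per value.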

Networked entities can be simulated either on a Game Client ("Client authority") or a Simulator ("Server authority"). Authority defines which Client or Simulator is allowed to make changes to the synced properties of an Entity, and in general defines who "runs the gameplay code" for that Entity. An Entity is any networked GameObject.

To learn more about authority, check out this short video:

When an Entity is created, the creator is assigned authority over the Entity. Authority can then be transferred at a later point between Clients, or even between Clients and Simulators, or between Simulators. Regardless, only one Client or Simulator can be the authority over the Entity at any given time.

Client authority is the easiest to set up initially, but it has some drawbacks:

Higher latency. Because both Clients have a non-zero ping to the Replication Server, the minimum latency for data replication and commands is the combined ping (Client 1 to Replication Server and Replication Server to Client 2).

Higher exposure to cheating. Because we trust Game Clients to simulate their own Entities, there is a risk that one such Client is tampered with and sends out unrealistic data.

In many cases, especially when not working on a competitive PvP game, these drawbacks are not really issues, and Client authority is a perfectly fine choice for the game developer.

Client authority does have a few advantages:

Easier to set up. No Client vs. Server logic separation in the code, no building and uploading of Simulation Servers, everything just works out of the box.

Cheaper. Depending on how optimized the Simulator code is, running a Simulator in the cloud will in most cases incur more costs than just running a Replication Server (which is comparatively very lean).

Having one or several Simulators taking care of the important World simulation tasks (like AI, player character state, score, health, etc.) is always a good idea for competitive PvP games. In this scenario, the Simulator has authority over key game elements, like a "game manager", a score-keeping object, and so on.

Running a Simulator in the cloud next to the Replication Server (with the ping between them being negligible) will also result in lower latency.

In addition to key gameplay objects, the player character can also be simulated on the Server, with the Client locally predicting its state based on inputs. You can read more about how to achieve that in the section about CoherenceInput and Server-authoritative setup.

Peer-to-peer support (without a Replication Server) is planned for a future release. Please see the Peer-to-peer page for updates.

Even if an entity is not currently being simulated locally (the client does not have authority), we can still affect its state by sending a network command or even requesting a transfer of authority.

Authority over an Entity is transferable, so it is possible to move the authority between different Clients or even to a Simulator. This is useful for things such as balancing the simulation load, or for exchanging items. It is possible for an Entity to have no Client or Simulator as the authority. Such Entities are considered orphaned and are not simulated.

In the design phase, CoherenceSync objects can be configured to handle authority transfer in different ways:

Request. Authority transfer may be requested, but it may be rejected by the current authority.

Steal. Authority will always be given to the requesting party on a FCFS ("first come, first served") basis.

Disabled. Authority cannot be transferred.
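
The three modes above can be summarized as a small decision function. This is a plain C# model for illustration only, not SDK code: `TransferMode`, `AuthorityTransferSketch` and `Resolve` are hypothetical names, and the approval delegate stands in for whatever logic the current authority uses to accept or reject a request.

```csharp
using System;

// Hypothetical enum mirroring the three modes described in the text.
public enum TransferMode { Request, Steal, Disabled }

public static class AuthorityTransferSketch
{
    // Returns true if authority would move to the requesting party.
    public static bool Resolve(TransferMode mode, Func<bool> currentAuthorityApproves)
    {
        switch (mode)
        {
            case TransferMode.Steal:
                // Always granted; the current authority is not consulted.
                return true;
            case TransferMode.Request:
                // Granted only if the current authority approves.
                return currentAuthorityApproves();
            default:
                // Disabled: authority never changes hands.
                return false;
        }
    }
}
```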

Note that you need to set up Auto-adopt Orphan if you want orphans to be adopted automatically when an Entity's authority disconnects; otherwise, an orphaned Entity is not simulated. Auto-adopt is only allowed for persistent Entities.

When using Request, an optional callback OnAuthorityRequested can be set on the CoherenceSync behaviour. If the callback is set, then the results of the callback will override the Approve Requests setting in the behaviour.

The request can be approved or rejected in the callback.

Requesting authority is very straightforward.

RequestAuthority returns false if the request was not sent. This can be because of the following reasons:

The sync is not ready yet.

The entity is not allowed to be transferred because authorityTransferType is set to NonTransferable.

There is already a request underway.

The entity is orphaned, in which case you must call Adopt instead to request authority.

The request itself might fail depending on the response of the current authority.

As the transfer is asynchronous, we have to subscribe to one or more Unity Events in CoherenceSync to learn the result.

These events are also exposed in the Custom Events section of the CoherenceSync inspector.

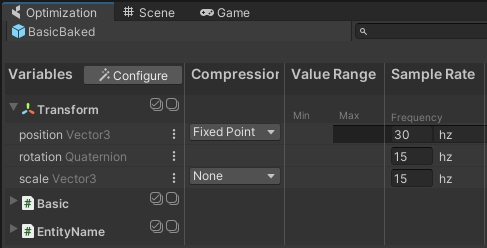

Without a special configuration, Entity data is captured at the highest possible frequency and sent to the Replication Server. This often generates more data than is needed to efficiently replicate the Entity's state across the network.

On a Simulator, we can limit the framerate globally using Unity's built-in static variable targetFrameRate.
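
A minimal sketch of setting that variable from a Unity script follows. The component name `SimulatorFrameRateLimit` and the value of 30 are assumptions chosen for illustration. `Application.targetFrameRate` and `QualitySettings.vSyncCount` are standard Unity APIs.

```csharp
using UnityEngine;

// Hypothetical component; attach it to an object in the first scene the Simulator loads.
public class SimulatorFrameRateLimit : MonoBehaviour
{
    // Assumed value; pick whatever your simulation actually needs.
    [SerializeField] private int targetFps = 30;

    private void Awake()
    {
        // Unity ignores targetFrameRate while vSync is enabled, so disable vSync first.
        QualitySettings.vSyncCount = 0;
        Application.targetFrameRate = targetFps;
    }
}
```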

coherence will automatically limit the target framerate of uploaded Simulators to 30 frames per second. We plan to make it possible to lift this restriction in the future. Check back for updates in the next couple of releases.

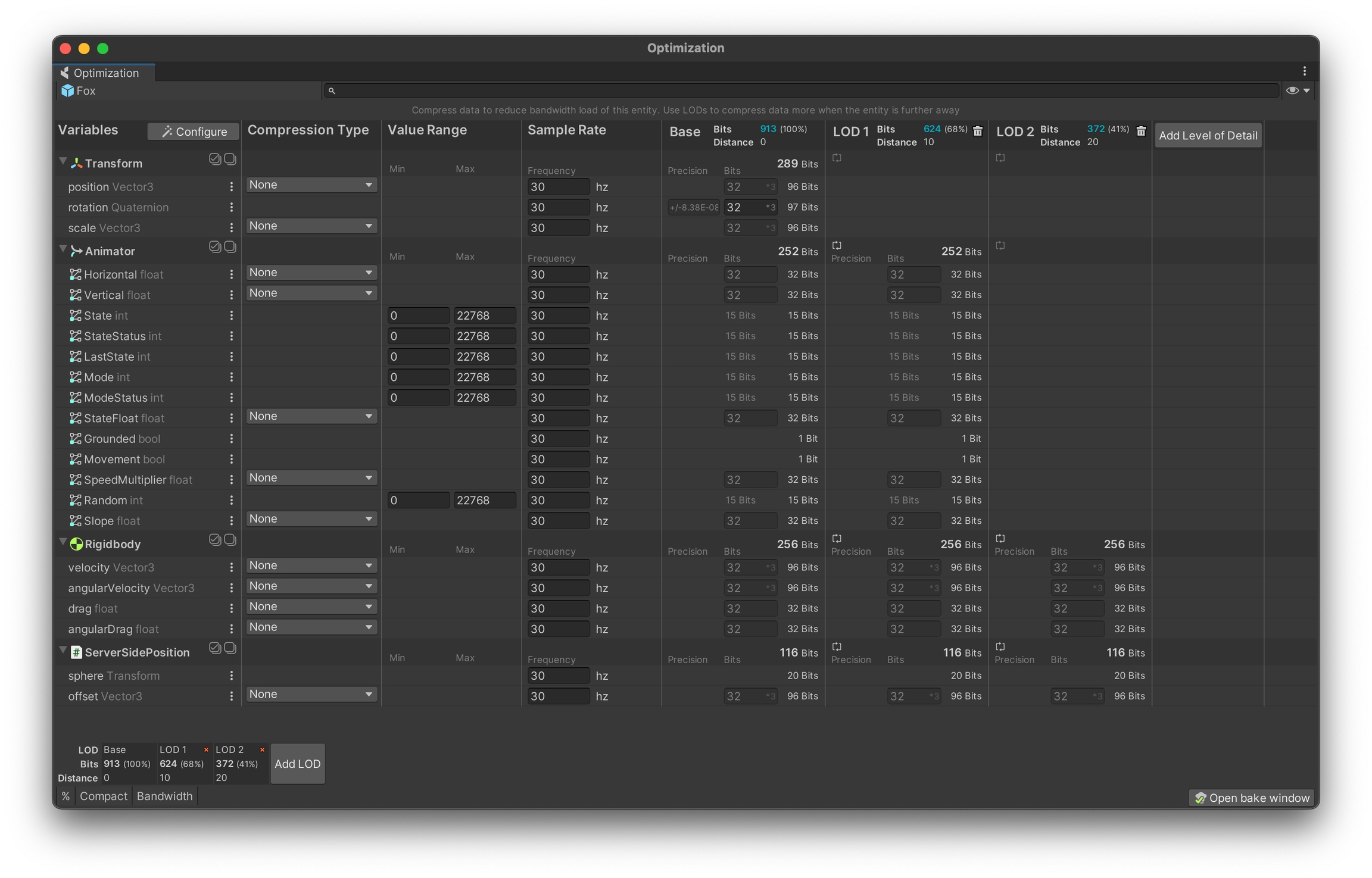

Replication frequency can be configured for each binding individually in the Prefab Optimize window. The Sample Rate controls how many times per second values are sampled and synced over the network.

Since the default packet send frequency of the Replication Server is 20Hz, sample rates above that value won't have any benefits unless you increase the Replication Server send frequency, too. See here how to .

High sample rates increase replication accuracy and reduce latency, but consume more bandwidth. The upper limit at which samples can be quantized is 60Hz, so sample rates beyond that are generally not recommended. It is not possible to change the sampling frequency at runtime.
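
The relationship between sample rate and send frequency can be sketched with a one-line calculation. This is a simplification for illustration, assuming that fresh values reach the network no faster than the slower of the two rates. `SampleRateSketch` and `EffectiveUpdateRate` are hypothetical names, not SDK members.

```csharp
using System;

public static class SampleRateSketch
{
    // Default Replication Server packet send frequency stated in the text.
    public const float DefaultSendRateHz = 20f;

    // The slower of the two rates limits how often new values are delivered.
    public static float EffectiveUpdateRate(float sampleRateHz, float sendRateHz = DefaultSendRateHz)
    {
        return Math.Min(sampleRateHz, sendRateHz);
    }
}
```

Under this model, a binding sampled at 60Hz with the default send frequency still delivers updates at 20Hz, while a binding sampled at 10Hz delivers them at 10Hz.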

Values that don't change over time do not consume any bandwidth. Only bindings with updated values will be synced over the network.

Support for requests based on is coming soon.

Also, because of their asynchronous nature, clients can receive commands for entities that they have already transferred. Such commands are dropped.

Scenes or levels are a common feature of Unity games. They can be loaded from Unity scenes, custom level formats, or even be procedurally generated. In networked games, players should not be able to see entities that are in other scenes. To address this, coherence's scene feature gives you a simple way of controlling what scene you're acting in.

Each coherence scene is represented by an integer index. You can map this index to your scenes or levels in any way you find appropriate. Projects that don't use scenes will implicitly put all their entities into scene 0.
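
One possible mapping, sketched in plain C#, is a fixed table shared by every build so that all Clients agree on the index of each level. `LevelSceneMap`, `ToSceneIndex` and the level names are hypothetical and only serve as an example.

```csharp
using System;

// Hypothetical mapping; the level names are placeholders.
public static class LevelSceneMap
{
    // The position in this array is the scene index. The order must be
    // identical in every build so that all Clients resolve the same index.
    private static readonly string[] Levels = { "Lobby", "Forest", "Dungeon" };

    public static uint ToSceneIndex(string levelName)
    {
        int index = Array.IndexOf(Levels, levelName);
        if (index < 0)
        {
            throw new ArgumentException("Unknown level: " + levelName, nameof(levelName));
        }
        return (uint)index;
    }
}
```

The resulting value is the kind of unsigned integer that scene-related calls such as `SetClientScene(uint sceneIndex)` expect.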

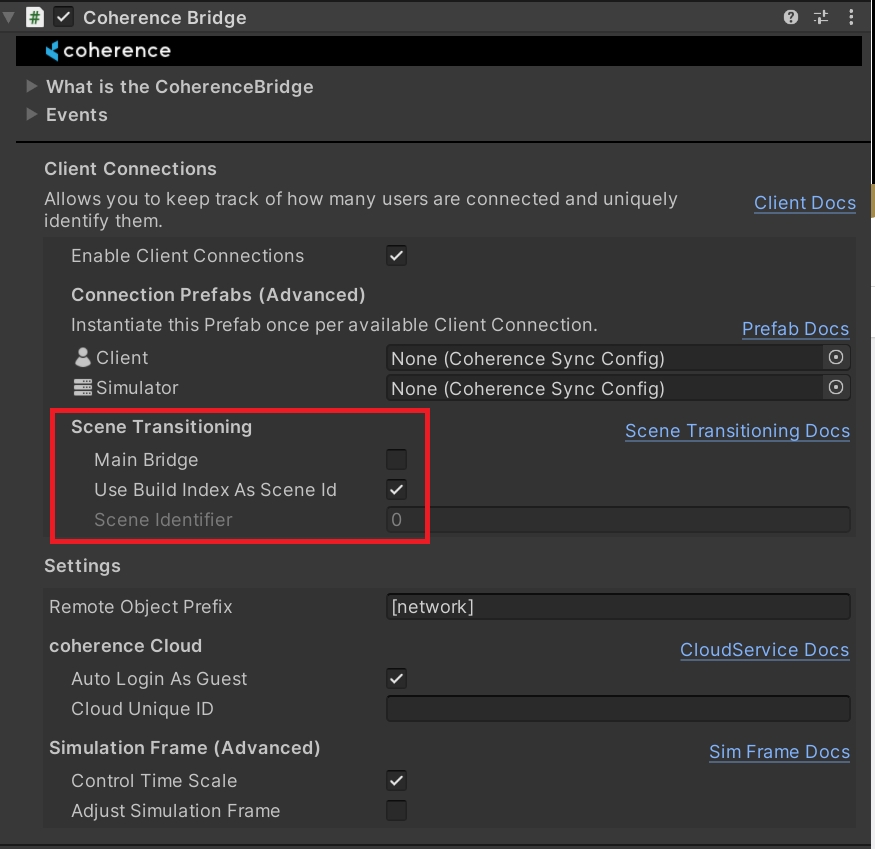

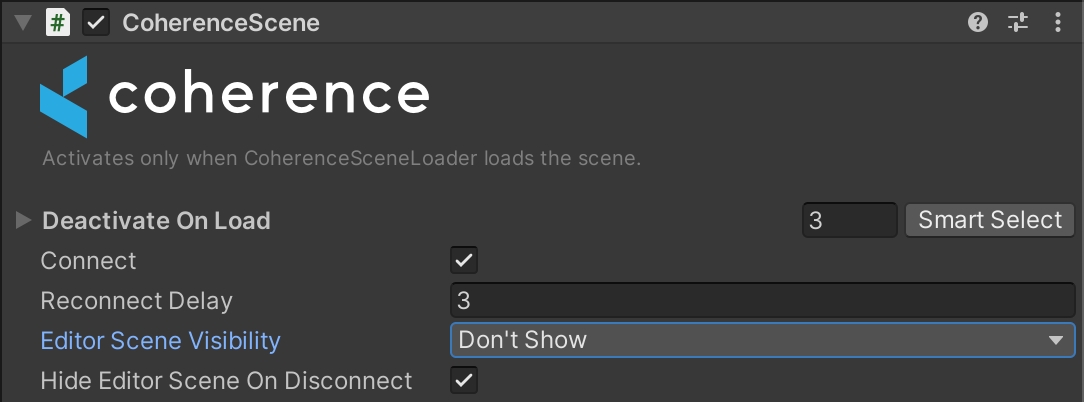

Since the connection to the Replication Server is done through the CoherenceBridge component, switching Scenes will destroy the current CoherenceBridge that holds the connection to the Replication Server.

In order to keep a CoherenceBridge with its connection alive between Scene changes, you will have to set it as Main Bridge in the Component inspector:

These are the options related to Scene transitions:

Main Bridge: This CoherenceBridge instance will be saved as DontDestroyOnLoad and its connection to the Replication Server will be kept alive between Scene changes. All other CoherenceBridge components that are instantiated from this point forward will update the target Scene of the Main Bridge, and destroy themselves afterwards.

Use Build Index as Scene Id: Every Scene needs a unique identifier over the network. This option will automate the creation of this ID by using the Scene Build Index (from the Build Settings window).

Scene Identifier: If the previous option is unchecked, then you will be able to manually set a Scene Identifier of your own (restricted to unsigned integers).

Using these options will automate Scene transitions.

The only requirement is having a single CoherenceBridge set as Main (the first one that your game will load). The rest of the Scenes you want to network should also have a CoherenceBridge component, but not set as main.

These options require no extra code on your part.

A client connection and all the entities it has authority over are always kept in the same coherence scene. Clients cannot have authority over entities in other scenes. This implies two things:

When a client changes scene, it will bring along any entities it has authority over.

If an entity changes ownership via authority transfer, it will be moved to the new owner's scene.

An entity that does not have an owner is an orphan. Orphaned entities will stay in the scene where their previous owner left them.

Note that Unity will destroy all game objects not marked as DontDestroyOnLoad whenever a new Unity scene is loaded (non-additively). If the client has authority over any of those entities at that point, coherence will replicate that destruction to all other clients. If that is undesirable and you need to leave entities behind, make sure that authority has been lost or transferred before loading the new Unity scene. You can of course also mark them as DontDestroyOnLoad, which will bring them along to the new scene.

Since this process involves a bit of logic that has to be executed over several frames, coherence provides a LoadScene helper method (co-routine) on CoherenceSceneManager. Here's an example of how to use it:

It is not possible to move entities to other scenes without the client connection also moving there. Additionally, you can't currently query for entities in other scenes.

Both of these limitations are planned to be addressed in future versions of coherence.

If your project isn't a good fit for the automatic scene transitioning support described above, it is possible to use a more manual approach. There are a few important things to take care of in such a setup:

If you ever load another Unity scene, the CoherenceBridge that connects to the server needs to be kept alive, or else the client will be disconnected. A straightforward way of doing this is to call Unity's DontDestroyOnLoad method on it. This creates two problems when replicating entities from other Clients:

The bridge instantiates remote entities into the scene where it is currently located. To override this behaviour, set the InstantiationScene property on your CoherenceBridge to the desired scene.

Any new CoherenceSync instances will look for the bridge in the scene in which they are located. If the bridge is moved to the DontDestroyOnLoad scene, this lookup will fail. You can use the static CoherenceSync.BridgeResolve event to solve this problem (see the code sample in the next section). Alternatively, if you have a reference to a Scene, you can register the appropriate bridge for entities in that scene with CoherenceBridgeStore.RegisterBridge before it is loaded.

Additionally, coherence queries (e.g. CoherenceLiveQuery) also look for their bridge in their own scene, so you might have to set their bridgeResolve event too.

If you load levels via your own level format, or by loading Unity scenes additively, it is quite possible that you can skip some of the steps above.

The only thing strictly necessary for coherence scene support is to call

CoherenceBridge.SceneManager.SetClientScene(uint sceneIndex);

so that the Replication Server knows in which scene each Client is located.

Here's a complete code sample of how to use all the above things together:

coherence only replicates animation parameters, not state. Latency can create scenarios where different Clients reproduce different animations. Take this into account when working with Animator Controllers that require precise timings.

Unity Animator's parameters are bindable out of the box, with the exception of triggers.

While coherence doesn't officially support working with multiple AnimatorControllers, there's a way to work around it. As long as the parameters you want to network are shared among the AnimatorControllers you want to use, they will get networked. Parameters need to have the same type and name. Using the example above, any AnimatorController featuring a Boolean Walk parameter is compatible, and can be switched.
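
The compatibility rule described above (same name and same type) can be expressed as a small check. This is a plain C# model that does not touch Unity's Animator API. `ParamType`, `AnimatorParamSketch` and `IsNetworkedParamShared` are hypothetical names.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for an Animator parameter type.
public enum ParamType { Bool, Int, Float }

public static class AnimatorParamSketch
{
    // A controller is compatible with a networked parameter if it declares
    // a parameter with exactly the same name and the same type.
    public static bool IsNetworkedParamShared(
        (string Name, ParamType Type) networked,
        IEnumerable<(string Name, ParamType Type)> controllerParams)
    {
        return controllerParams.Any(p => p.Name == networked.Name && p.Type == networked.Type);
    }
}
```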

If this approach to keeping the connection alive is not a good fit for your game, see in the second part of this document.

In the CoherenceBridge inspector you will find all the options related to handling Scene transitions. First thing to know is that must be enabled for this feature to work.

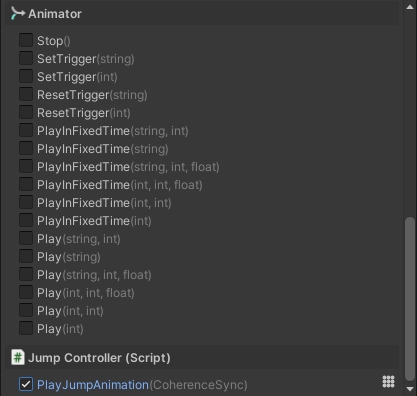

Triggers can be invoked over the network using commands. Here's an example where we inform networked Clients that we have played a jump animation:

Now, bind the PlayJumpAnimator method as a command.

When we connect to a Game World with a Game Client, the traditional approach is that all Entities originating on our Client are session-based. This means that when the Client disconnects, they will disappear from the network World for all players.

A persistent object, however, will remain on the Replication Server even when the Client or Simulator that created or last simulated it is gone.

This allows us to create a living world where player actions leave lasting effects.

In a virtual world, examples of persistent objects are:

A door anyone can open, close or lock

User-generated or user-configured objects left in the world to be found by others

Game progress objects (e.g. in PvE games)

Voice or video messages left by users

NPCs wandering around the world using AI logic

Player characters on "auto pilot" that continue affecting the world when the player is offline

And many, many more

A persistent object with no Simulator is called an orphan. Orphans can be configured to be auto-adopted by Clients or Simulators on a FCFS basis.

This document explains how to set up an ever-increasing counter that all Clients have access to. This could be used to make sure that everyone can generate unique identifiers, with no chance of ever getting a duplicate.

By being persistent, the counter will also keep its value even if all Clients log off, as long as the Replication Server is running.
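
The core idea can be modeled without any networking: a single owner of the counter hands out numbers one at a time, so no two requesters ever receive the same value. The sketch below is a plain C# model under that assumption, not the actual Counter.cs script. `CounterSketch` is a hypothetical name, while `counter` and `NextNumber` follow the names used on this page.

```csharp
// Hypothetical, SDK-free model of the counter logic.
public class CounterSketch
{
    // In the networked version, this is the value that would be synced and persisted.
    private int counter;

    // Each call returns a value that has never been returned before,
    // because only this one object ever increments the counter.
    public int NextNumber()
    {
        counter++;
        return counter;
    }
}
```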

First, create a script called Counter.cs and add the following code to it:

This script expects a command sent from a script called NumberRequester, which we will create below.

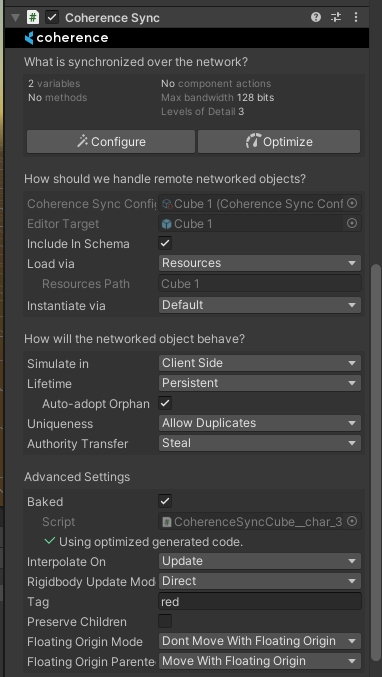

Next, add this script to a Prefab with CoherenceSync on it, and select the counter and the method NextNumber for syncing in the bindings window. To make the counter behave like we want, mark the Prefab as "Persistent" and give it a unique persistence ID, e.g. "THE_COUNTER". Also change the adoption behaviour to "Auto Adopt":

Finally, make sure that a single instance of this Prefab is placed in the scene.