Games are better when we play together.

coherence is a network engine, platform and a series of tools to help anyone create a multiplayer game. Our mission is to give any game developer, regardless of how technical they are, the power to make a connected game.

If you would like to get started right away, you can check the Installation page to learn how to install coherence in Unity, set up your Scene, Prefabs, interactions, as well as deploy your project to be shared with your friends.

To learn how to use coherence, we recommend you start by exploring the package Samples included right inside the Unity SDK. Alternatively, download one of our pre-made Unity projects, First Steps or Campfire, both of which come with extensive documentation explaining the thinking behind them.

If you enjoy learning with videos, we have a playlist of videos dedicated to getting started with the Unity SDK.

Finally, if you're new to networking you might enjoy our Beginner's Guide to Networking. This high-level introduction is not coherence-specific, but rather is applicable to any networking technology.

If you are an existing user and looking to update, check out the latest Release Notes. And maybe the SDK Upgrade Guide as well!

Get help, ask questions and suggest features in our Community

Chat with us on Discord

Contact us at devrel@coherence.io

Once you have installed the Unity SDK, you can start using coherence in a project.

We recommend that first-time users of coherence go through this flow in an empty project at least once before trying to network an existing game. This will give you a good understanding of the different aspects that make up the coherence toolset.

This section provides an example of the general coherence workflow in most projects.

It covers how to:

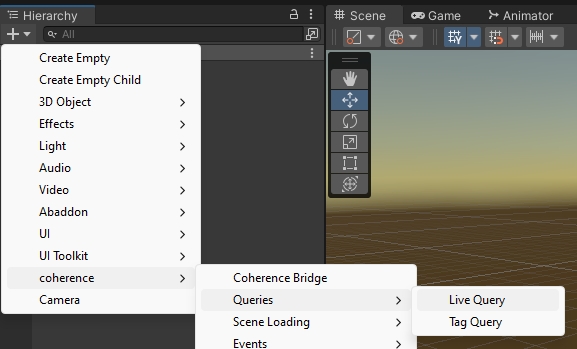

Prepare a Unity scene for network synchronization. This requires a CoherenceBridge, at least one CoherenceLiveQuery, and an in-game UI for connecting (see: Sample UIs).

Configure Prefabs to sync over the network using the CoherenceSync component.

Test your game locally.

Try the game live with coherence Cloud.

In the sub-pages of this section we'll go through all of them. Let's begin!

Here is the roadmap of the coherence SDK, engine and backend. We're constantly listening to your feedback to improve coherence. Please reach out on our forum and Discord if you have suggestions.

Better API reference documentation

Channels (ordered/unordered, reliable/unreliable)

Synchronizing Lists

Improvements to Uniqueness

Performance improvements

Scene transitioning improvements

Fully authoritative Simulator

Improvement to online dashboard logs

More code samples

Inventory

Voice

Matchmaking

Leaderboards

Console-specific updates

Mobile-specific updates

Platform-specific accounts

Debug tools

Built-in network condition simulation

Network profiler

Global KV store

Support for multiple Simulators and Replicators in a single project

More logging and diagnostics tools

Additional server regions

Support for lean pure C# clients and simulators without Unity

Bare-metal and cloud support

Unreal Engine SDK

The first step to use coherence in Unity is to install the coherence SDK.

Latest Unity LTS releases are officially supported. As of now, we support:

Unity 6 LTS (min. 6000.0.23f1)

Unity 2022 LTS (min. 2022.2.5f1)

Unity 2021 LTS (min. 2021.3.18f1)

You can conveniently download the SDK from the Unity Asset Store:

As an alternative to the Asset Store download, you can manually install coherence from our package repository. The rest of this page describes the steps to do so.

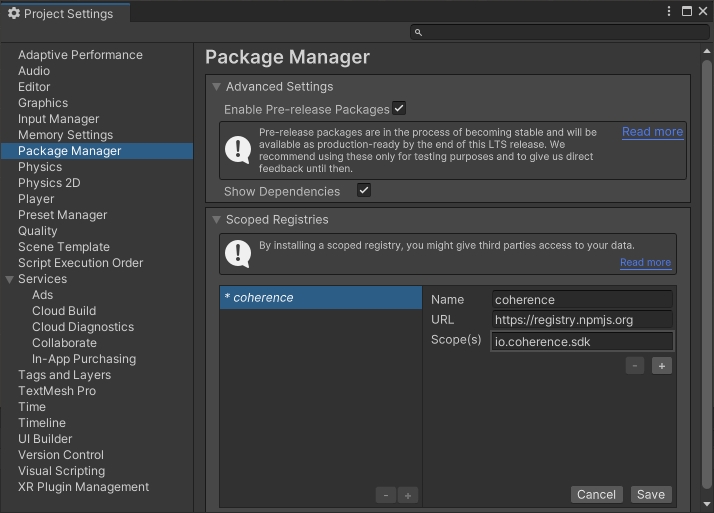

First, go to Edit > Project Settings. Under Package Manager, add a new Scoped Registry with the following information:

Name: coherence

URL: https://registry.npmjs.org

Scope(s): io.coherence.sdk

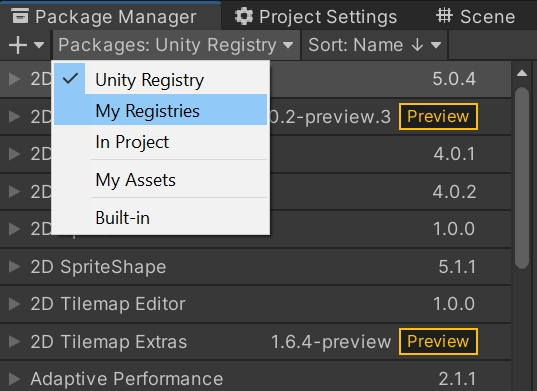

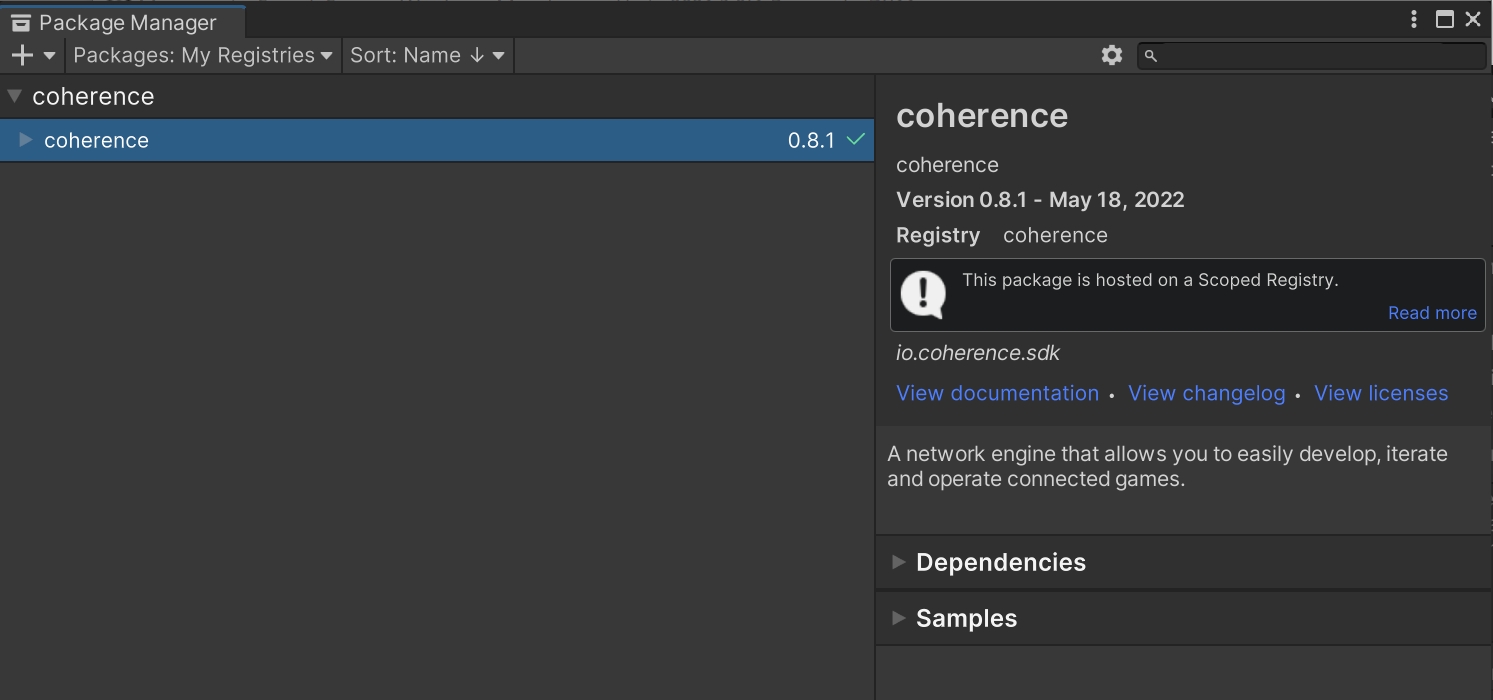

Now open Window > Package Manager. Select My Registries in the Packages dropdown.

Highlight the coherence package, and click Install.

As an alternative to the step-by-step process above, you can directly edit your package manifest.

Refer to Unity's instructions on modifying your project manifest.

Add an entry for the coherence SDK under the dependencies object

Add one for the scoped registry in the scopedRegistries array

You can see an example below:

You will then see the package in the Package Manager under My Registries.
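As a rough illustration of the manifest edit described above, the following Python sketch merges the two entries into a manifest loaded as a dictionary. It assumes the package name is the same as the scope listed earlier (`io.coherence.sdk`), and the version string is a placeholder you would replace with the release you actually want. The function name `add_coherence` is made up for this example and is not part of any coherence tooling.

```python
import json


def add_coherence(manifest: dict, version: str) -> dict:
    """Return a copy of a Unity manifest with the coherence registry and package added."""
    result = json.loads(json.dumps(manifest))  # deep copy via a JSON round-trip

    registry = {
        "name": "coherence",
        "url": "https://registry.npmjs.org",
        "scopes": ["io.coherence.sdk"],
    }
    registries = result.setdefault("scopedRegistries", [])
    # Skip the registry if an equivalent entry is already present.
    already_there = any(
        r.get("url") == registry["url"] and "io.coherence.sdk" in r.get("scopes", [])
        for r in registries
    )
    if not already_there:
        registries.append(registry)

    # Assumption: the package name matches the scope; the version is a placeholder.
    result.setdefault("dependencies", {})["io.coherence.sdk"] = version
    return result


if __name__ == "__main__":
    example = {"dependencies": {"com.unity.ugui": "1.0.0"}}
    print(json.dumps(add_coherence(example, "X.Y.Z"), indent=2))
```

The printed JSON shows the shape that `Packages/manifest.json` would take: one new key under `dependencies` and one new object in `scopedRegistries`.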

When you successfully install the coherence SDK, after code compilation, you should see the Welcome window.

coherence is a network engine, platform, and a series of tools to help anyone create a multiplayer game.

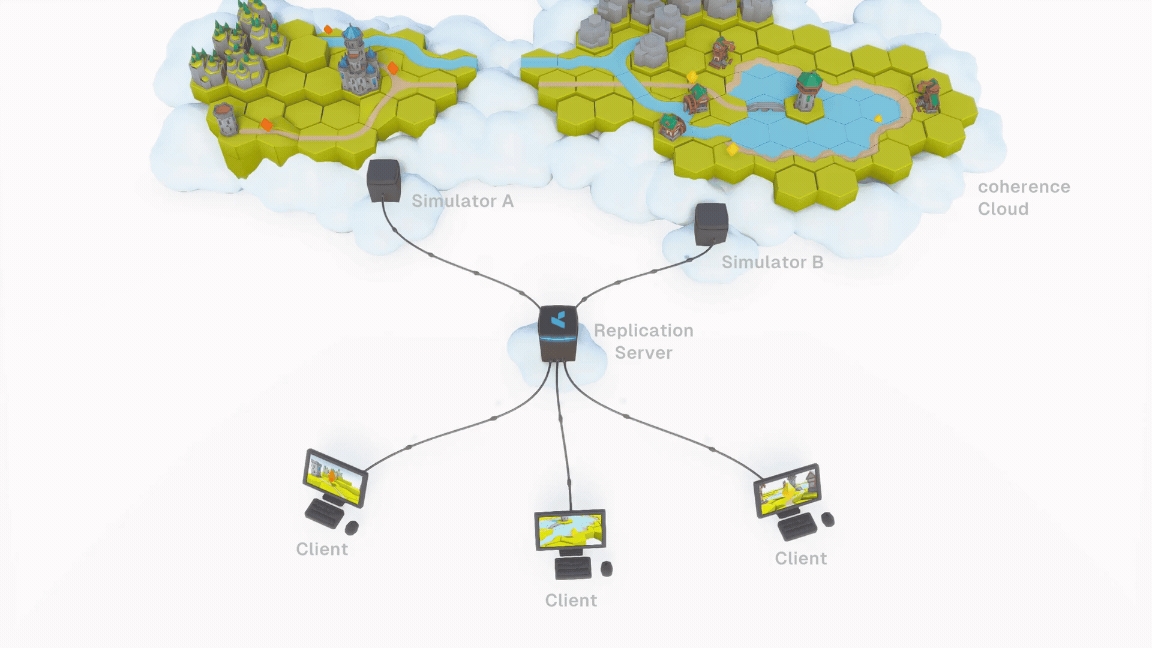

Our network engine is our foundational tech. It works by sharing game world data via the Replication Server and passing it to the connected Clients. The Clients, in this context, can be regular game Clients (where a human player is playing the game) or a special version of the game running in the cloud, which we call "Simulator".

While coherence's network engine is meant to be engine-agnostic, we offer SDKs to integrate with popular engines (for instance, Unity).

The coherence Unity SDK provides a suite of tools and pre-made Unity components, and a designer-friendly interface to easily configure networked Prefabs. It also takes care of generating netcode via a process called "Baking". In fact, simple networking can be set up completely without code.

But coherence is not just an SDK.

For more information about how a network topology is structured in coherence, check out this video:

The Replication Server is a lean and performant smart relay that keeps the state of the world, and replicates it efficiently between various Simulators and game Clients.

A Simulator is a special version of the Game Client without graphics (a "headless client"), optimized and configured to perform server-side simulation of the game world. When we say something is simulated "server-side", we mean it is simulated on one or several Simulators.

The coherence Cloud is an easy-to-manage platform for hosting and scaling the backend for your multiplayer game. The coherence Cloud can host a Replication Server, but also Simulators.

Our cloud-backed Dashboard is where you can control all aspects of a project, configure matchmaking, Rooms, and Worlds, and keep an eye on how much traffic the project is generating.

Edit /Packages/manifest.json

The coherence Cloud is a platform that can handle scaling, matchmaking, persistence and load balancing, all automatically, and all from a handy Dashboard. The coherence Cloud can be used to launch and maintain live games, as well as to quickly test a game in development together with remote colleagues.

The Replication Server usually runs in the coherence Cloud, but developers can start it locally from the command line or the Unity Editor. It can also be run on-premise, hosted on your own servers, or be hosted by one of the Clients (Client-hosting).

A Game Client is a regular build of the game. To connect to coherence, it uses our SDK.

Clients (and Simulators) can define areas of interest (Live Queries), levels of detail, varying simulation and replication frequencies, and other optimizations to control how much bandwidth and CPU is used in different scenarios.

Code generation is the process of generating code specific to the game engine that takes care of network synchronization and other network-specific code. This is also known as "baking", and it's a completely automated process in coherence, triggered by just pressing a button. You can, however, customize it for very advanced use cases.

In addition, every project can have a showcase page where you can host builds of your game!

For more coherence terminology, visit the Glossary.

Fast network engine with cloud scaling, state replication, persistence and auto load balancing.

Easy to develop, iterate and operate connected games and experiences.

SDK allows developers to make multiplayer games using Windows, Linux or Mac, targeting desktop, console, mobile, VR or the web.

Game engine plugins and visual tools will help even non-coders create and quickly iterate on a connected game idea.

Scalable from small games to large virtual worlds running on hundreds of servers.

Game-service features like user account and key-value stores.

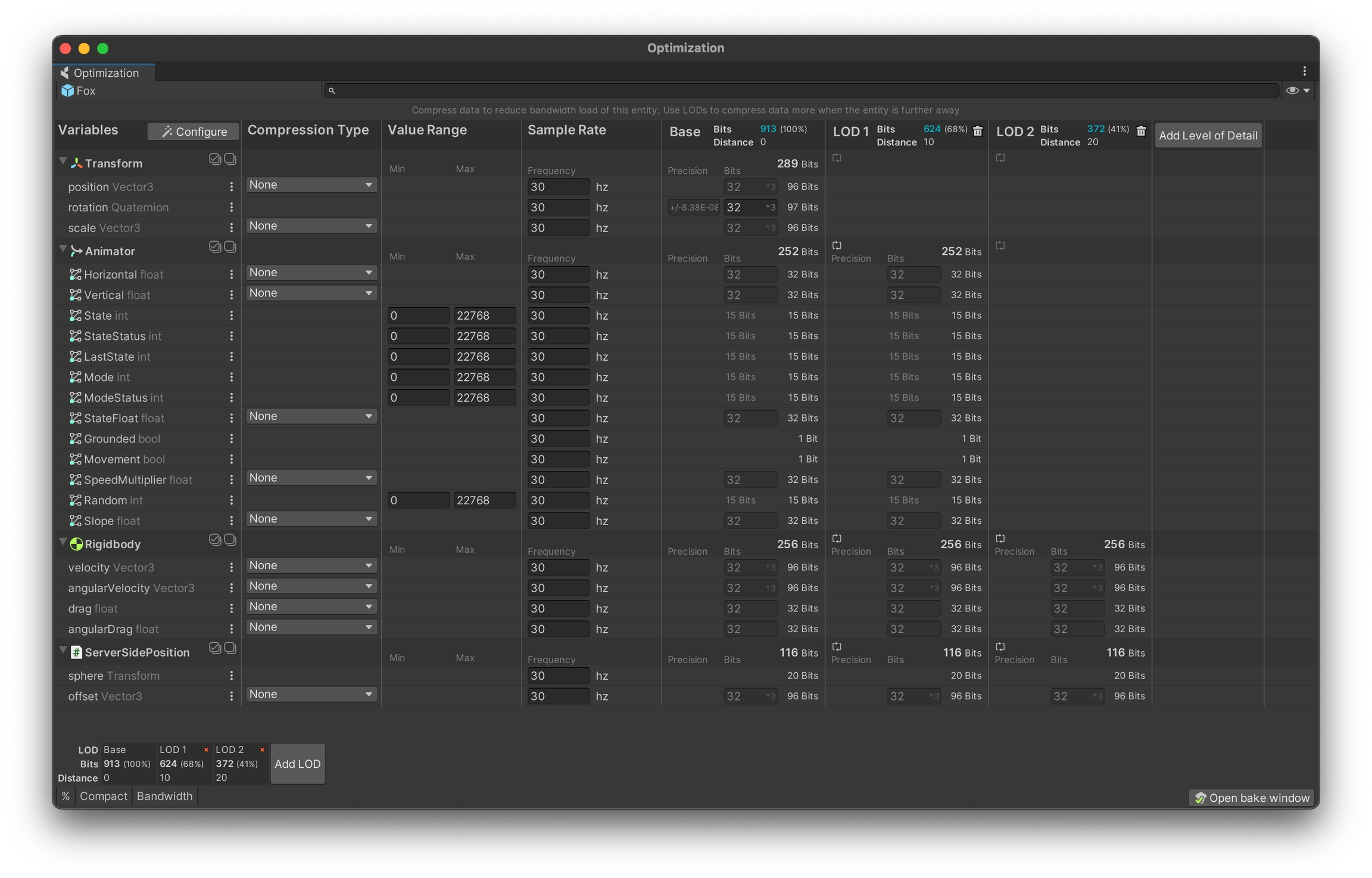

At the core of coherence lies a fast network engine based on bitstreams and a data-oriented architecture, with numerous optimization techniques like delta compression, quantization and network LOD-ing ("Level of Detail") to minimize bandwidth and maximize performance.
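To make two of these terms concrete, here is a small, engine-independent Python sketch of quantization (storing a float in a fixed number of bits over a known range) and delta compression (sending only the fields that changed). It illustrates the general techniques only; the function names, ranges and bit counts are assumptions made for the example and do not describe how coherence implements them internally.

```python
def quantize(value: float, lo: float, hi: float, bits: int) -> int:
    """Map a float in [lo, hi] to an integer code that fits in `bits` bits."""
    levels = (1 << bits) - 1
    clamped = min(max(value, lo), hi)
    return round((clamped - lo) / (hi - lo) * levels)


def dequantize(code: int, lo: float, hi: float, bits: int) -> float:
    """Recover an approximation of the original float from its integer code."""
    levels = (1 << bits) - 1
    return lo + code / levels * (hi - lo)


def delta(previous: dict, current: dict) -> dict:
    """Keep only the fields whose value differs from the previously sent state."""
    return {
        key: value
        for key, value in current.items()
        if key not in previous or previous[key] != value
    }


if __name__ == "__main__":
    code = quantize(12.5, -100.0, 100.0, 16)
    print(code, dequantize(code, -100.0, 100.0, 16))
    print(delta({"x": 1, "y": 2}, {"x": 1, "y": 3}))
```

Fewer bits mean less bandwidth but a coarser value, which is the trade-off that per-field compression settings expose.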

The network engine supports multiple authority models:

Client authority

Server authority

Server authority with client prediction

Authority handover (request, steal)

Distributed authority (multiple simulators with seamless transition)

Deterministic client prediction with rollback ("GGPO") - experimental

Different authority models can be mixed in the same game.
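The handover modes can be pictured with a small conceptual model. The Python sketch below is not the coherence API: the class names and the `owner_approves` flag are hypothetical, and it assumes that "request" lets the current owner accept or decline while "steal" always succeeds.

```python
from enum import Enum


class Transfer(Enum):
    NOT_TRANSFERABLE = "not transferable"
    REQUEST = "request"
    STEAL = "steal"


class Entity:
    """Toy model of a networked entity with a single authoritative owner."""

    def __init__(self, owner: str, transfer: Transfer):
        self.owner = owner
        self.transfer = transfer

    def request_authority(self, requester: str, owner_approves: bool = True) -> bool:
        """Try to move authority to `requester`; return True if it now holds it."""
        if requester == self.owner:
            return True
        if self.transfer is Transfer.NOT_TRANSFERABLE:
            return False
        # Assumption: in "request" mode the current owner may decline.
        if self.transfer is Transfer.REQUEST and not owner_approves:
            return False
        self.owner = requester
        return True


if __name__ == "__main__":
    door = Entity(owner="client-a", transfer=Transfer.REQUEST)
    print(door.request_authority("client-b", owner_approves=False), door.owner)
    print(door.request_authority("client-b", owner_approves=True), door.owner)
```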

coherence supports persistence out of the box. This means that the state of the world is preserved no matter if clients or simulators are connected to it or not. This way, you can create shared worlds where visitors have a lasting impact.

The coherence SDK only supports Unity at the moment. Unreal Engine support is planned. For more specific details, please check the Unreal Engine Support page. For custom engine integration, please contact our developer relations team.

Custom UDP transport layer using bit streams with reliability

WebRTC support for WebGL builds

Smooth state replication

Server-side, Client-side, distributed authority

Connected entity support

Fast authority transfer

Remote messaging (RPC)

Persistence

Verified support for Windows, macOS, Linux, Android, iOS and WebGL

Support for Rooms and Worlds

Floating Origin for extremely large virtual Worlds

TCP Fallback

Support for Client hosting through Steam Datagram Relay

Unity SDK with an intuitive no-code layer

Per-field adjustable interpolation and extrapolation

Input queues

Easy deployment into the cloud

Multi-room Simulators

Multiple code generation strategies (Assets/Baking, automated with C# Source Generators)

Extendable object spawning strategies (Resources, Direct References, Addressables) or implement your own

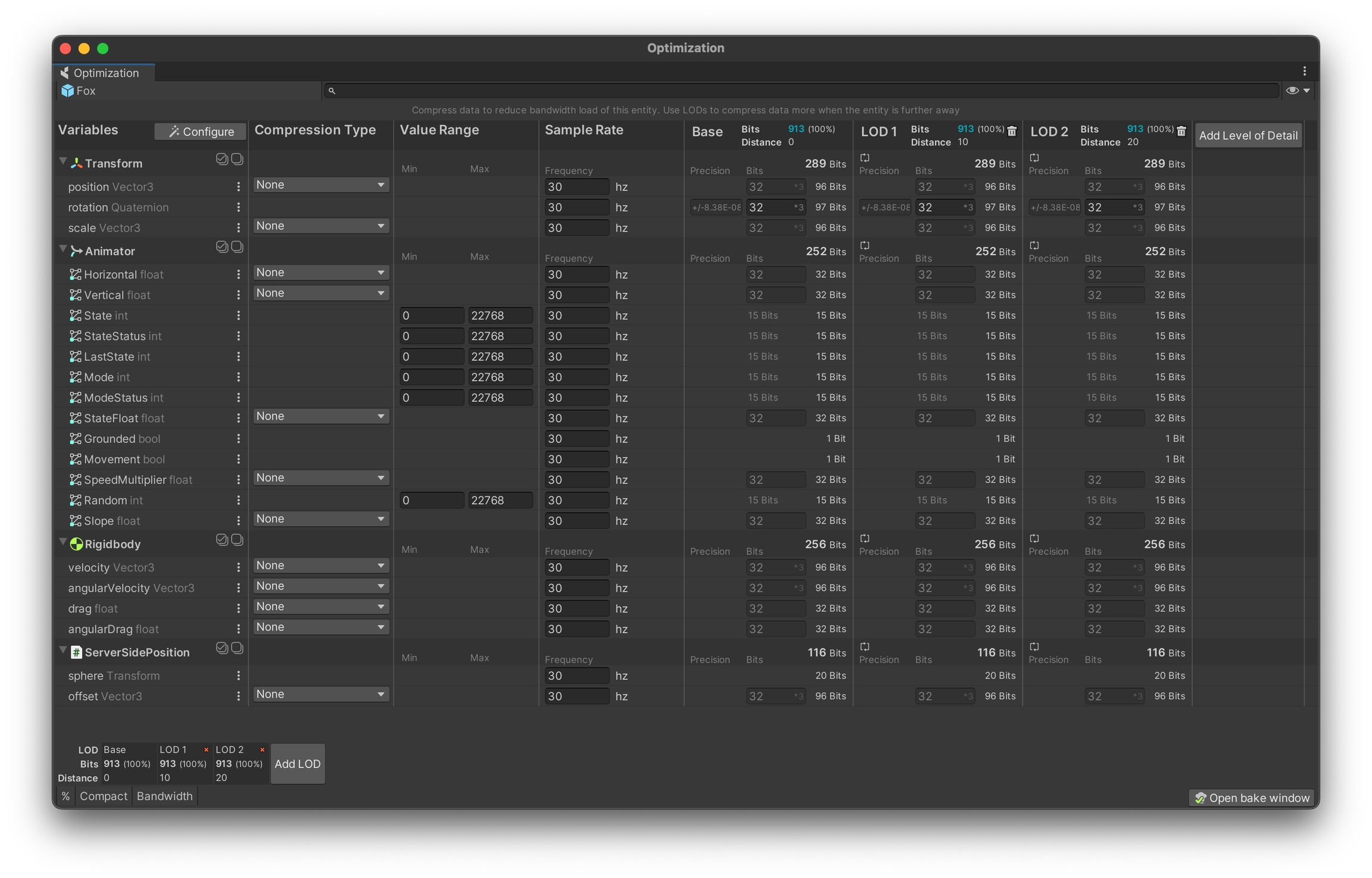

Per-field compression and quantization

Per-field sampling frequency adjustable at runtime

Unlimited per-field levels of detail

Areas of interest

Accurate Simulation Frame tracking

Network profiler

Online Dashboard for management and usage statistics

Automatic server deployment and scaling

Multiple regions in the US, Europe and Asia

Player accounts with a persistent key/value store

Matchmaking and lobby rooms

If you need to support your game in mainland China, please contact us for a custom solution.

An easy way to test your game locally is to simply create a build, and open several instances of it.

You can also connect the Editor alongside the builds, with the extra benefit of being able to inspect the hierarchy and the state of its GameObjects.

Pros

Easy to distribute amongst team members and testers

Well-understood workflow

Can test with device-specific constraints (smartphones, consoles, ...)

Cons

Not the shortest iteration time, as you need to continuously make builds

Harder to debug on the builds (requires custom tooling on your side to do so)

Make sure you've read through Local Development and have started a Local Replication Server.

Let's create a standalone build. Before we do so, check a few settings:

In Project Settings > Player make sure that the Run in Background option is checked.

Go to Project Settings > Player and change the Fullscreen Mode to Windowed and enable Resizable Window. This will make it much easier to observe standalone builds side-by-side when testing networking.

With these in place, we're ready to build.

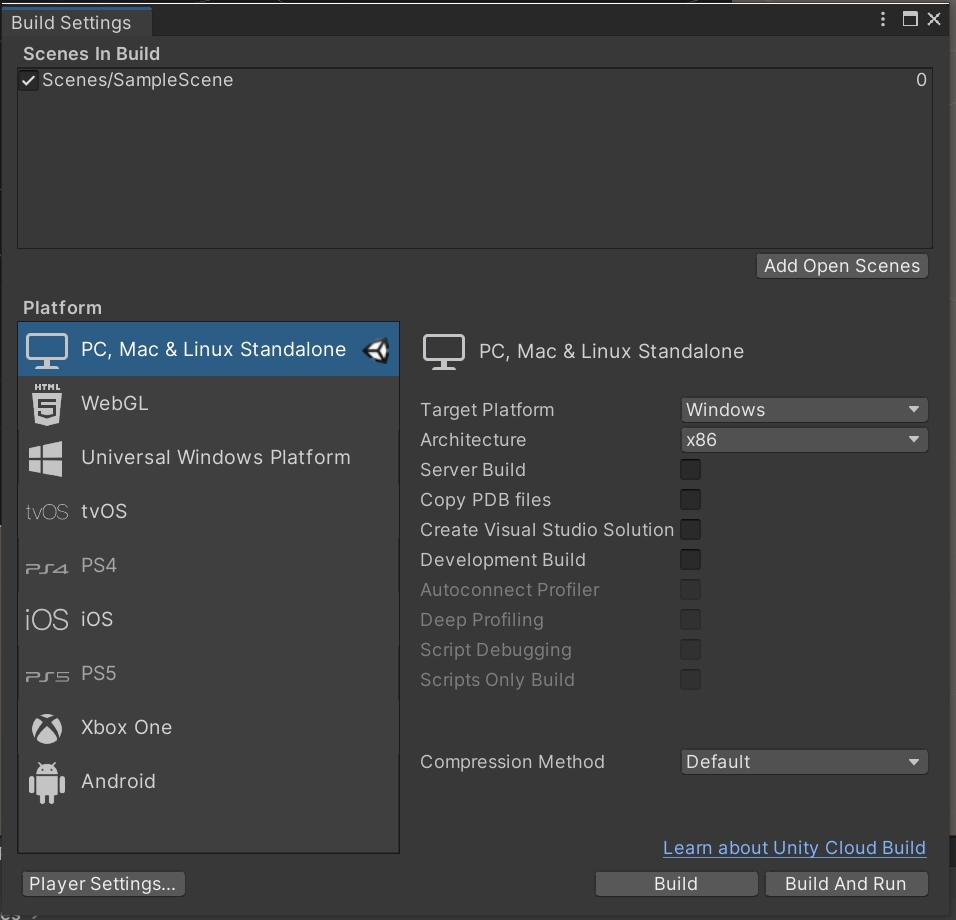

Open the Build Settings window (File > Build Settings). Click on Add Open Scenes to add the current scene to the build.

Click Build and Run.

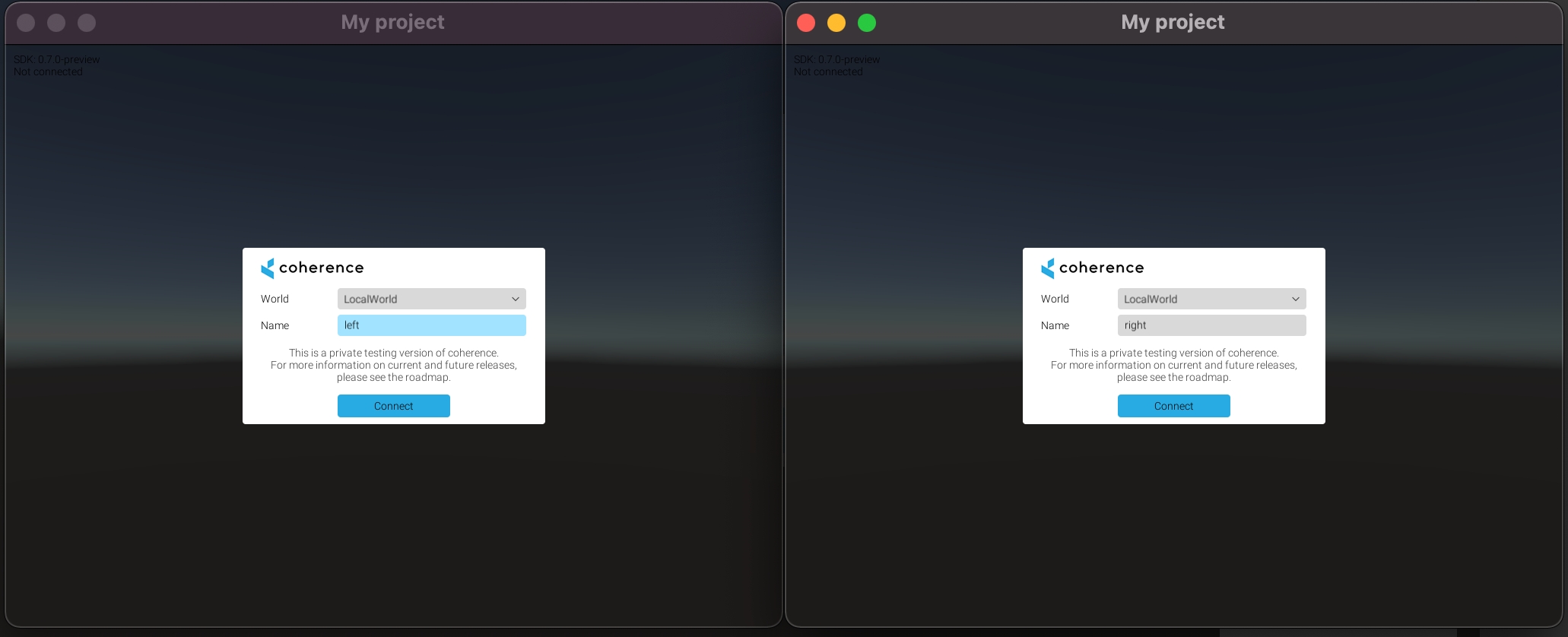

When the build is done, start another instance of the executable (or run the project in Unity by just hitting Play).

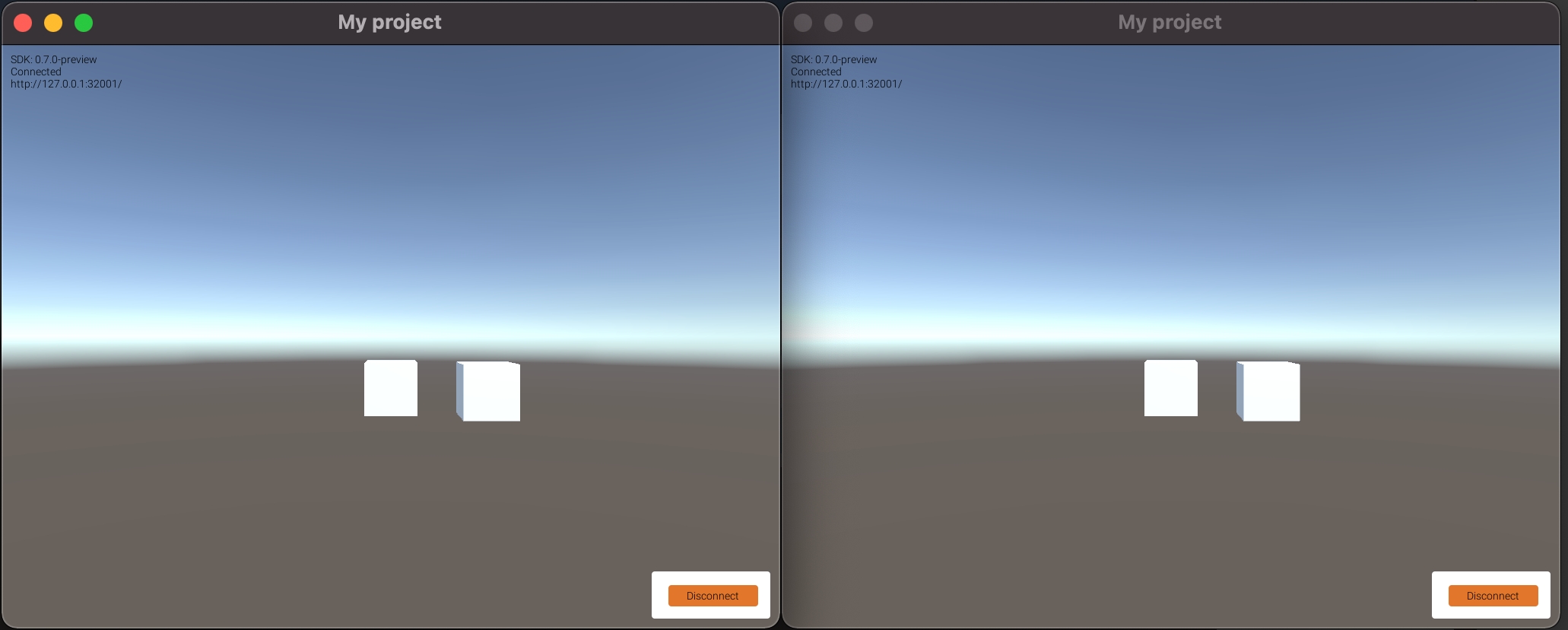

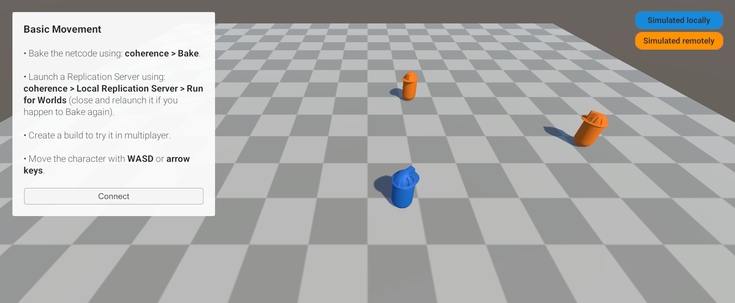

Click Connect in the connection UIs on both clients. Now, try focusing on one and using WASD keys. You will see the box move on the other side as well.

One of the first steps in adding coherence to a project is to set up the scene that you want the networking to happen in.

The topics of this page are covered in the first minute of this video:

Preparing a scene for network synchronization requires adding three fundamental objects:

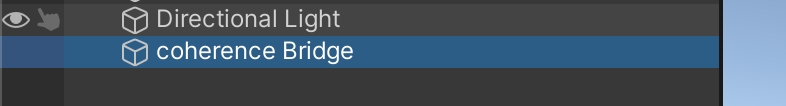

In the top menu: coherence > Scene Setup > Create CoherenceBridge

A GameObject with a CoherenceBridge script will be created.

This object manages the connection with coherence's relay, the Replication Server, and is the centre of many connection-related events.

No particular setup is required now, but feel free to explore the options in its Inspector.

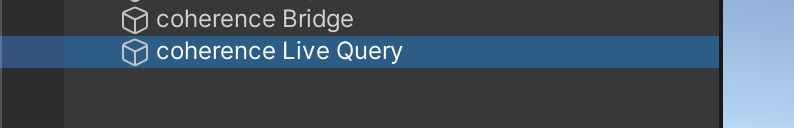

In the top menu: coherence > Scene Setup > Create LiveQuery

A GameObject with a LiveQuery script will be created.

A LiveQuery defines what part of the world the Client is interested in when requesting data from the Replication Server. When Constrained, it covers a limited volume. The Extent property specifies how far it reaches. Anything that is outside the area defined by the LiveQuery will not be synced.

For a big game world, it makes sense to use a small range and parent the LiveQuery to the player character or the camera, so it can move with it. But for now, let's just create a LiveQuery, position it at the centre of the world, and keep it as Infinite (no spatial constraints).

While LiveQueries are an optimisation tool, having at least one LiveQuery is necessary.
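The filtering idea behind a constrained LiveQuery can be sketched in a few lines of Python. This is only a conceptual model: it assumes the query volume is an axis-aligned cube centred on the query position, with the extent as its half-size, and it uses `None` to stand for an infinite query. The function names are invented for the example.

```python
def in_live_query(entity_pos, query_center, extent=None) -> bool:
    """Return True if a position falls inside the query volume (None = infinite)."""
    if extent is None:
        return True
    return all(abs(e - c) <= extent for e, c in zip(entity_pos, query_center))


def visible_entities(entities: dict, query_center, extent=None) -> list:
    """List the names of entities that the query would receive updates for."""
    return [
        name
        for name, position in entities.items()
        if in_live_query(position, query_center, extent)
    ]


if __name__ == "__main__":
    world = {"near": (1.0, 0.0, 2.0), "far": (50.0, 0.0, 0.0)}
    print(visible_entities(world, (0.0, 0.0, 0.0), extent=10.0))
    print(visible_entities(world, (0.0, 0.0, 0.0)))
```

Moving `query_center` along with the player is the programmatic equivalent of parenting the LiveQuery to the character or camera.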

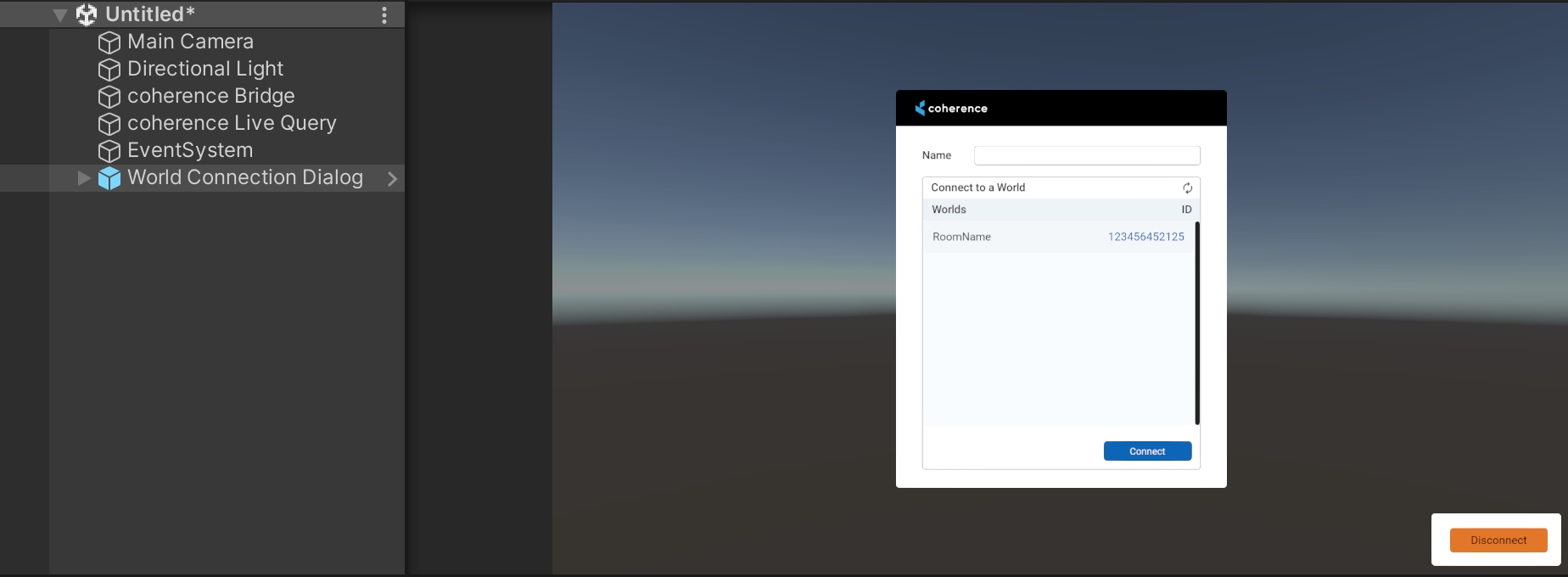

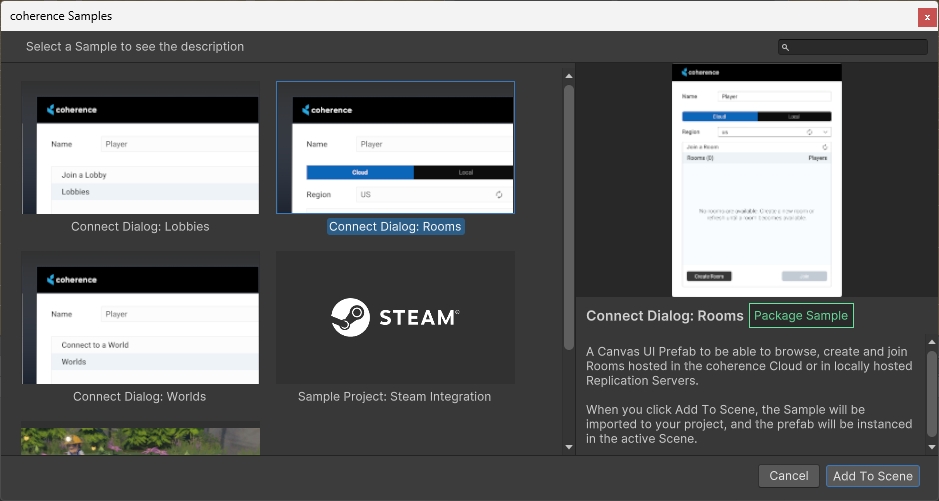

In the top menu: coherence > Explore Samples

From the Explore Samples menu, choose Connect Dialog: Rooms. The Prefab will be instantiated in your scene.

A Connect dialog UI provides an interface for the player to connect to the Replication Server, once the game is running. You can create your own connection dialog, but we provide a few examples as a quick way to get started and for prototyping. Read more in the section dedicated to Sample UIs.

In this section, we:

Added a CoherenceBridge to the scene to facilitate connection to the Replication Server

Used a LiveQuery to ensure we receive network updates

Added an in-game UI to allow players to connect over the network

Next: time to set up some Prefabs!

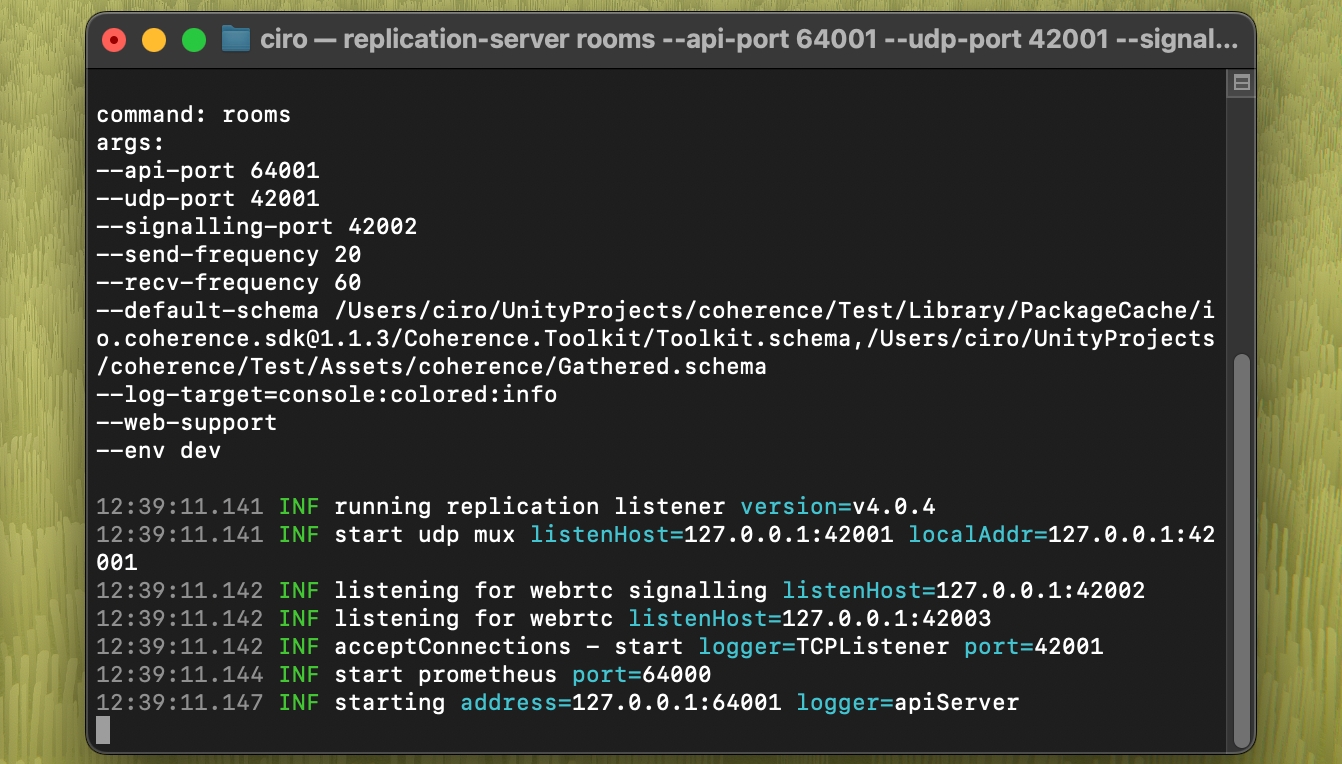

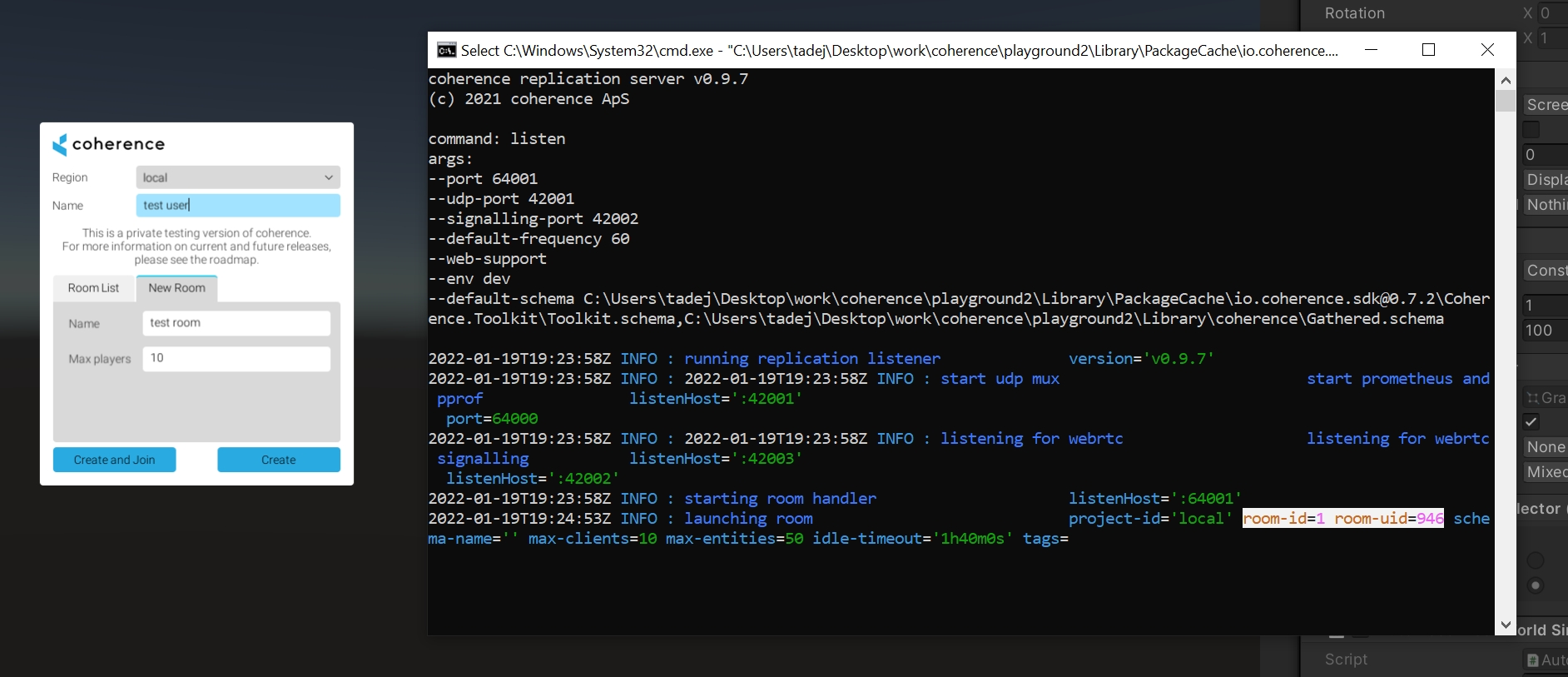

Now we can build the project and try out network replication locally. To do so, we need to launch and connect to a Replication Server.

You can run a local Replication Server directly on your machine! You can either:

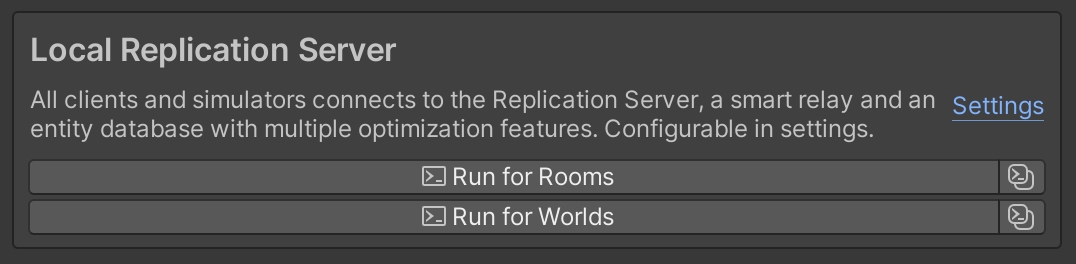

Go to: coherence > Local Replication Server > Run for Rooms or Run for Worlds

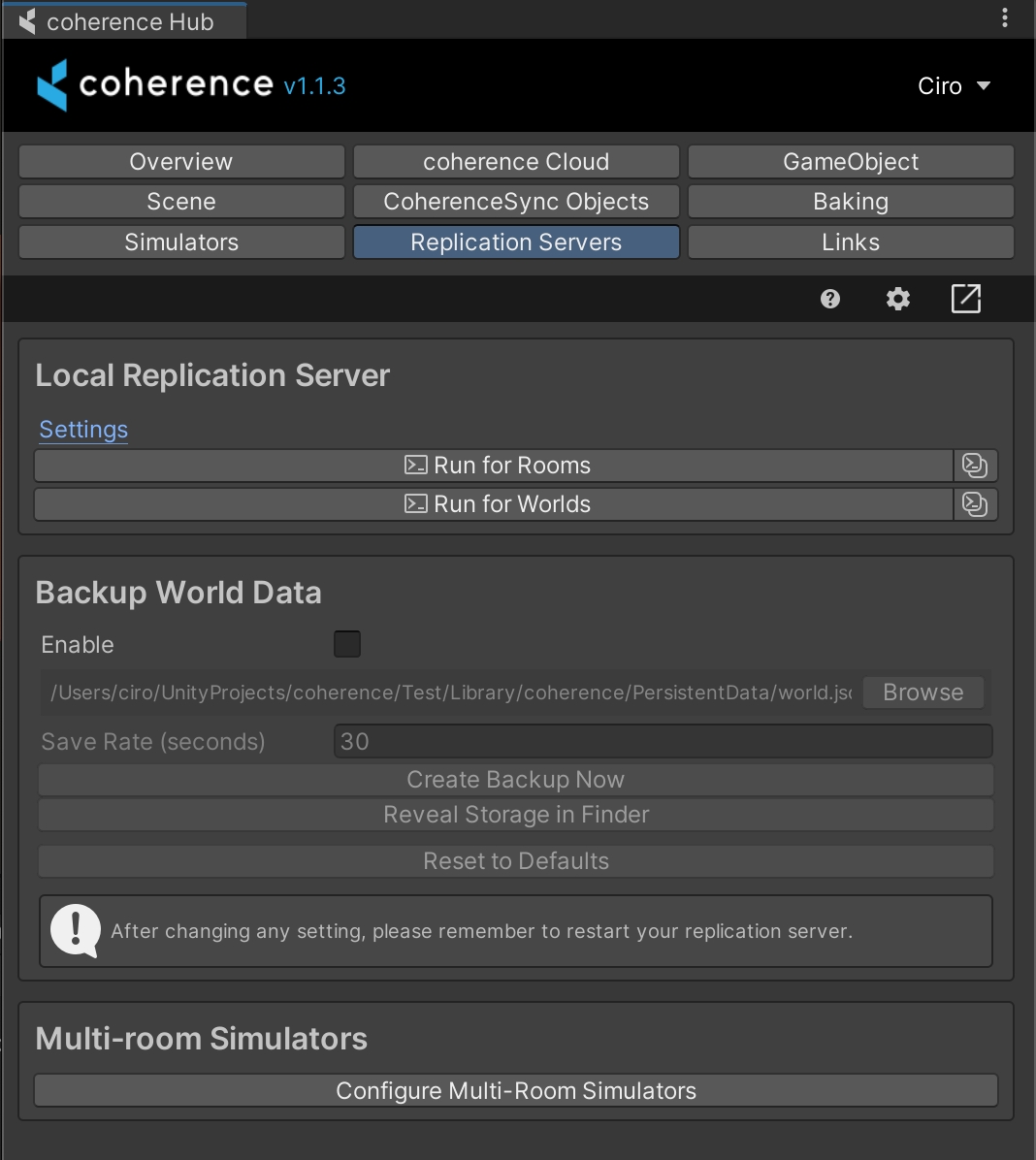

In the coherence Hub, open the Replication Servers tab. From here, you can run a server for Rooms or Worlds:

Whether you run a Replication Server for Rooms or for Worlds depends on which setup you plan to use, which in turn requires the correct corresponding Sample UI.

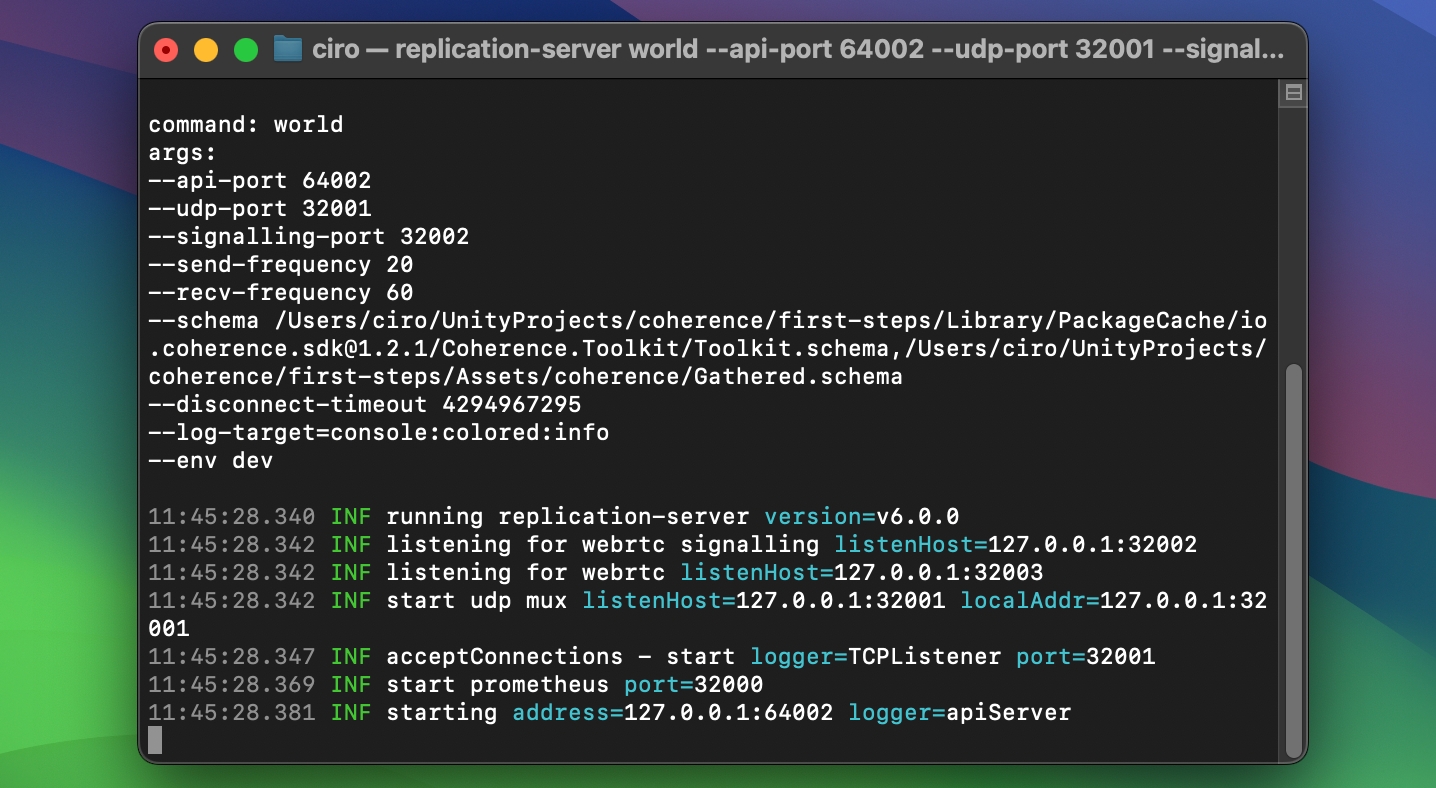

Regardless of how you launch it, a new terminal window will open and display the running Replication Server:

If the console opens correctly and you don't see an error line (they show up in red), it means your Replication Server is running! Now you should be able to connect to it, in the game.

It is often useful to be able to run multiple instances of your game on the same device. This allows you to simulate multiple player connections.

There are multiple ways to do this:

Make a build of the game

Use the Multiplayer Play Mode package (recommended, only available in Unity 6)

Use a third-party plugin, such as ParrelSync

Dive into the individual pages to see our recommendation for each option.

Connecting is done using our API. For now, use one of the Sample UIs we provide. You should already have one in the scene if you followed the steps in the Scene Setup section.

With the game and the Replication Server running, you can now connect and play the game.

If you can't connect

Did you change something in the configuration of your connected Prefabs? You have to bake again, and restart the Replication Server.

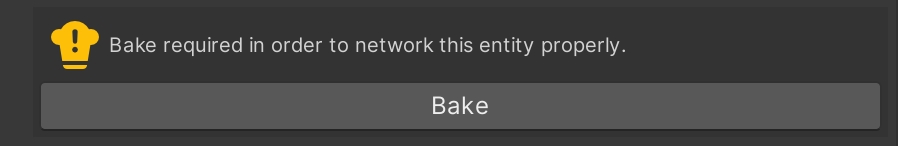

Time to bake!

In this section, we:

Ran a local Replication Server

Saw how to run multiple instances of the game

Connected to the Replication Server

Now that we know things work locally, it's time to test the game in the coherence Cloud!

Multiplayer Play Mode (MPPM) is Unity's official solution for local multiplayer testing, available from Unity 6.

Pros

Short iteration times

Tighter integration within the Editor, doesn't require multiple (standalone) Editors open

Cons

Requires Unity 6+

Can be more resource demanding than just running builds

Install MPPM as described in their Installation Instructions

Open Window > Multiplayer Play Mode

Enable up to 4 virtual Players

Make sure that the baked data is up-to-date before starting to test, and that the Replication Server is running with the latest schema

Enter Play Mode

coherence tells apart virtual Players from the main Editor, so it's easier for you to not edit assets in clones by mistake.

In this section, we will learn how to prepare a Prefab for network replication.

Setting up basic syncing is explained in this video, from 1:00 and onwards:

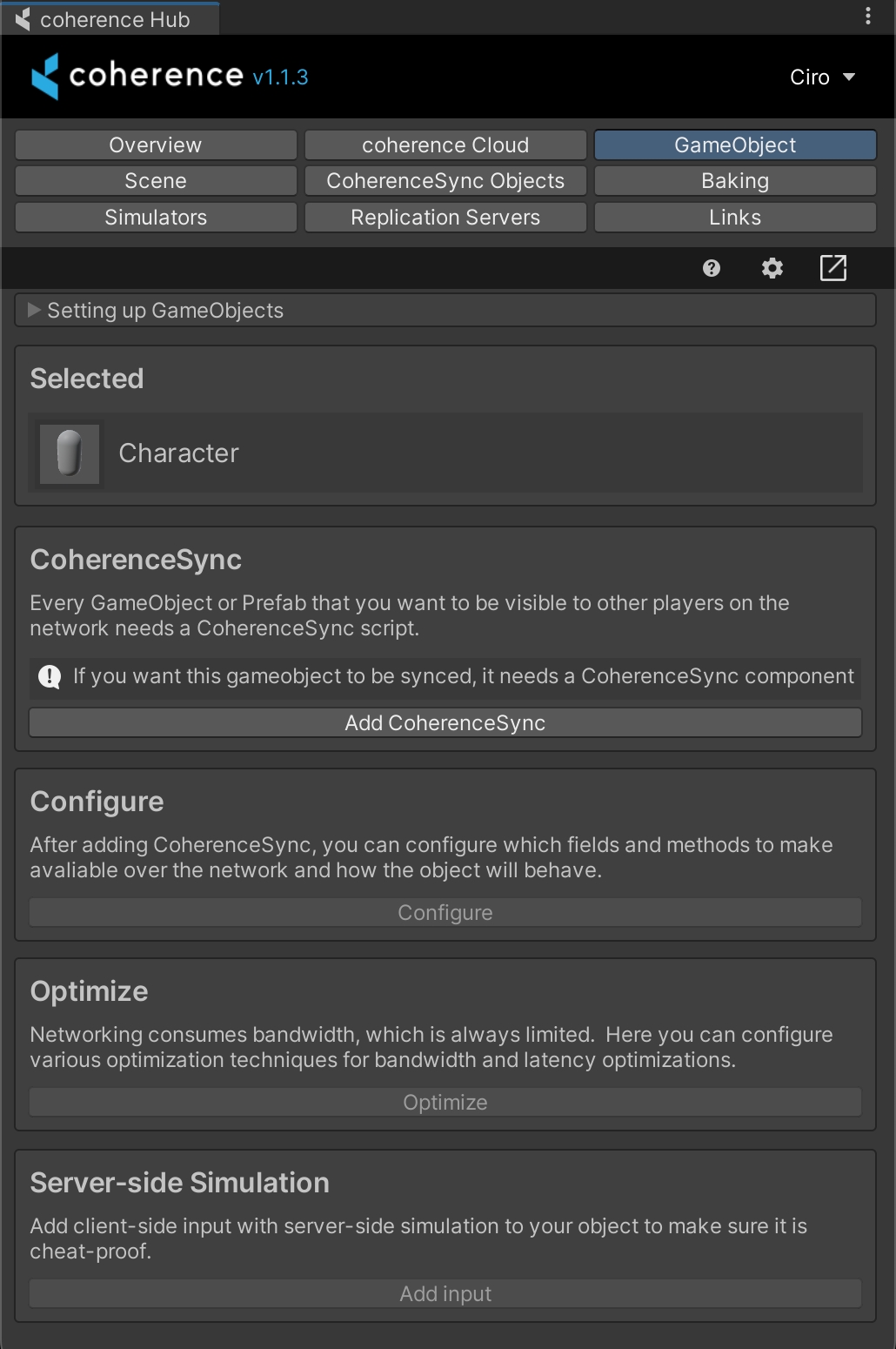

Menu: coherence > coherence Hub

You can let the coherence Hub guide you through your Prefab setup process. Simply select a Prefab, open the GameObject tab and follow the instructions.

You can also follow the detailed step-by-step text guide below.

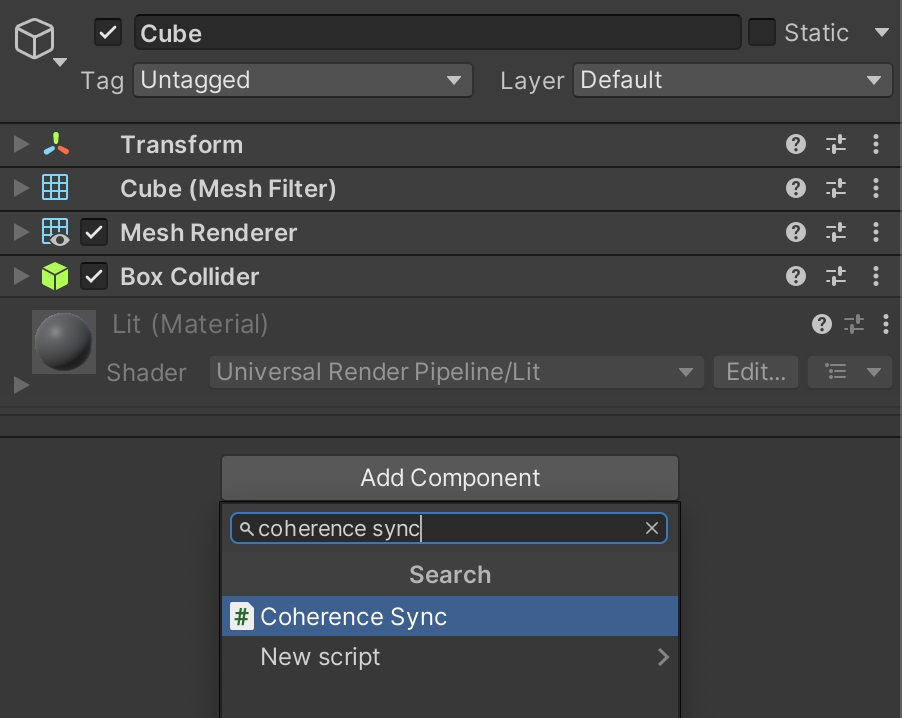

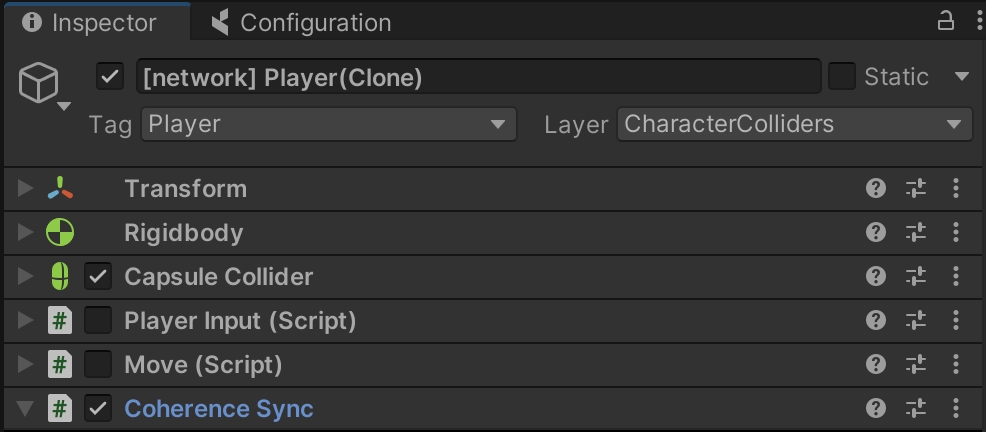

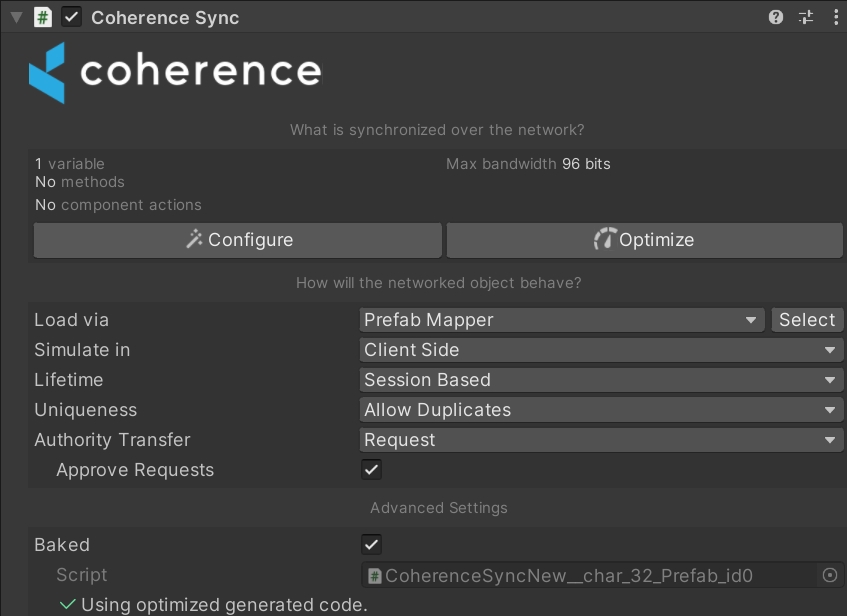

CoherenceSync component

For an object to be networked through coherence, it needs to have a CoherenceSync component attached, and be a Prefab.

Currently, only Prefabs can be networked.

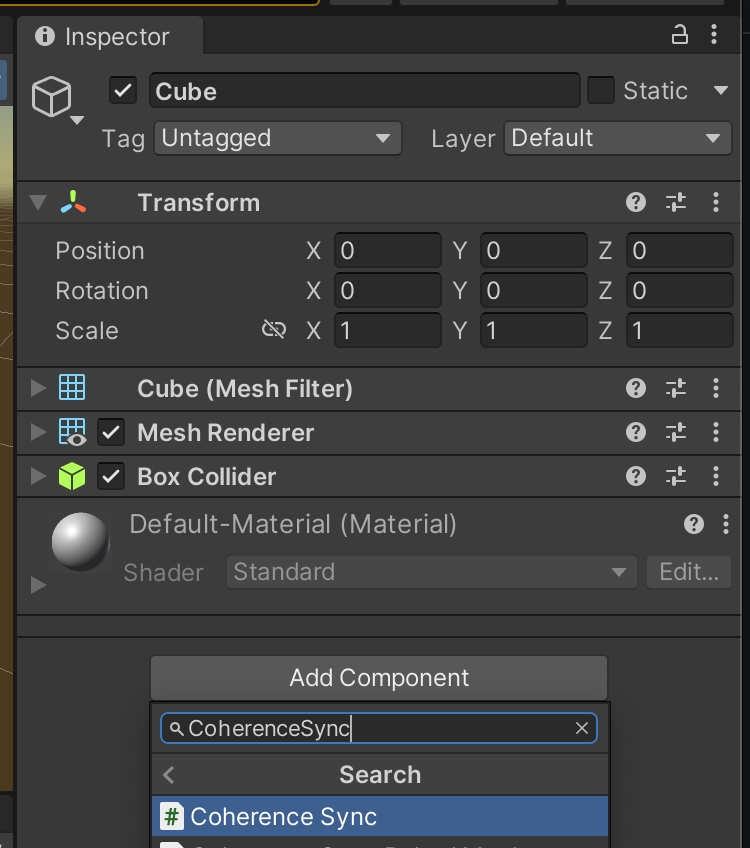

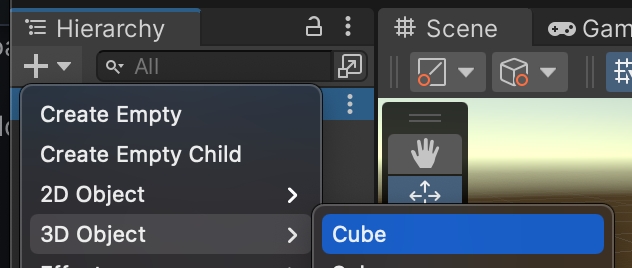

First, create a new GameObject. In this example, we're going to create a cube.

Next, we add the CoherenceSync component to Cube.

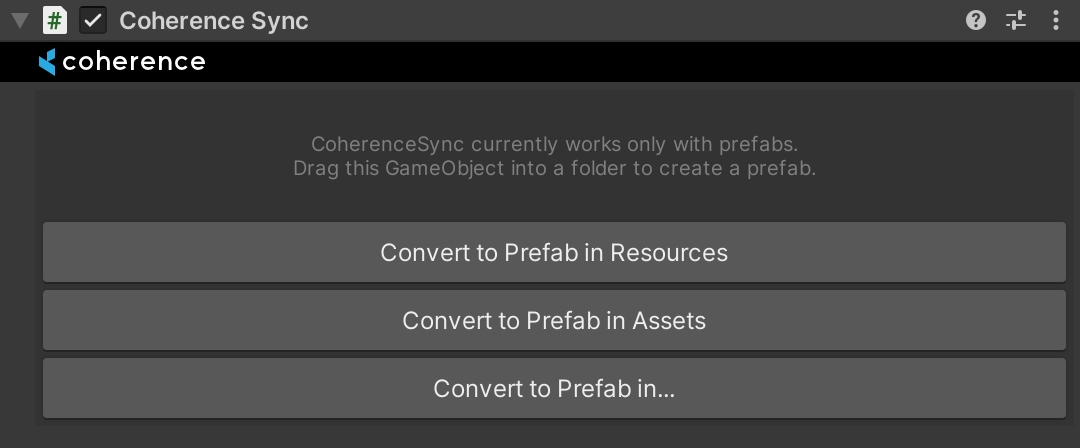

The CoherenceSync inspector now tells us that we need to make a Prefab out of this GameObject for it to work. We get to choose where to create it:

Now you can either:

Click on the Sync with coherence checkbox at the top of the Prefab inspector.

Manually add the CoherenceSync component.

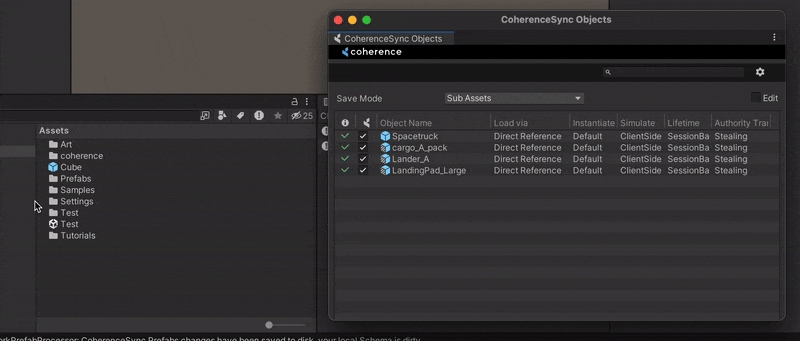

Drag the Prefab to the CoherenceSync Objects window. You can find it in coherence > CoherenceSync Objects.

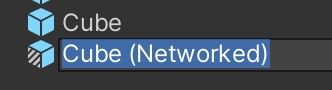

One way to configure a pre-existing Prefab for networking, instead of just adding CoherenceSync to it, is to derive a Prefab variant and add the component to that instead.

In our Cube example, instead of adding CoherenceSync to Cube, you can create a Cube (Networked) Prefab and add the component to it:

This way, you can retain the original Prefab untouched, and build all the network functionality separately, in its own Prefab.

Another way to use Prefab variants to our advantage is to have a base Prefab using CoherenceSync, and create Prefab variants off that one with customizations.

For example, Enemy (base Prefab) and Enemy 1, Enemy 2, Enemy 3... (variant Prefabs, using different models, animations, materials, etc.). In this setup, all of the enemies will share base networking settings stored in CoherenceSync, so you don't have to manually update every one of them.

Prefab variants inherit the network settings from their base, and you can change those with overrides in the Configuration window. When a synced variable, method or component action is present in the variant and not in the parent, it will be shown in bold with a blue line next to it, just like any other override in Unity:

CoherenceSync

The CoherenceSync component will help you prepare an object for network synchronization during design time. It also exposes APIs that allow you to manipulate the object during runtime.

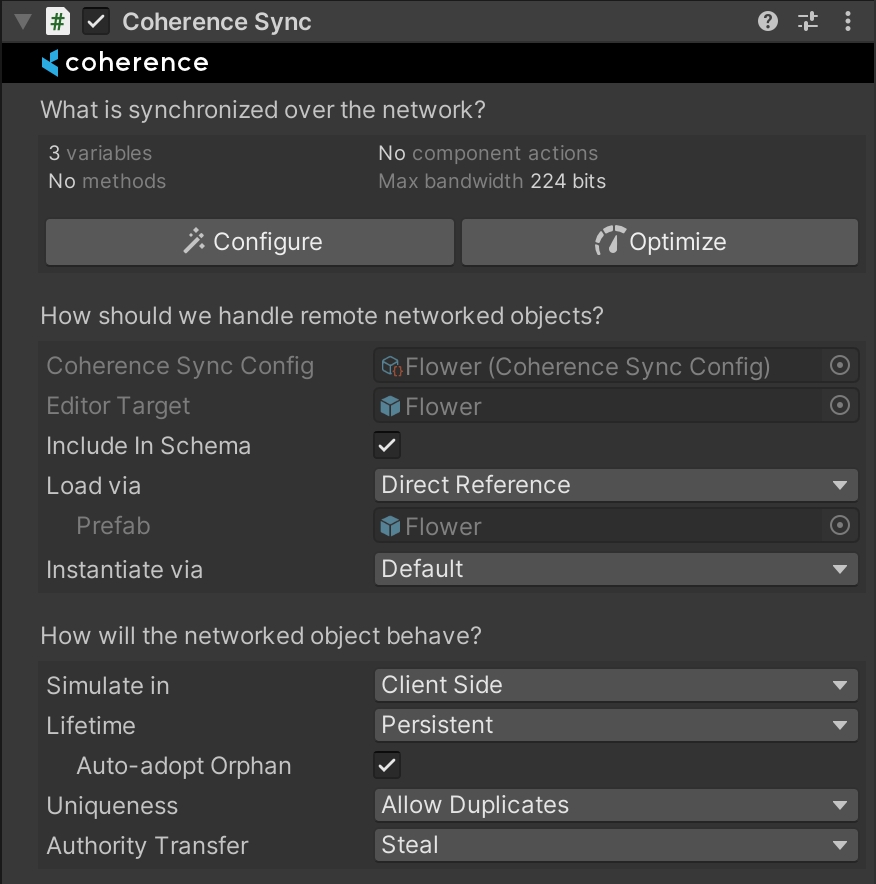

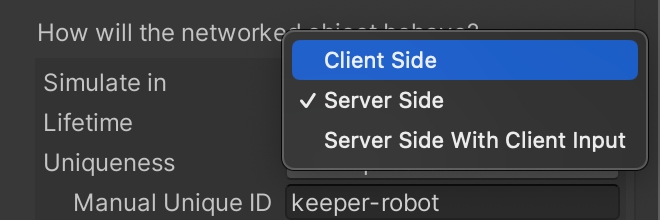

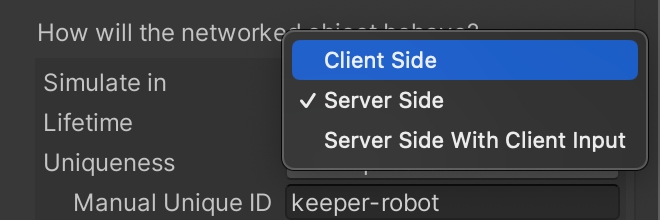

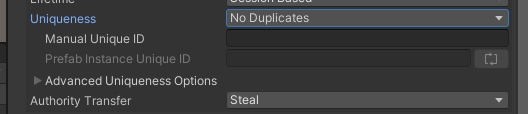

In its Inspector you can configure settings for Lifetime (Session-based or Persistent), Authority transfer (Not Transferable, Request or Steal), Simulate In (Client-side, Server-side or Server-side with Client Input) and Adoption settings for when persistent entities become orphaned, and more.

There are also a host of Events that are triggered at different times.

For now, we can leave these settings to their defaults.

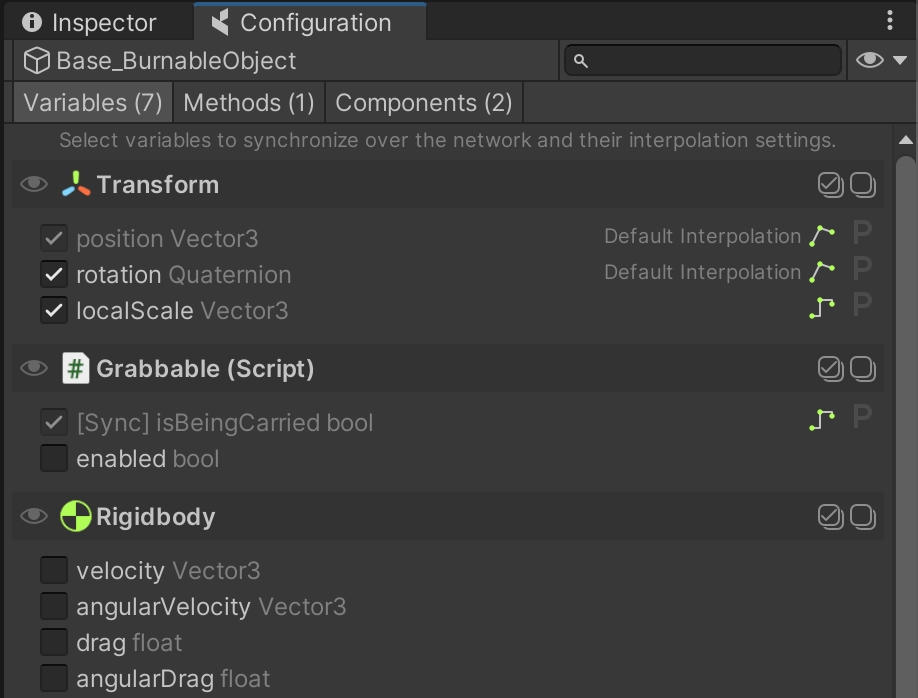

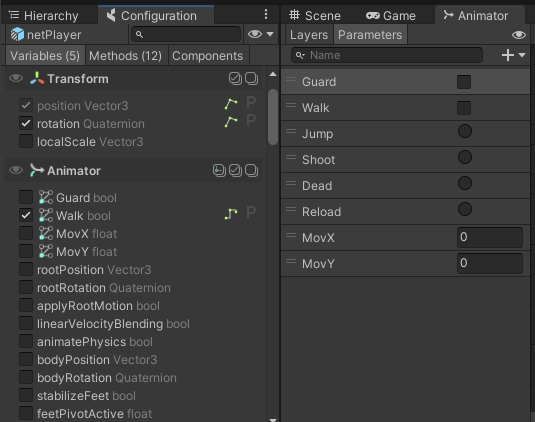

CoherenceSync allows you to automatically network all public variables and methods on any of the attached components, from built-in Unity components such as Transform, Animator, etc. to any custom script, including scripts that came with Asset Store packages that you may have downloaded.

Make sure the variables you want to network are set to public. coherence cannot sync non-public variables.

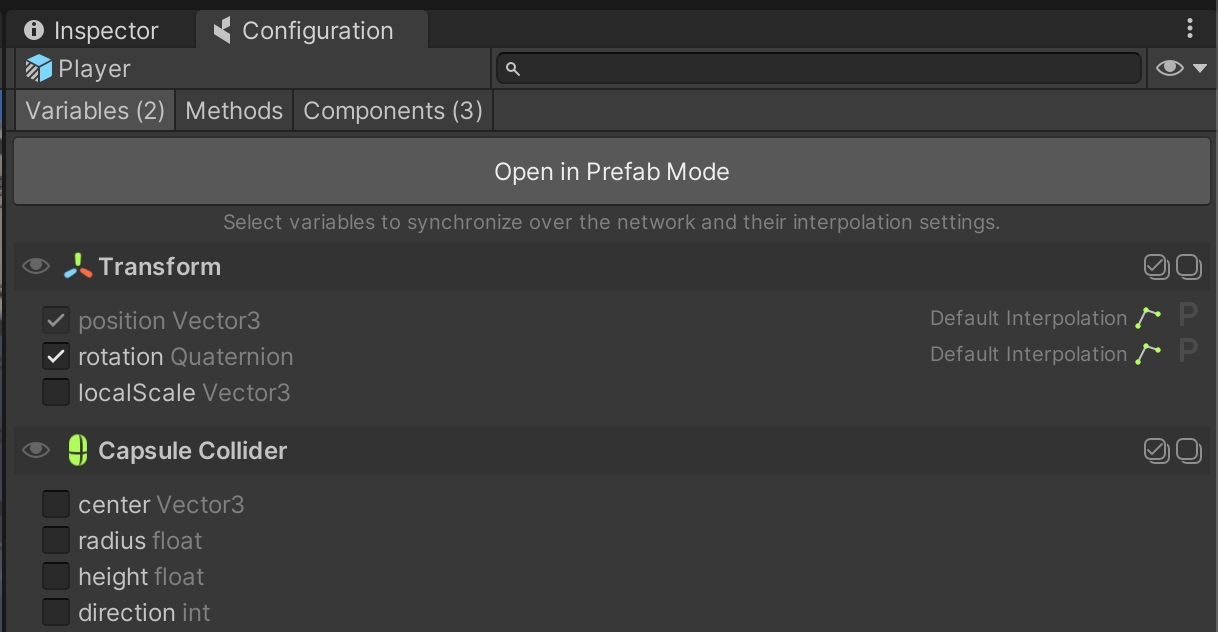

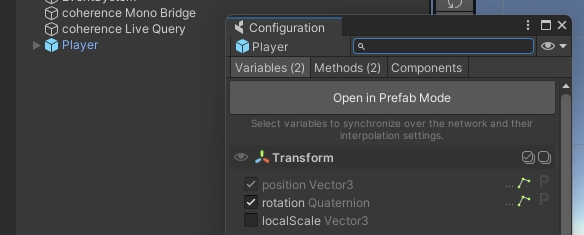

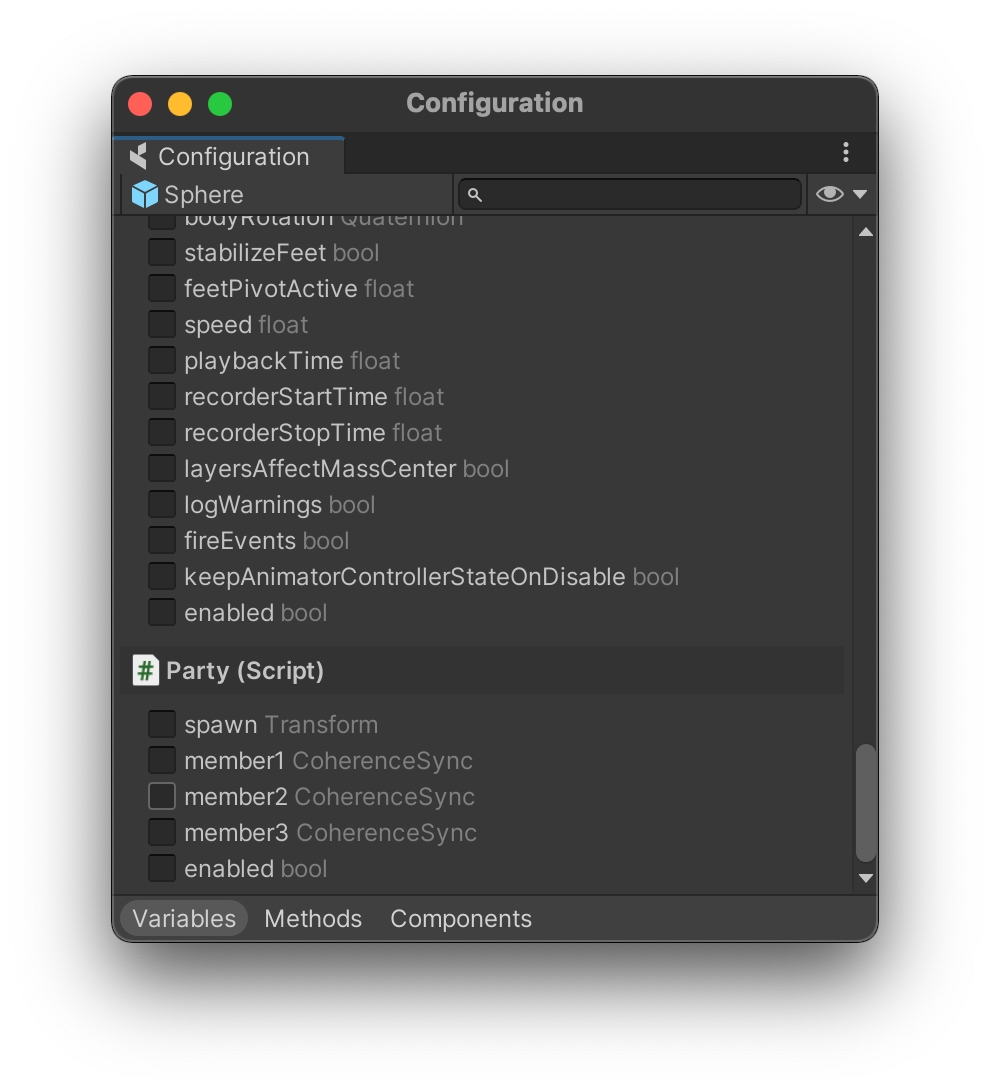

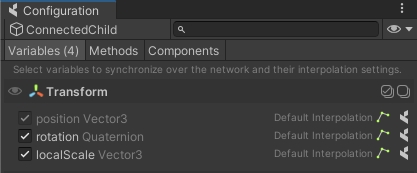

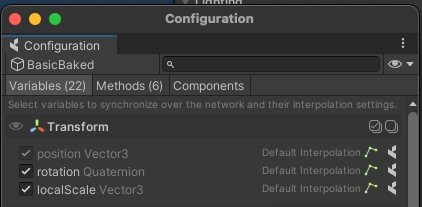

To set it up, click on the Configure button in the CoherenceSync's Inspector. This brings up the Configuration window. Here you can select which variables you would like to sync across the network:

You will notice that position is already selected, and can't be unchecked. For our use case, let's also add rotation and localScale.

Close the Configuration dialog.

Let's add a simple movement script that uses WASD or Arrow keys to move the Prefab in the scene.

Click on Assets > Create > C# Script. Name it Move.cs.

Copy-paste the following content into the file:

Wait for Unity to compile, then attach the script to the Prefab.

We have added a Move script to the Prefab. This means that if we just run the scene, we will be able to use the keyboard to move the object around.

But what happens with Prefabs belonging to other Clients? We don't have authority over them; they just need to be replicated. We don't want our keyboard input interfering with them. We just want the position coming from the network to be applied.

For this reason, we need the Move component to be disabled when the object is remote. coherence has a quick way to do this.

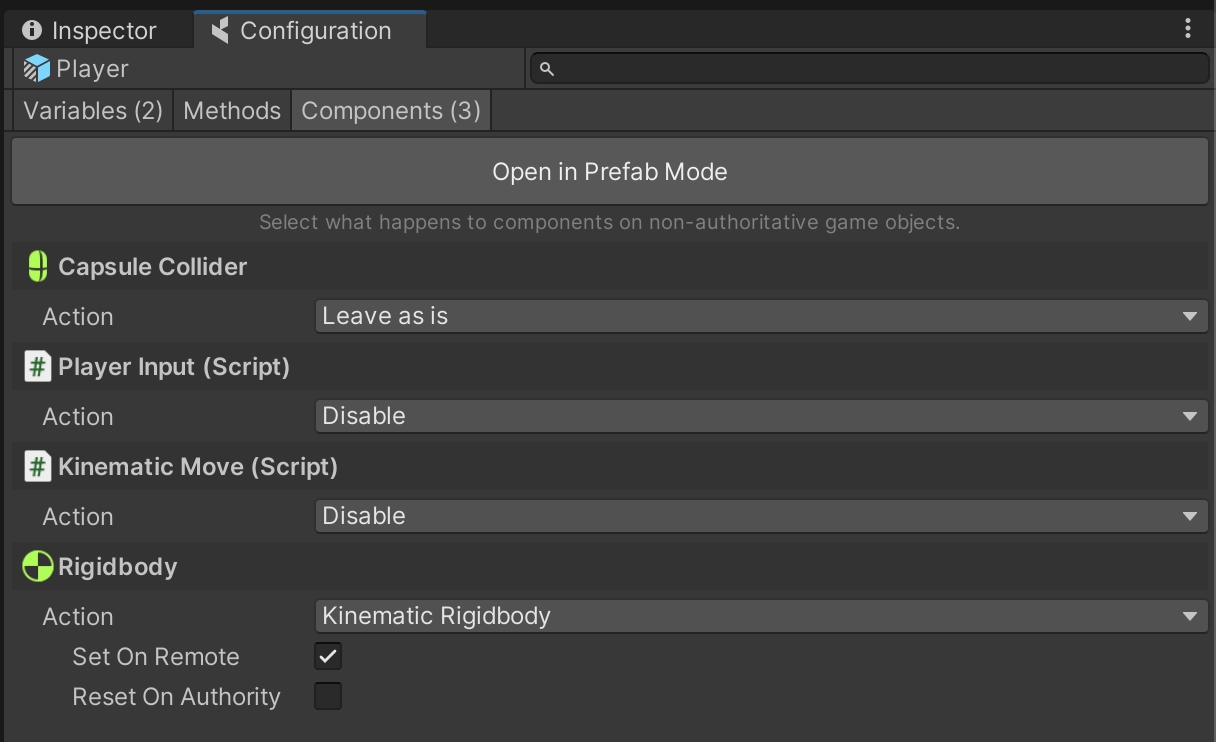

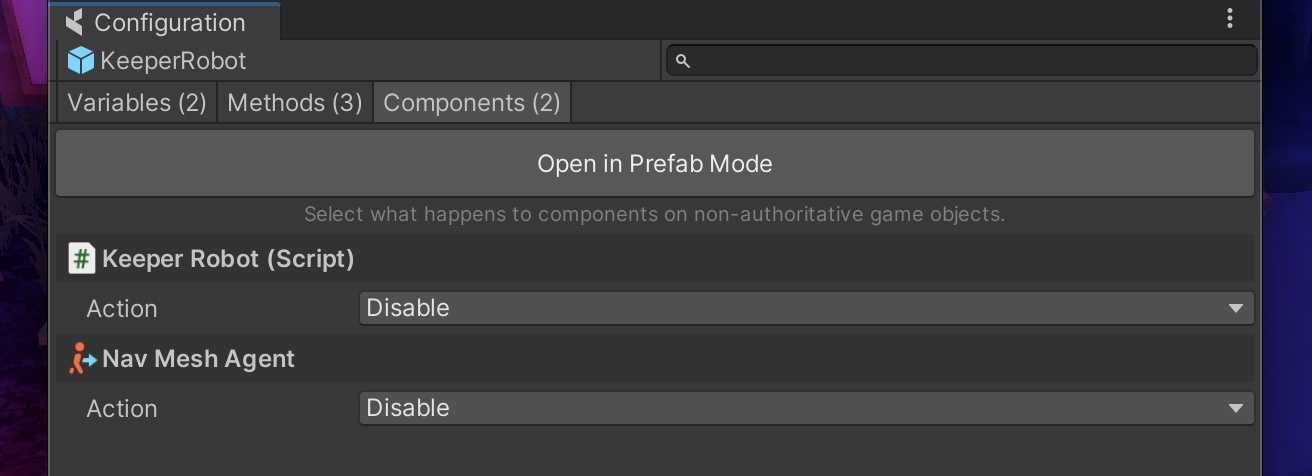

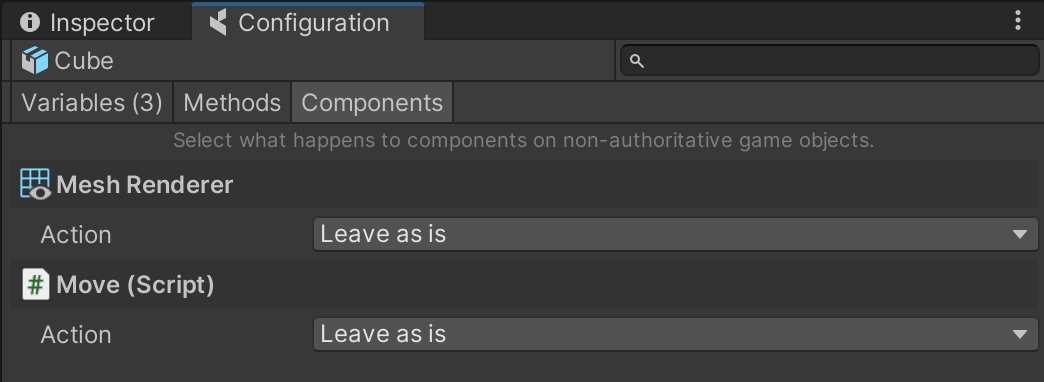

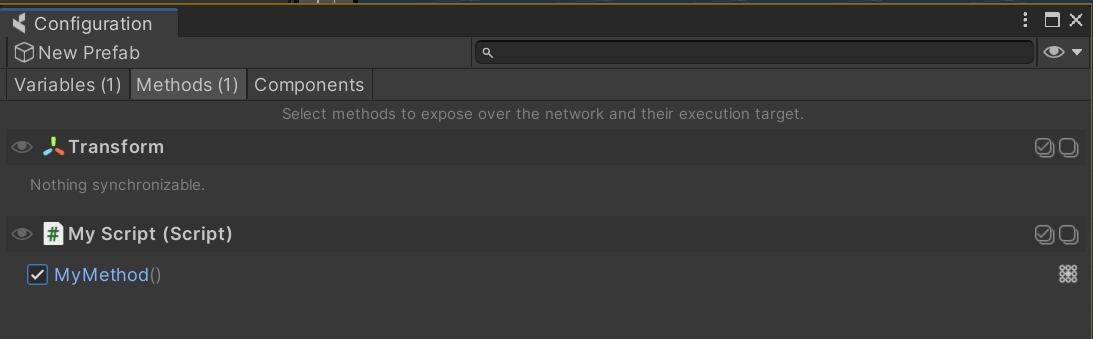

In the Configuration window, click the Components tab:

Here you will see a list of Component Actions that you can apply to non-authoritative entities that have been instantiated by coherence over the network.

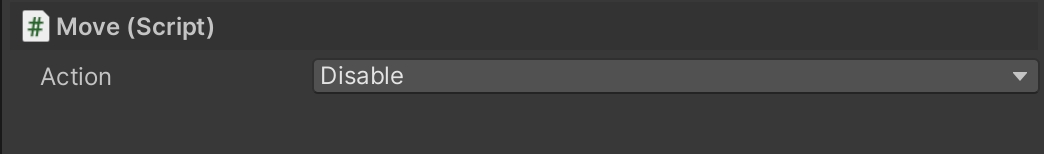

Selecting Disable for your Move script will make sure the Component is disabled for network instances of the Prefab:

This ensures that if a copy of this Prefab is instantiated on a Client with no authority, this script will be disabled and won't interfere with the position that is being synced.
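The effect of a Component Action can be modelled in a few lines. The Python sketch below is a conceptual stand-in, not Unity or coherence code: `Component`, `apply_component_actions` and the `"disable"` action string are hypothetical names chosen to mirror the behaviour described above.

```python
from dataclasses import dataclass


@dataclass
class Component:
    name: str
    enabled: bool = True


def apply_component_actions(components, actions: dict, has_authority: bool):
    """Disable the listed components, but only on instances without authority."""
    if has_authority:
        return components  # the local, authoritative instance keeps everything enabled
    for component in components:
        if actions.get(component.name) == "disable":
            component.enabled = False
    return components


if __name__ == "__main__":
    actions = {"Move": "disable"}
    local = apply_component_actions([Component("Move")], actions, has_authority=True)
    remote = apply_component_actions([Component("Move")], actions, has_authority=False)
    print(local[0].enabled, remote[0].enabled)
```

On the authoritative instance the Move component stays active and reads input; on every remote copy it is switched off, so only the synced position drives the object.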

This process is very quick, and can be done in different ways:

From the menu item coherence > Bake

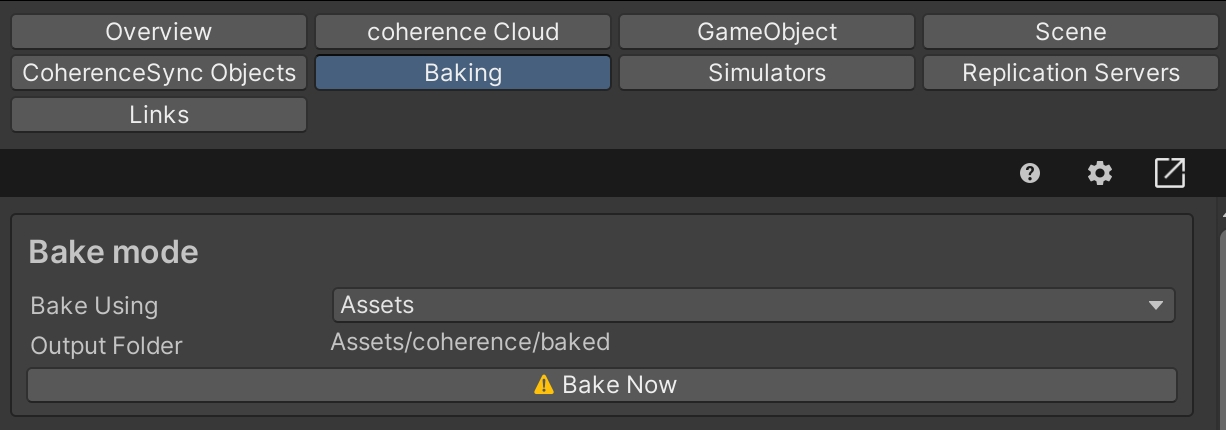

Within the coherence Hub, in the Baking tab, using Bake Now:

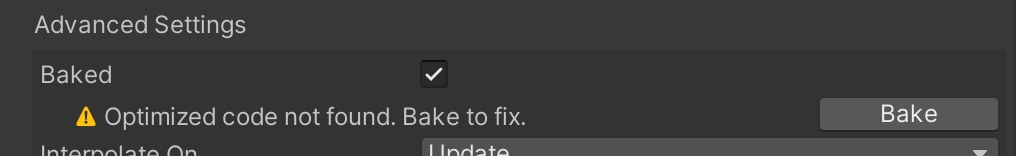

When a Prefab contains changes that need baking, its Inspector will warn you. Pressing Bake here will actually bake all code for all Prefabs:

To recap

This is it! Setting up an object to be networked doesn't require additional steps:

A Prefab with a CoherenceSync on it

Configuring what to sync in the Configure window

Disabling components on remote entities, in the Configure > Components tab

Baking the netcode

ParrelSync is an open-source project which allows you to open multiple Unity Editor instances, all pointing to the same Unity project (using symbolic links).

Pros

Short iteration times

Easy to debug since every client is an Editor

Works with Unity versions prior to Unity 6

Cons

Can be more resource demanding than just running builds

Each clone requires the whole project to be duplicated on disk (1 clone means 2x the disk space, and so on). This might be a lot for huge projects.

Install ParrelSync as described in their installation instructions

UPM Package installation is preferred as coherence supports it out-of-the-box

If installed via .unitypackage, you need to set by yourself. One way is by adding the following script to an Editor folder in your project:

Open ParrelSync > Clones Manager. Create a new clone, and open it

Continue development in the main Editor. Don't edit files in clone Editors

Enter Play Mode in each Editor

coherence tells apart ParrelSync clones from the main Editor, so it's easier for you to not edit assets in clones by mistake.

A collection of frequently asked questions, and where to find the answers

All Clients have to connect to a Replication Server, which can or .

There is no specific place to designate a special . Any script can create it, by just instantiating a Prefab.

Spawning is done by simply instantiating a Prefab that has a CoherenceSync, using the Instantiate() API. Same for removing entities, using the Destroy() API. Read more about .

Syncing individual values of the can simply be done using the . If a type is not supported, you can send the value using a as an index, or by reducing it to a byte array, and reconstructing it on arrival.
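Reducing a value to a byte array and reconstructing it on arrival is a general serialization technique. The Python sketch below shows the round trip for a made-up "inventory item" made of an ID, a durability value and a name. The field layout and function names are assumptions for illustration; in a Unity project you would write the equivalent in C#.

```python
import struct

# Fixed-size header: item id (uint16), durability (float32), name length (uint8).
_HEADER = struct.Struct("<HfB")


def pack_item(item_id: int, durability: float, name: str) -> bytes:
    """Serialize a hypothetical inventory item into a compact byte array."""
    encoded = name.encode("utf-8")
    if len(encoded) > 255:
        raise ValueError("name is too long for a one-byte length prefix")
    return _HEADER.pack(item_id, durability, len(encoded)) + encoded


def unpack_item(data: bytes):
    """Rebuild the item from the byte array produced by pack_item."""
    item_id, durability, name_length = _HEADER.unpack_from(data, 0)
    start = _HEADER.size
    name = data[start:start + name_length].decode("utf-8")
    return item_id, durability, name


if __name__ == "__main__":
    payload = pack_item(7, 0.5, "sword")
    print(len(payload), unpack_item(payload))
```

Both sides must agree on the layout, so it is worth keeping the packing and unpacking code next to each other.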

provide a view on who is connected at any given time.

Now that we have tested our project locally, it's time to upload it to the cloud and share it with our friends and colleagues. To be able to do that, we need to create an account with coherence.

At this point, you can create a free account, which will grant you a number of credits that are more than sufficient to go through developing and testing your game in the cloud.

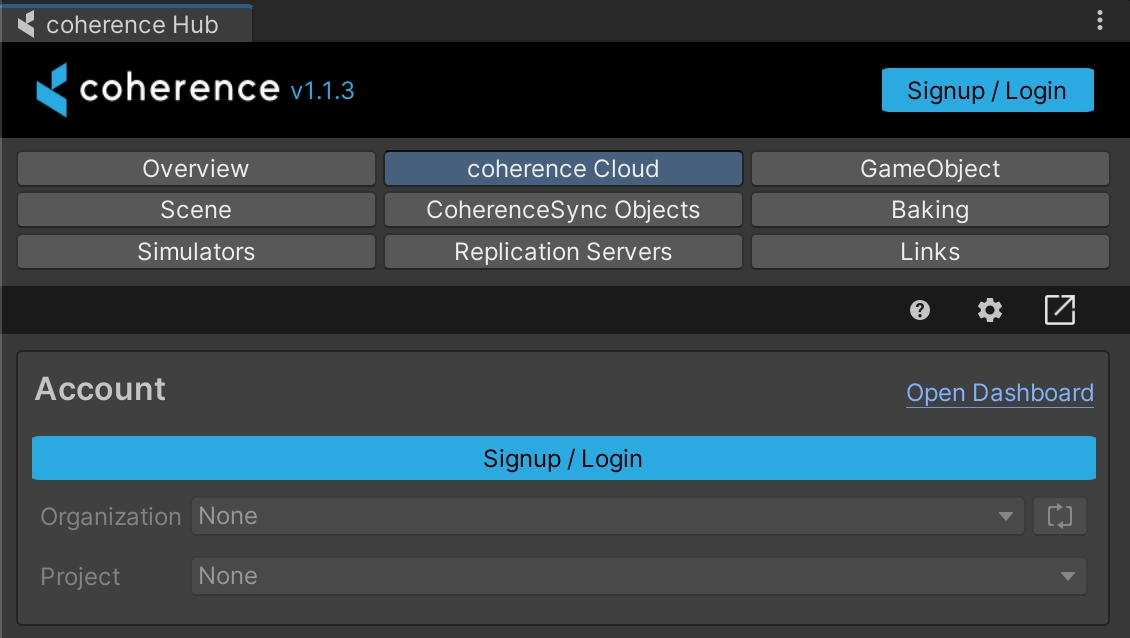

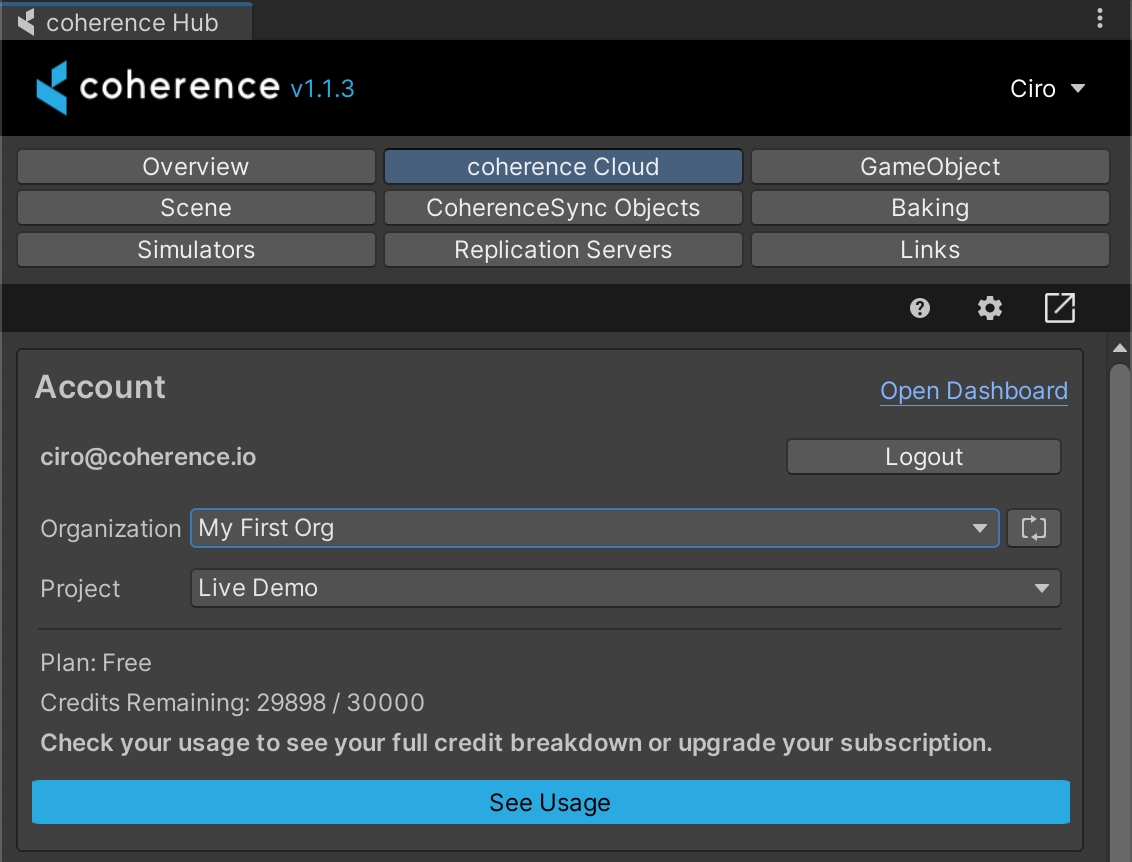

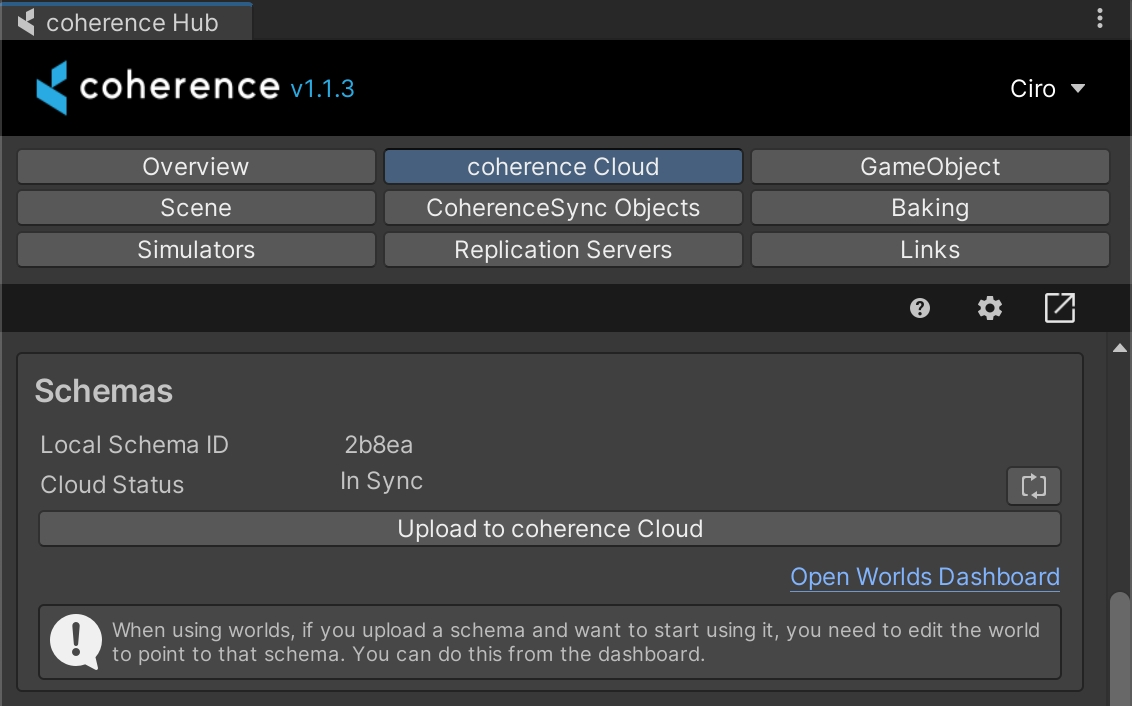

Open the coherence Hub window. Then open the coherence Cloud tab.

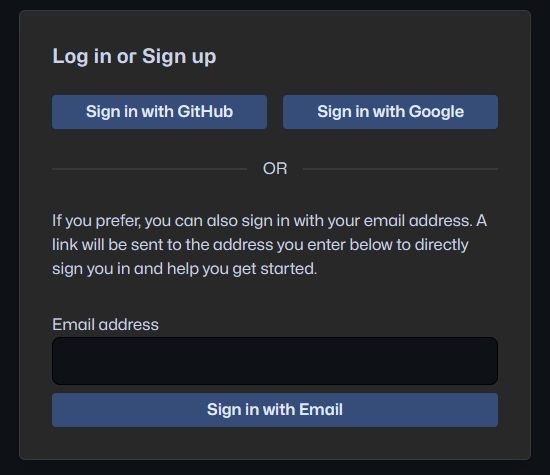

After pressing Login you will be taken to the login page. Simply log in as usual, and return to Unity.

You are now logged into the Portal through Unity. Select the correct Organization and Project, and you are ready to start creating.

To recap

We created a coherence account and connected it in the Unity Editor, so now we can see our organizations and projects directly within the Editor and link to them.

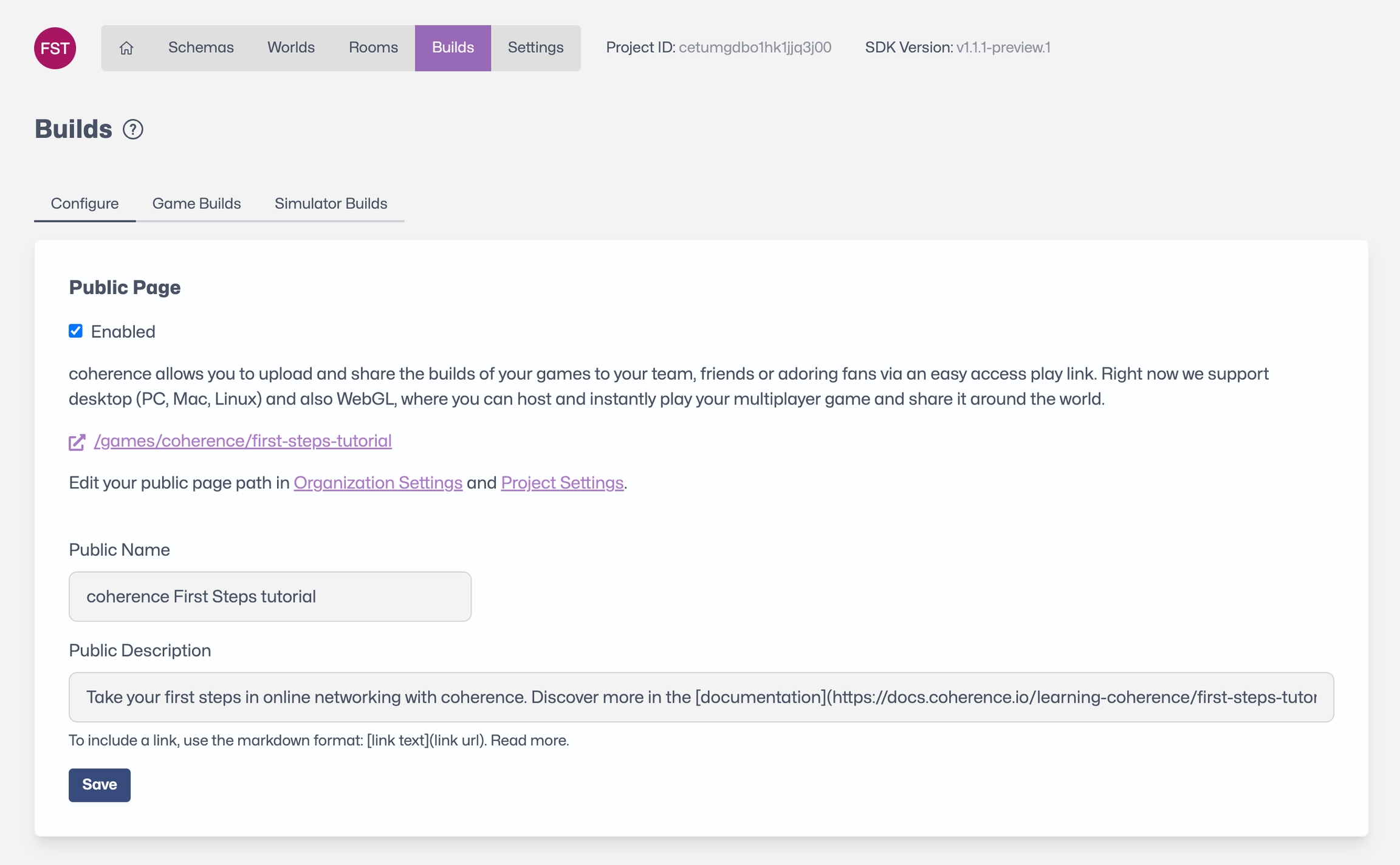

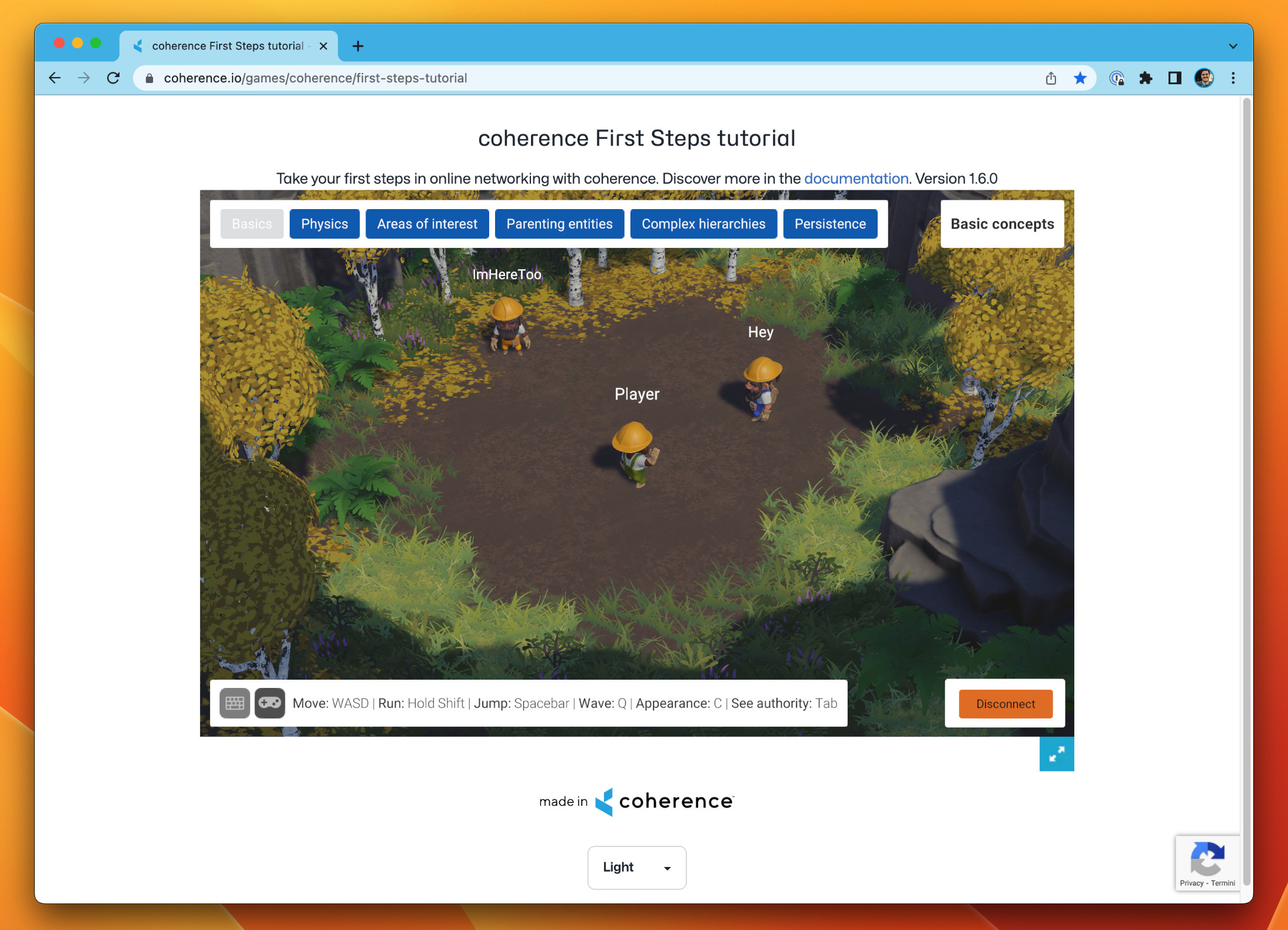

coherence allows you to upload builds of your games and share them with your team, friends or adoring fans via an easy-access play link.

Right now we support desktop (PC, Mac, Linux) and also WebGL, where you can host and instantly play your multiplayer game and share it around the world.

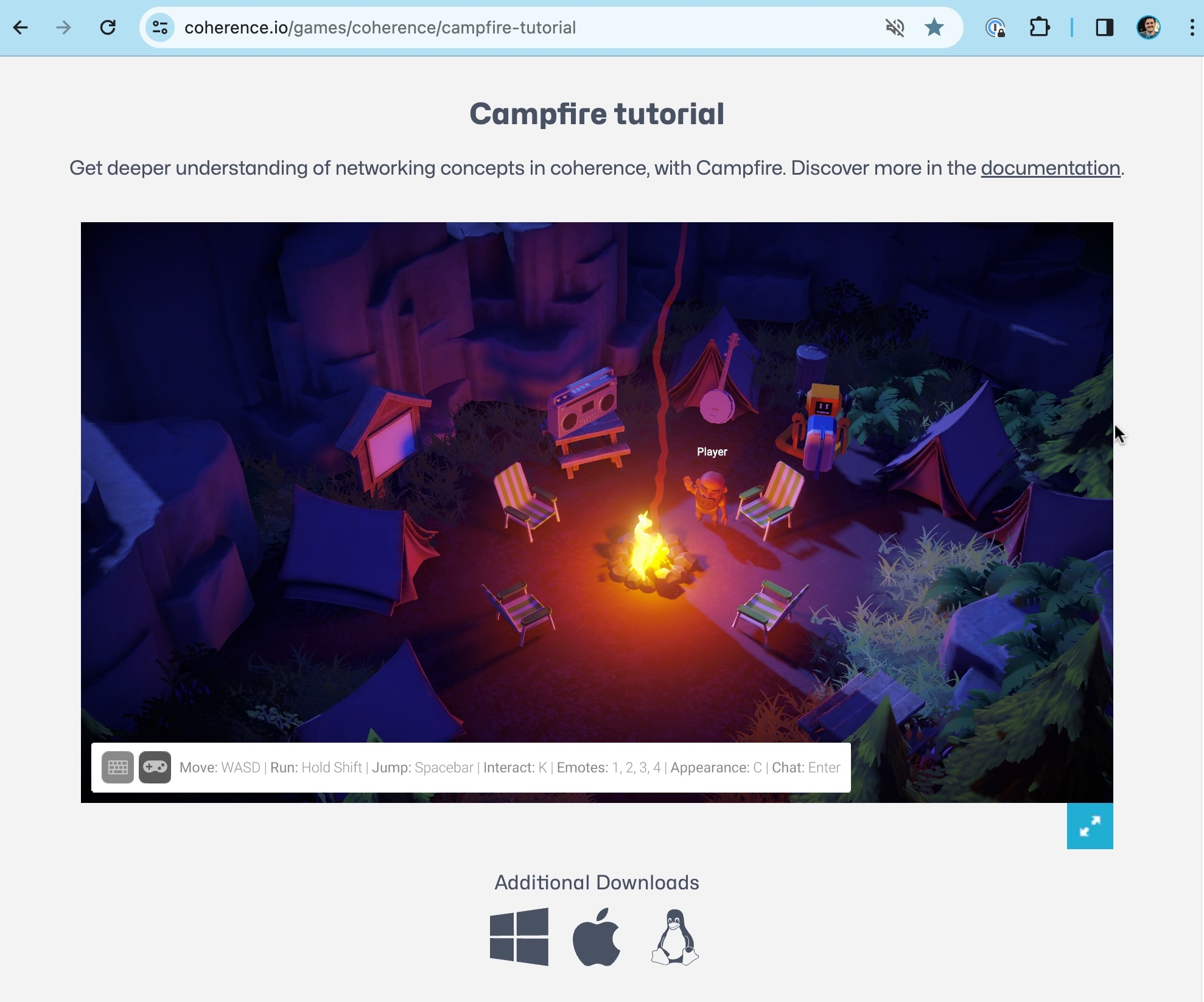

If you want an example of WebGL builds, try out our sample projects First Steps or Campfire (make sure to use Chrome!)

First, you need to build your game to a local folder on your computer as you normally would. Make sure to bake before doing so!

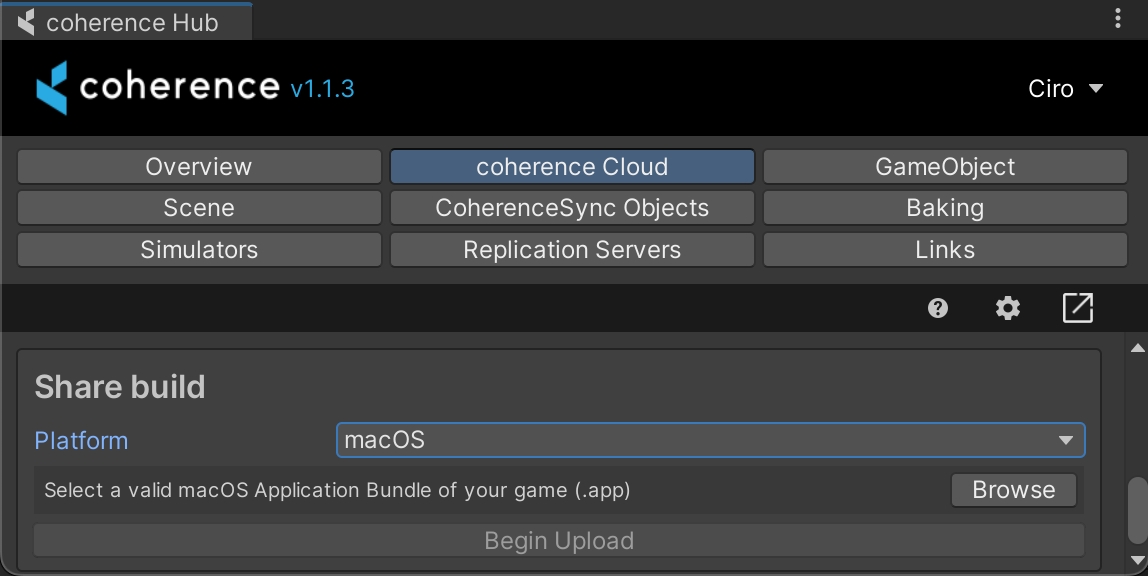

In the coherence Hub window, select the coherence Cloud tab.

You can upload your build from the Share Build section of the tab. Select the platform, browse for the previously-created build, and click on the Begin Upload button.

Now that the build has been uploaded, you can share it by enabling and sharing the public URL on the coherence Cloud Dashboard:

Here you can customise the page to a degree. Don't forget to include instructions in the description, if your game doesn't have any!

By unchecking the Enabled option, you can obscure the page altogether, without having to remove builds.

Click on the Game Builds tab to manage builds for different platforms.

If you uploaded a WebGL build, the public link now allows for instant play directly in the browser:

If you uploaded builds for other platforms, they will be downloadable by clicking on the icons right below the WebGL build.

That's it! You made and shared a multiplayer game, hosted in the cloud. It may be simple for now, but with the technical aspects out of the way, you can focus on fun gameplay.

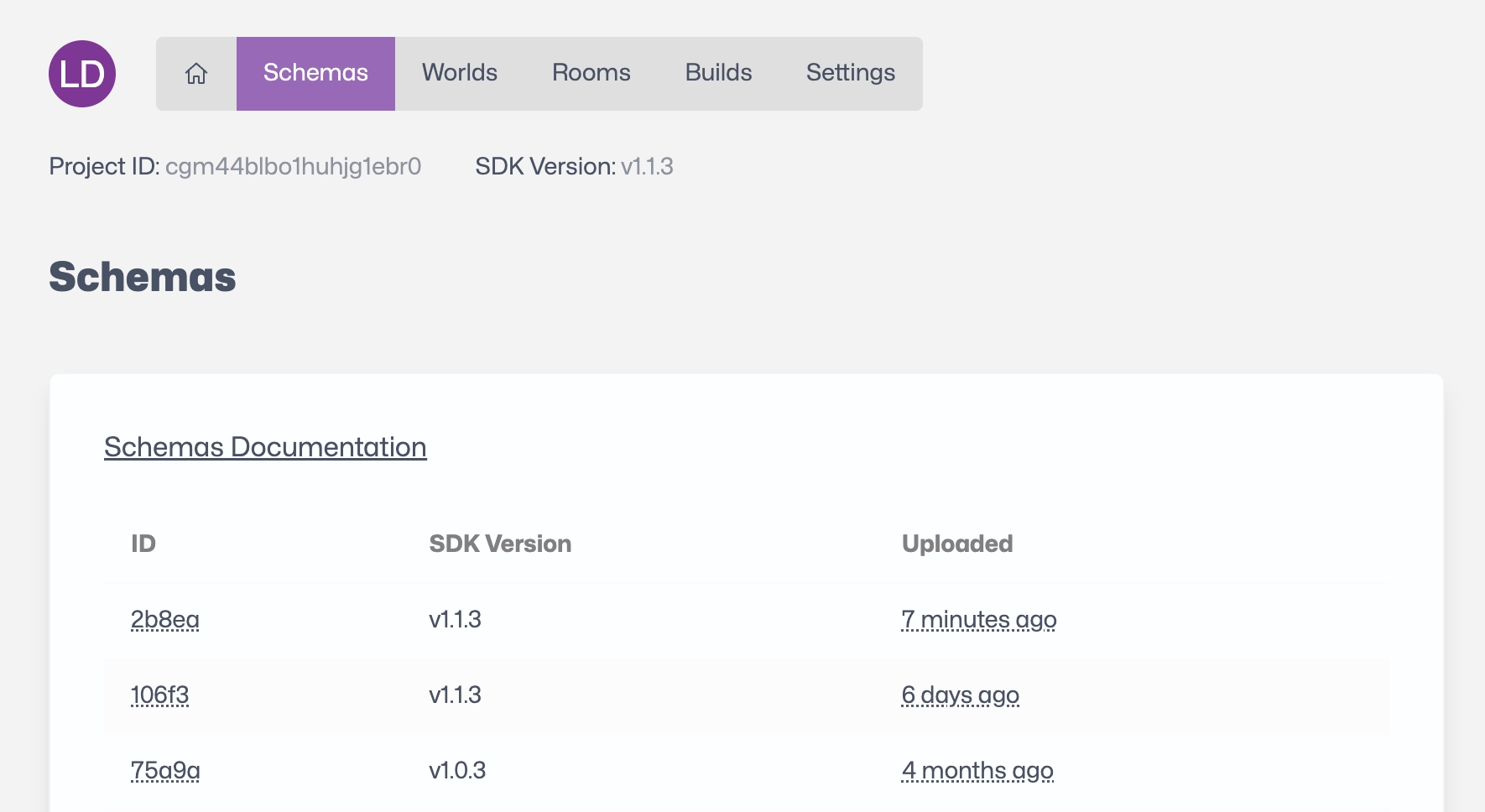

Now we can finally deploy our schema and Replication Server to the coherence Cloud.

In this example we're working with Worlds. Make sure you have created a World before trying to deploy the Replication Server. To create a World, follow the steps described in .

The topics on this page start from around 1:00 in the video below:

In the coherence Hub window, select the coherence Cloud tab, and click on Upload to coherence Cloud in the Schemas section.

The Cloud Status in the Schemas section should now be In Sync.

Your project schema is now deployed with the correct version of the Replication Server already running in the cloud. You will be able to see this in your cloud dashboard status.

You can now build the project and send the build to friends or colleagues for testing.

If you used one of the Connection Dialog samples, once you play the game it will fetch all the regions available for your project. This depends on the project configuration (e.g., the regions that you have selected for your project in the Dashboard).

You will be able to play over the internet without worrying about firewalls and local network connections.

Select a folder (e.g. Builds) and click OK.

You will notice it because there will be a little chef's hat next to the coherence folder, or a warning sign on Bake buttons:

The steps below all do this, but from different starting points: a new (1a), a (1b), or a (1c).

First, ensure you enter , as we don't want to add the component as an override.

Ensure you're in Isolation Prefab editing mode, not In Context. Read about .

Learn how to create and use Prefab variants in the .

Its Inspector has quite a number of settings. For more information on them, refer to .

Note that you can configure variables, methods and components not only on the root, but also .

Once everything is set up, make sure to run the baking process: coherence will produce the necessary netcode (i.e. a bunch of C# scripts) to ensure that when the game is running and the Client connects, all of the properties and network messages you might have configured will correctly sync.

Now let's run this setup or using the .

Make sure the baked data is up-to-date before starting to test, and that the Replication Server is running with the latest generated schema

Messages (or RPCs) in coherence are called Network Commands. We also have a video on .

To make all connected Clients move to a new scene, you can create a method that loads the scene and mark it as a Network Command. Then, from one of the Clients, you would send that Network Command to all others (and to itself) by selecting MessageTarget.All as the destination.

Servers in coherence are called Simulators. You can also watch an .

To allow client-side prediction, start by on the properties you want to do prediction for. Implement your prediction code, and listen for incoming updates by . When the update arrives, you can reconcile the predicted state and the new, incoming sample in a way that fits your game best.
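The reconciliation step can take many forms. As one possible approach, the Python sketch below blends a predicted value towards the incoming sample when the error is small and snaps to it when the error is large. It is engine-independent and not coherence-specific; the function name, the threshold and the blend factor are hypothetical values you would tune for your own game.

```python
def reconcile(predicted: float, authoritative: float,
              snap_threshold: float = 2.0, blend: float = 0.2) -> float:
    """Merge a locally predicted value with an incoming authoritative sample."""
    error = authoritative - predicted
    if abs(error) > snap_threshold:
        # The prediction drifted too far: jump straight to the incoming value.
        return authoritative
    # Small error: move part of the way so that the correction is not visible.
    return predicted + error * blend


if __name__ == "__main__":
    print(reconcile(10.0, 20.0))  # large error, snaps to the sample
    print(reconcile(10.0, 11.0))  # small error, moves a fraction of the way
```

Calling `reconcile` once per incoming update, per predicted axis or property, gradually removes small prediction errors without visible popping.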

To create a functionality, you can leverage Network Commands to send strings (or byte arrays) to all connected Clients.

A pre-game chat can also be created by leveraging .

In your web browser, navigate to . Create an account or log into an existing one.

As a next step in the sub-pages of this section we'll see how to in the cloud, and how to .

We recommend heading to our Samples and Tutorials section, dive or watch some , to learn all about deeper topics.

If the status does not say "In Sync", or if you encounter any other issues with the server interface, refer to the section.

For quick and easy testing, we suggest trying out . Anyone with the link can then try the build in a browser.

Once you follow the instructions to install the coherence SDK package for Unity, you will be able to explore the package Samples with no additional download. You can either:

Go to: Coherence > Explore Samples

Open Unity's Package Manager (Window > Package Manager) and navigate to the package samples

Note that the Samples are meant to be self-explanatory, so they come with no documentation.

The scene shows up all magenta!

If, once you import the samples, the scenes show up magenta/pink, it's because the samples are made for the built-in pipeline and your project is using either URP or HDRP.

To fix this in URP, go to: Window > Rendering > Render Pipeline Converter

Click on the checkboxes to choose what to convert (Materials is necessary), then click the Initialize and Convert button. After a brief loading, you should see the example scenes displayed correctly.

For more information, refer to Unity's guides for URP or for HDRP.

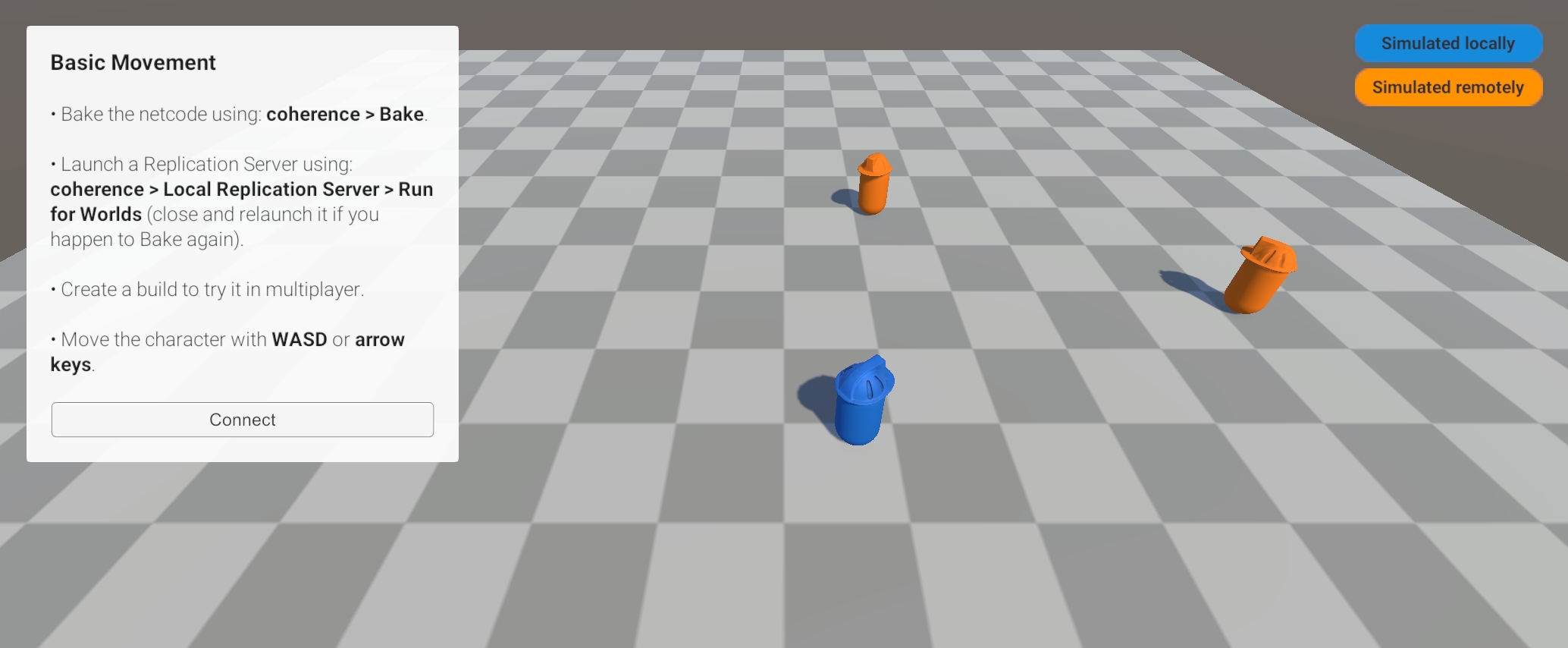

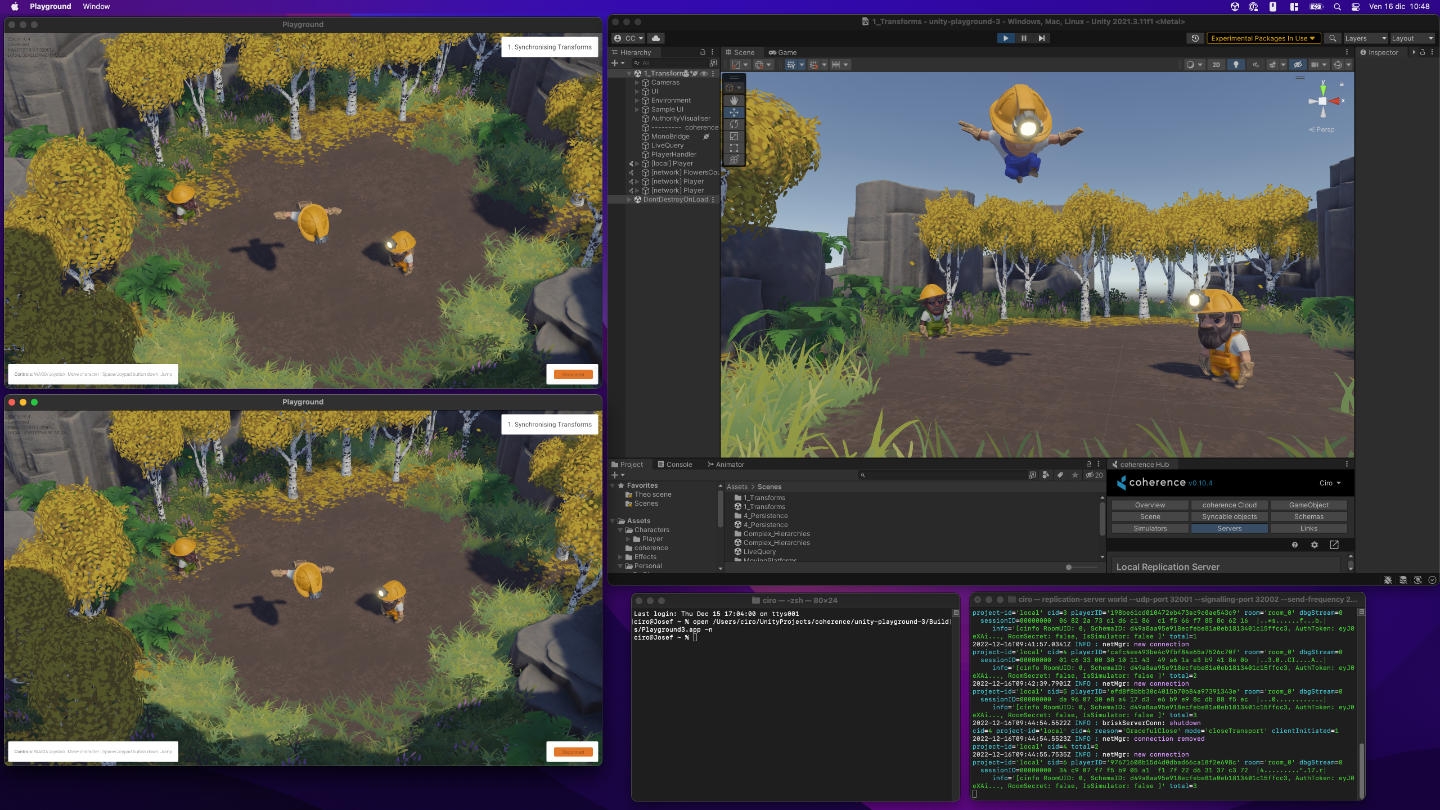

This scene demonstrates the simplest networking scenario possible with coherence. Characters sync their position and rotation, which immediately creates a feeling of presence. Someone else is connected!

CoherenceSync | Bindings | Component behaviors | Authority

WASD or Left stick: Move character

Hold Shift or Shoulder button left: Run

Spacebar or Joypad button down: Jump

Upon connecting, a script instantiates a character for you. Now you can move and jump around, and you will see other characters move too.

To be able to connect, you also need to run a local Replication Server, which can be started via coherence > Local Replication Server > Run for Worlds.

coherence takes care of keeping network entities in sync on all Clients. When another Client connects, an instance of your character is instantiated in their scene, and an instance of their character is instantiated into yours. We refer to this as network instantiation.

When you click Connect in the sample UI, the CoherenceBridge opens a connection. The PlayerHandler GameObject on the root of the hierarchy controls character instantiation by responding to that connection event.

Its PlayerHandler script implements something like this:

On connection, a character is created. On disconnection, the same script destroys the character's instance. Note how instantiating and removing a network entity is done just with regular Unity Instantiate and Destroy.
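As a rough, engine-free sketch of that flow (not the sample's actual PlayerHandler code), the listing below uses hypothetical stand-ins: `FakeBridge` plays the role of the connection events raised by the CoherenceBridge, and the two delegates stand in for Unity's Instantiate and Destroy. All names here are assumptions made for illustration.

```csharp
using System;

// Hypothetical stand-in for the connection events raised by CoherenceBridge.
public sealed class FakeBridge
{
    public event Action Connected;
    public event Action Disconnected;

    public void Connect() => Connected?.Invoke();
    public void Disconnect() => Disconnected?.Invoke();
}

// Mirrors the described flow: spawn a character on connect, destroy it on disconnect.
public sealed class PlayerHandlerSketch
{
    private readonly Func<object> _instantiate;  // stands in for Unity's Instantiate
    private readonly Action<object> _destroy;    // stands in for Unity's Destroy

    public object Player { get; private set; }

    public PlayerHandlerSketch(FakeBridge bridge, Func<object> instantiate, Action<object> destroy)
    {
        _instantiate = instantiate;
        _destroy = destroy;
        bridge.Connected += OnConnected;
        bridge.Disconnected += OnDisconnected;
    }

    private void OnConnected()
    {
        // Guard against spawning twice if the event fires more than once.
        if (Player == null) Player = _instantiate();
    }

    private void OnDisconnected()
    {
        if (Player == null) return;
        _destroy(Player);
        Player = null;
    }
}
```

The point of the pattern is that the handler never talks to the network directly: it only reacts to connection events and creates or removes a regular object.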

Now let's take a look at the Prefab that is being instantiated. You can find it in the /Prefabs/Characters folder.

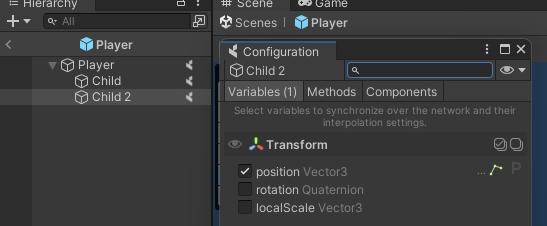

By opening coherence's Configuration window (either by clicking on the Configure button on the CoherenceSync component, or by going to coherence > GameObject Setup > Configure), you can see what is synced over the network.

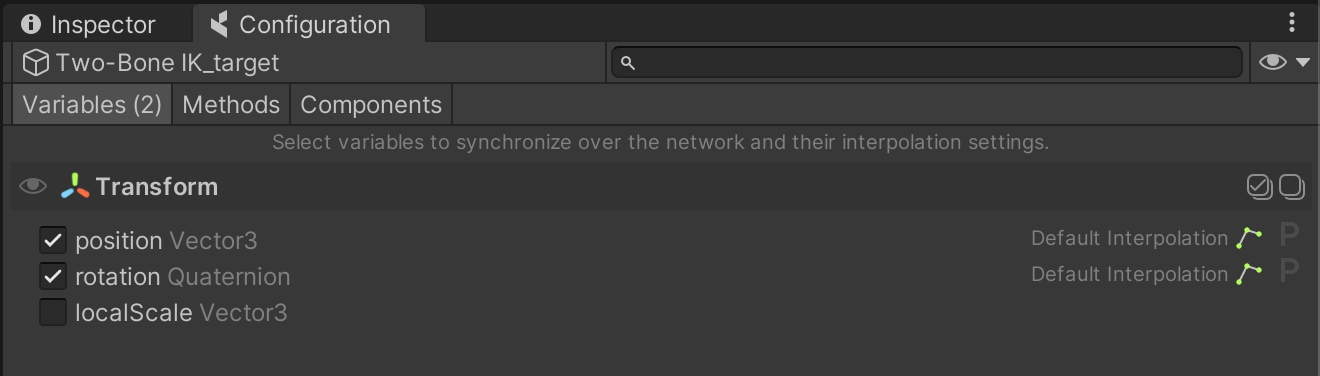

When this window opens on the Variables tab you will notice that, at the very top, Transform.position and Transform.rotation are checked:

This is the data being transferred over the network for this object. Each Client sends the position and rotation of the character that they have authority over to every other connected Client, every time there is a change to it that is significant enough. We call these bindings.

Each connected Client receives these values and applies them to the Transform component of their own instance of the remote player character.

In First Steps, all the variables are set to public by default. The network code that coherence automatically generates can only access public variables and methods, so syncing would not work if they were not public.

In your own projects, remember to always make synced variables public!

To ensure that Clients don't modify the properties of entities they don't have authority over, we need to make sure that the scripts driving those properties are not running on the character instances that are non-authoritative.

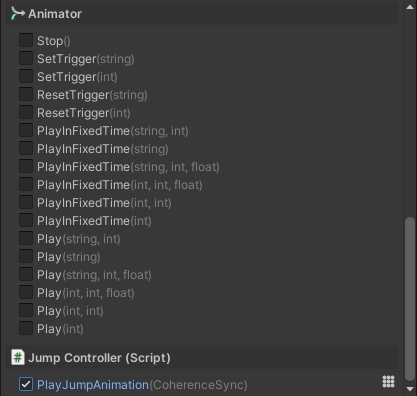

coherence offers a rapid way to make this happen. If you open the Components tab of the Configuration window, you will see that 3 components are configured to do something special:

In particular:

The PlayerInput and KinematicMove scripts get disabled.

The Rigidbody component is made kinematic.

While in Play Mode, try selecting a remote player character. You will notice that some of its scripts have been disabled by coherence:

You can learn more about Component Actions here.

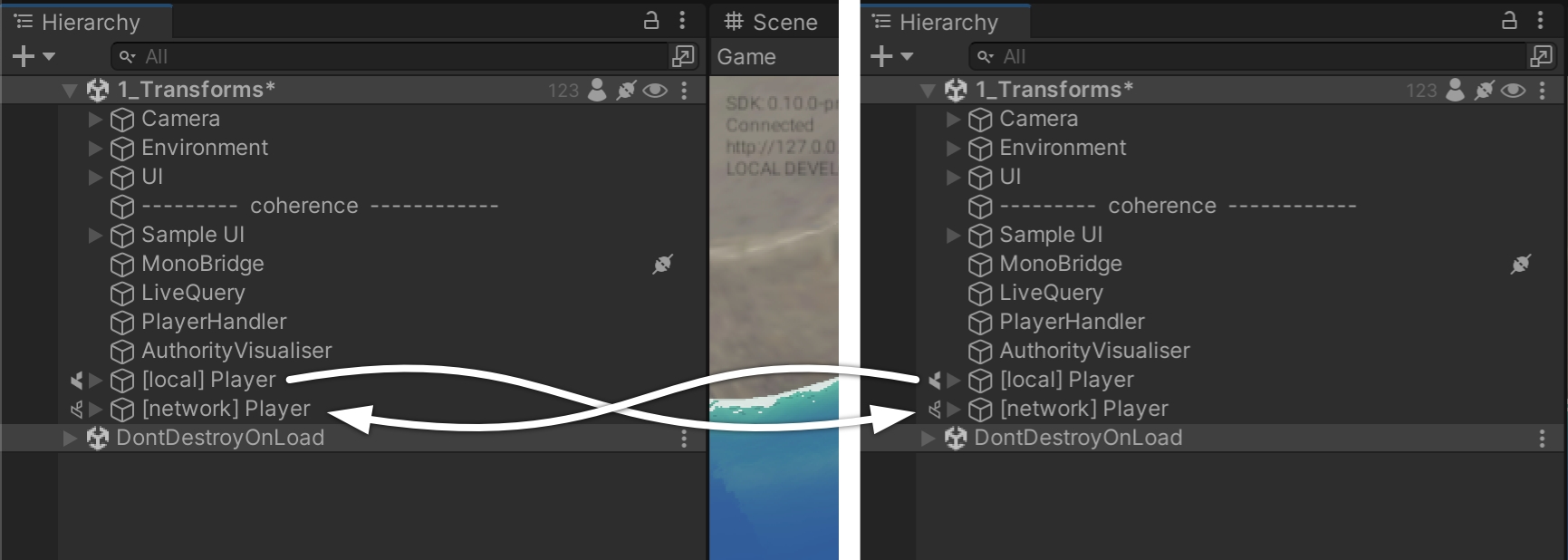

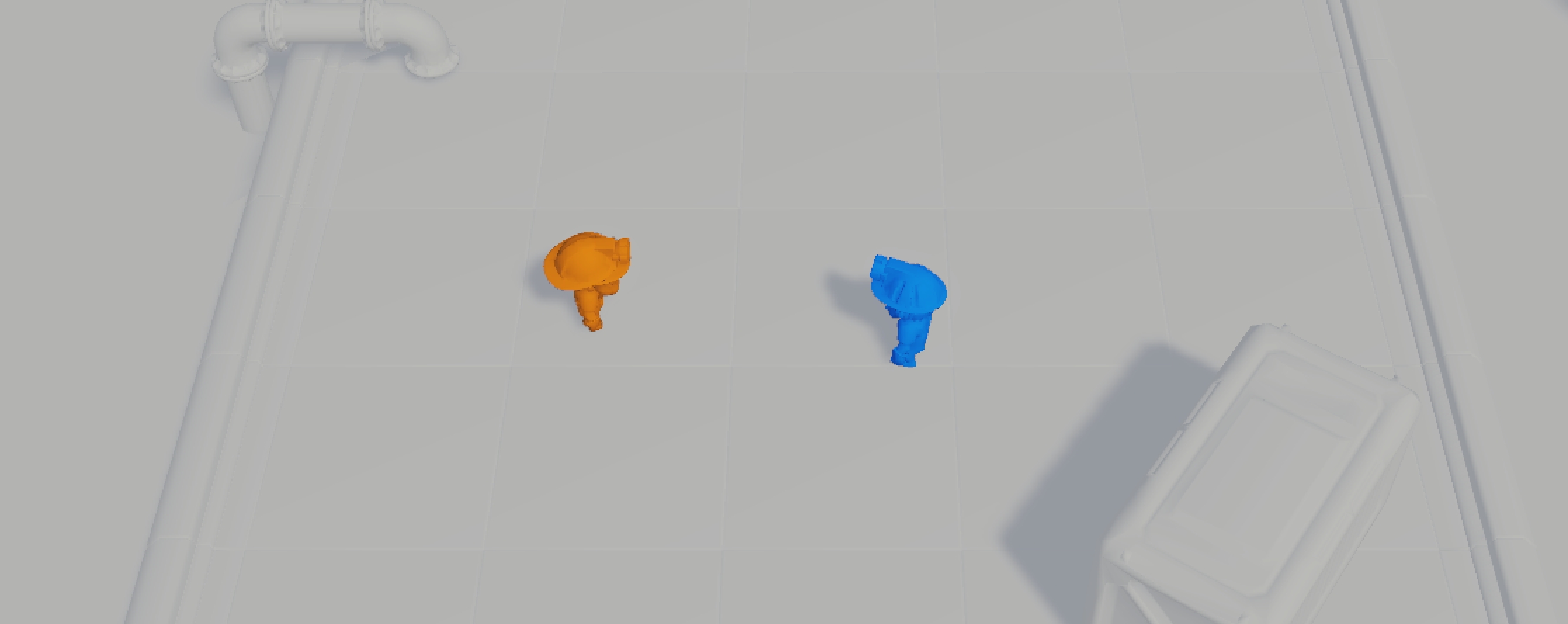

One important concept to get familiar with is the fact that every networked entity exists as a GameObject on every Client currently connected. However, only one of them has what we call authority over the network entity, and can control its synced variables.

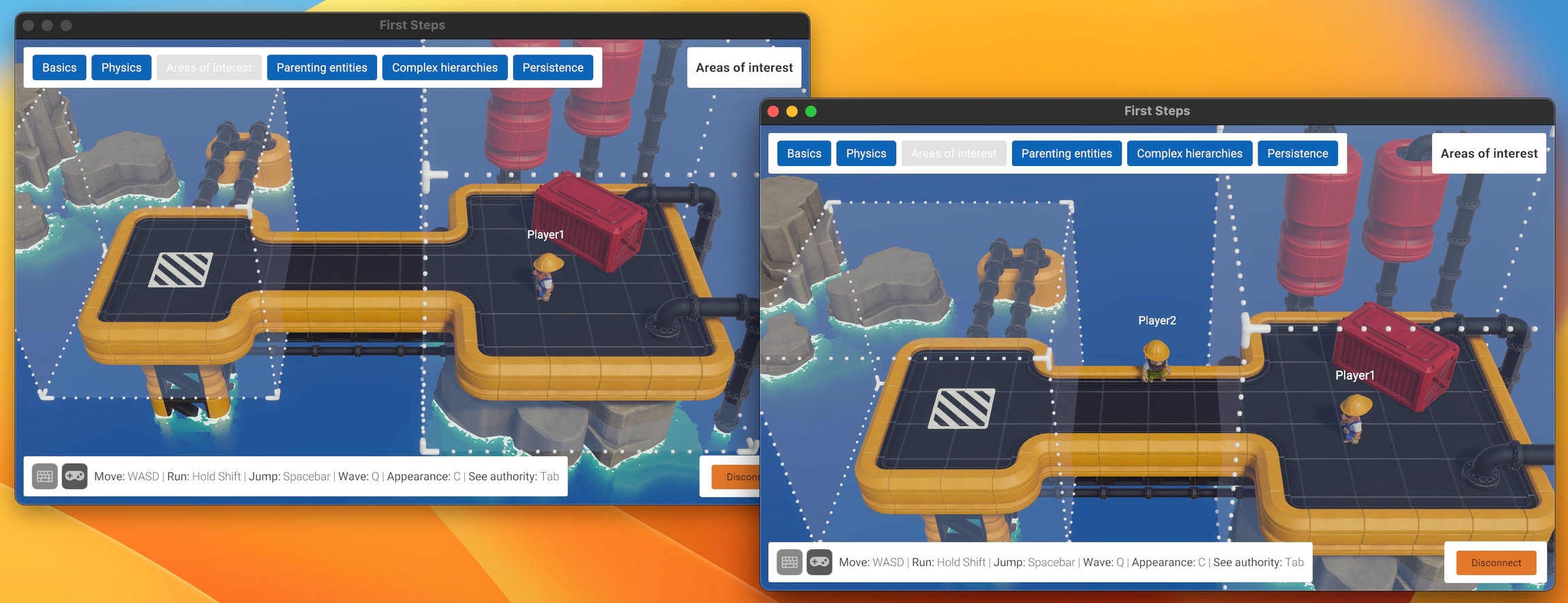

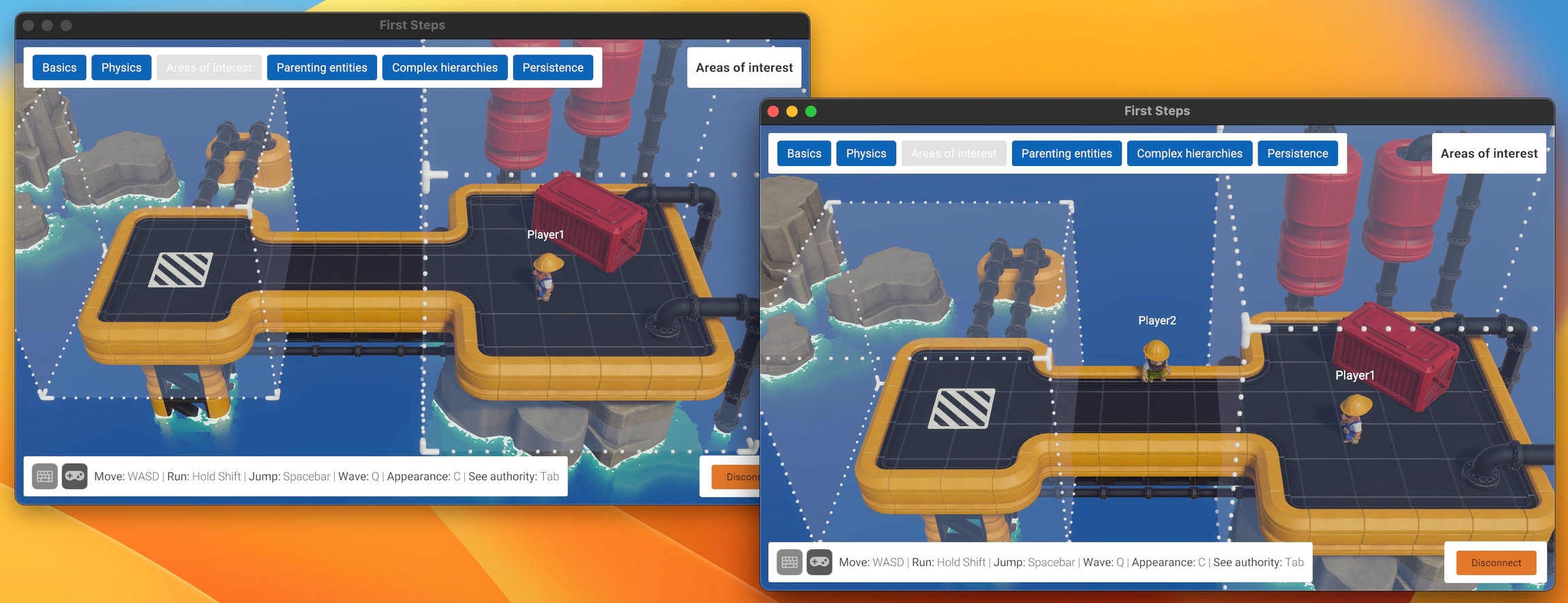

For instance, if we play this scene with two Clients connected, each one will have 2 player instances in their respective worlds:

This is something to keep in mind as you decide which components have to keep running or be disabled on remote instances, in order to not have the same code running unnecessarily on various Clients. This could create a conflict or put the two GameObjects in a very different state, generating unwanted results.

In the Unity Editor, when connected, the name of a GameObject and the icon next to it inform you about its current authority state (see image above).

There are two types of authority in coherence: State and Input. For the sake of simplicity, in this project we often refer just to a generic "authority", and what we mean is State authority. Go here for more info on authority.

If you want to see which entities are currently local and which ones are remote, we included a debug visualization in the project. Hit the Tab key (or click the Joystick) to switch to a view that shows authority. You can keep playing the game while in this view, and see how things change (try the Physics scene!).

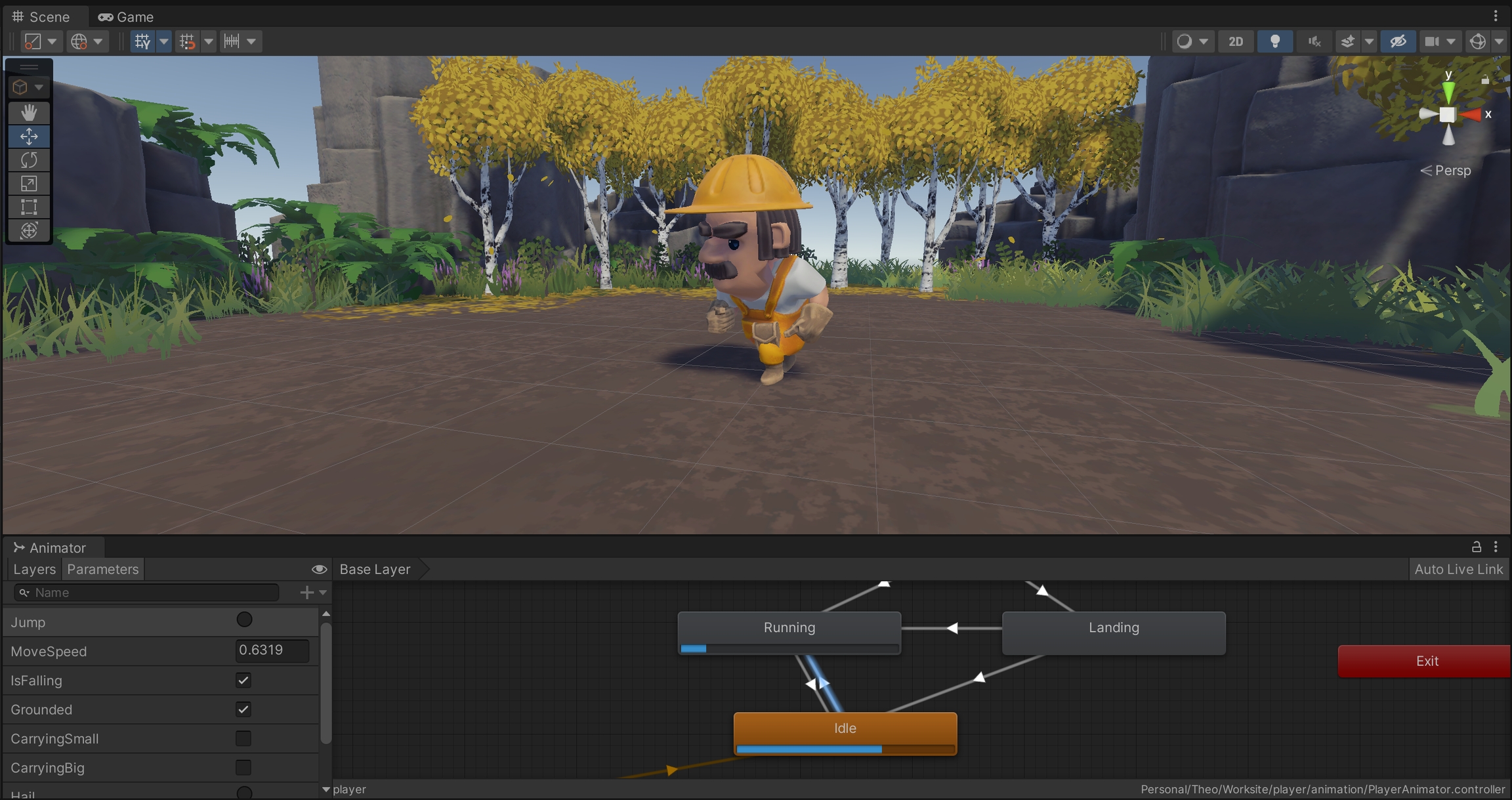

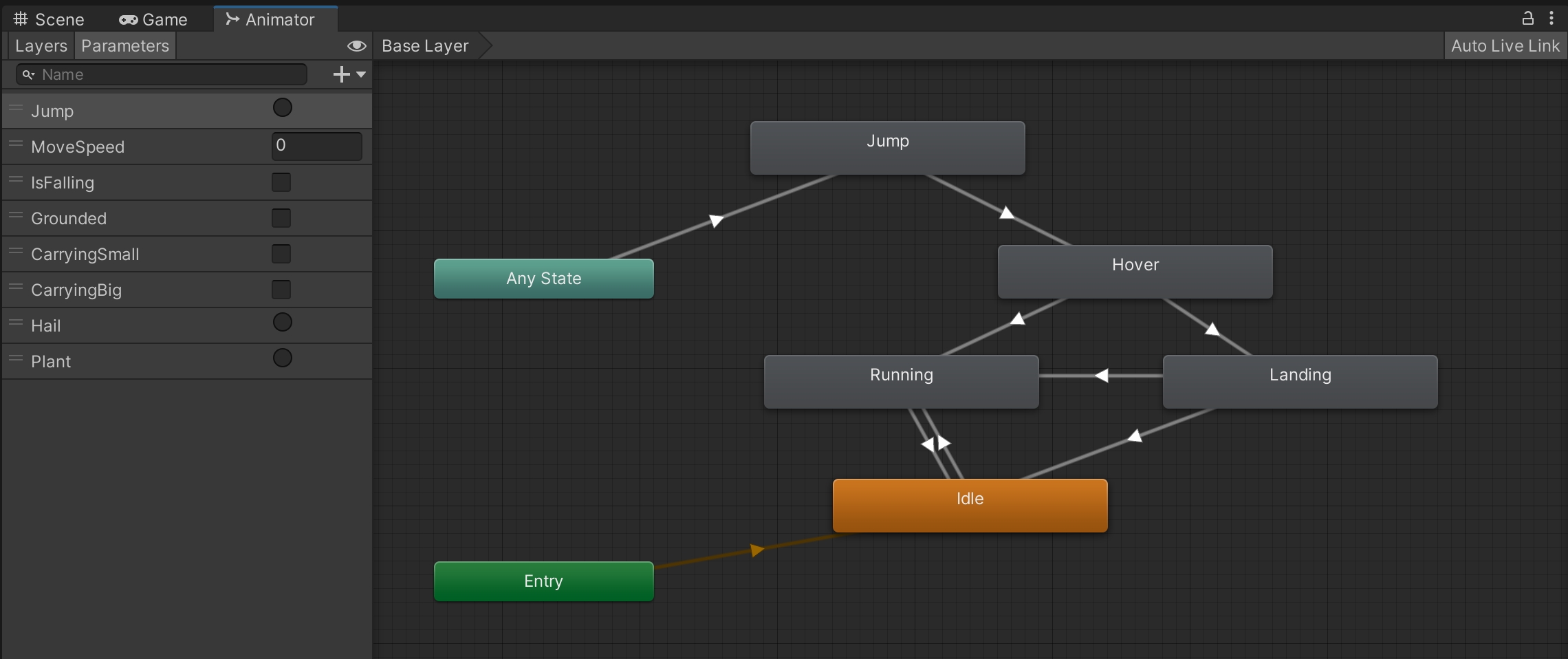

Using the same scene as in the previous lesson, let's see how to easily sync animation over the network.

Animation | Bindings

WASD or Left stick: Move character

Hold Shift or Shoulder button left: Run

Spacebar or Joypad button down: Jump

We haven't mentioned it before, but the character Prefab does a lot more than just syncing its position and rotation.

When you move around, you will notice that animation is also replicated across Clients. This is done via synced Animator parameters (and Network Commands, but we cover these in the next lesson).

Very much like in the example about position and rotation, just sending these across the network allows us to keep animation states in sync, making it look like network-instantiated Prefabs on other Clients are performing the same actions.

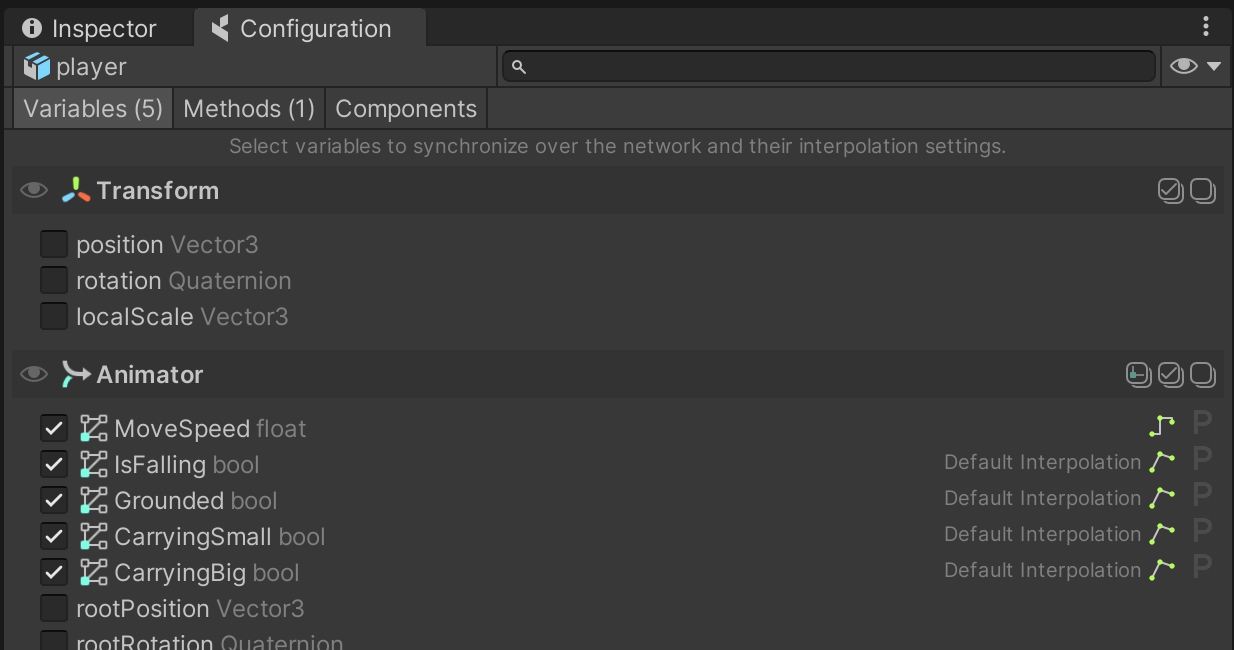

Open the player Prefab located in the /Prefabs/Characters folder. Browse its Hierarchy until you find the child GameObject called Workman. You will notice it has an Animator component.

Select this GameObject and open the Animator window.

As is usually the case, animation is controlled by a few Animator parameters of different types (int, bool, float, etc.).

Make sure to keep the GameObject with the Animator component selected, and open the coherence Configure window:

You will see that a group of animation parameters are being synced. It's that simple: just checking them will start sending the values across, once the game starts, just like other regular public properties.

Did you notice that we are able to configure bindings even if this particular GameObject doesn't have a CoherenceSync component on it? This is done via the one attached to the root of the player Prefab.

These parameters on child GameObjects are what we call deep bindings.

Learn more in the Complex hierarchies lesson, or on this page.

There is only one piece missing: animation Triggers. We use one to trigger the transition to the Jump state.

Since a Trigger is not a variable holding a value that changes over time, but rather an action that happens instantaneously, we can't just enable it in the Configuration window like we do with other Animator parameters. We will see how to sync Triggers in the next lesson, using Network Commands.

If you prefer to be hands-on, we recommend you start by exploring the package Samples included right inside the Unity SDK. Or download one of our pre-made Unity projects First Steps or Campfire, which both come with great documentation explaining the thinking behind them.

Finally, if you're new to networking and you want to read more about the fundamentals, you might enjoy our Beginner's Guide to Networking. This high-level intro is not coherence-specific, but rather is applicable to any networking technology.

The basics of coherence

The First Steps project contains a series of small sample scenes, each one demonstrating one or more features of coherence.

If you're a first-time user, we suggest going through the scenes in the established order. They will guide you through some key coherence and networking concepts:

Remember that playing the scenes on your own only shows part of the picture. To fully experience the networked aspects, you have to play in one or more built instances alongside the Unity Editor, and even better - with other people.

The Unity project can be downloaded from its GitHub repo. The Releases page contains pre-packaged .zip files.

To quickly try a pre-built version of the game, head to this link and either play the WebGL build directly in the browser, or download one of the available desktop versions.

Share the link with friends and colleagues, and have them join you!

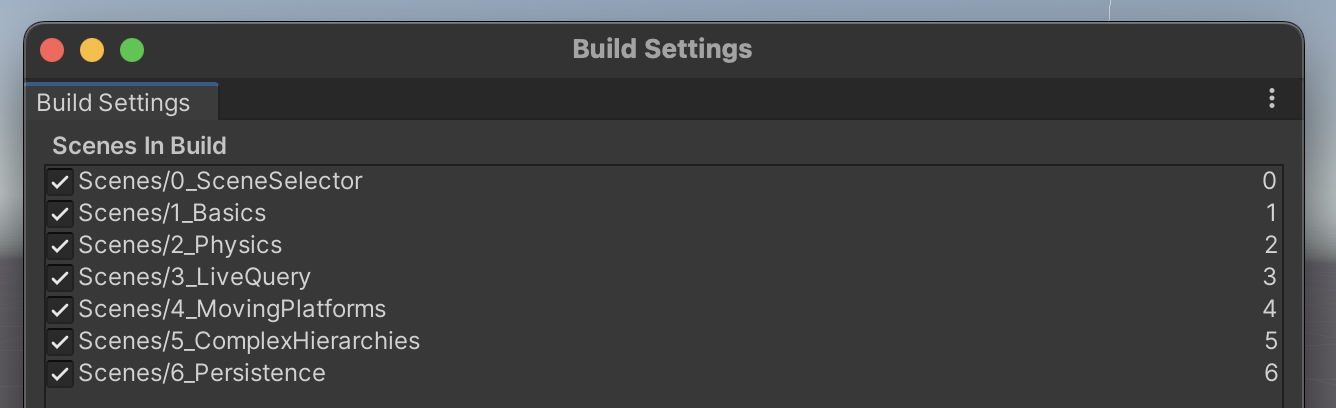

Once you open the project in the Unity Editor, you can build scenes via File > Build Settings, as per usual.

If you want to try all the scenes in one go, keep them all in the build and place SceneSelector as the first one in the list.

If you're working on an individual scene instead, bring that one to the top and deselect the others. The build will be faster.

To be able to connect, you also need to run a local Replication Server, which can be started via coherence > Local Replication Server > Run for Worlds.

You can try running multiple Clients rather than just two, and see how replication works for each of them. You can also have one Client just be the Unity Editor. This allows you to inspect GameObjects while the game runs.

Since you might be building frequently, we recommend making native builds (macOS or Windows) as they are created much faster than WebGL.

You can also upload a build to the cloud and share a link with friends. To do that, follow these steps or watch this quick video to learn how to host builds on the coherence Cloud.

The coherence package comes with several UI samples. The samples can get you connected to the Replication Server in no time, and are really useful for prototyping and learning.

In time, you can also edit the provided Prefabs and scripts however you want, to customise them to fit the style and functionality of your game.

The currently available samples are:

Lobbies Connection dialog

Matchmaking dialog

The difference between Rooms and Worlds is explained on this page: Rooms and Worlds, while Lobbies have somewhat of a different role, in that they are usually used in addition to Rooms in a game flow.

Each sample comes with a Prefab that can be added to your Scene. You can add them via coherence > Explore Samples.

Effectively these do two things for you:

Import the sample in the Samples directory of your project, if it isn't already.

Add the Prefab from the sample to your Scene.

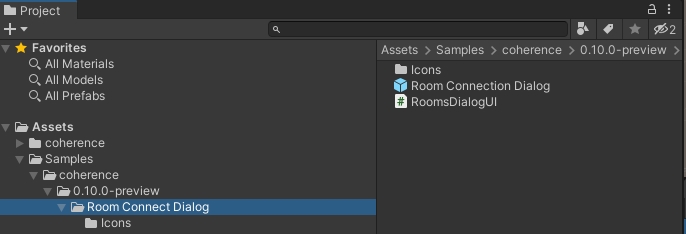

In the example above, that would be Room Connection Dialog.prefab.

You don't need to do anything else for the sample UIs to work (except of course, a Replication Server needs to be running to connect to it!).

My sample UI doesn't work!

If you notice that the samples are non-responsive to input, make sure you have a GameObject with an EventSystem component in the scene.

Also ensure that the mouse is not locked by a script. Is the cursor invisible? You might have a script that's modifying the cursor's lock state. In that case, modify the script or remove it.

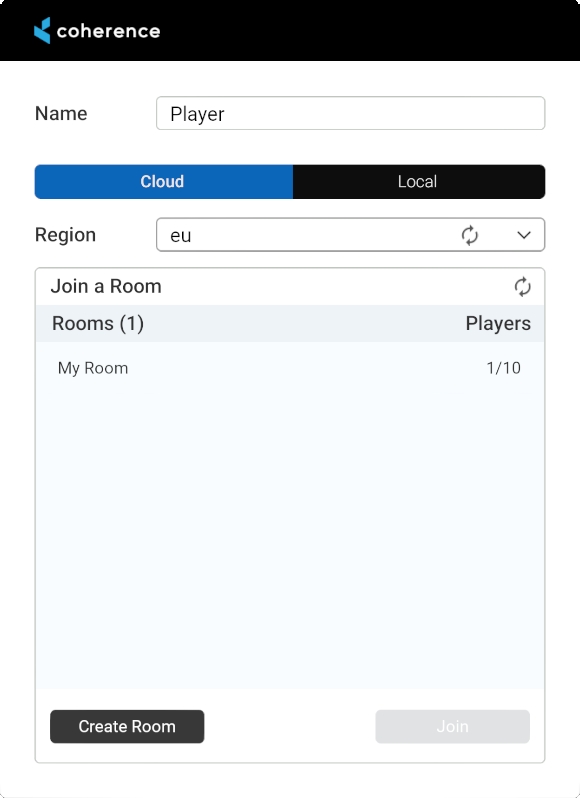

The Rooms Connect Dialog has a few helpful components that are explained below.

At the top of the dialog we have an input field for the player's name.

Next is a toggle between Cloud and Local. You can switch to Local if you want to connect to a Rooms Replication Server that is running on your computer.

Next is a dropdown for region selection. This dropdown is populated when regions are fetched from the coherence cloud. The default selection is the first available region. This is not enabled when you switch from Cloud to Local. This is also only relevant if you deploy your game to several different regions.

Next is a dropdown of available Rooms in the selected region (or in your local server if using the Local mode).

After selecting a Room from the list the Join button can be used to join that Room.

If you know someone has created a room but you don't see it, you can manually refresh the rooms list using the Refresh button.

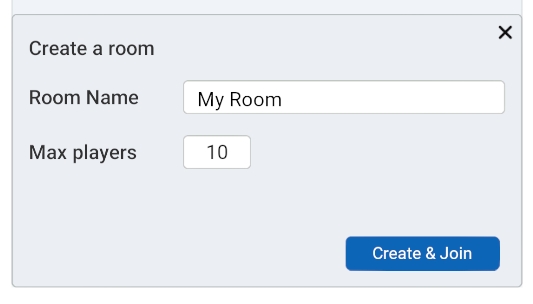

The Create a room section adds a Room to the selected region.

This section contains controls for setting a Room's name and maximum player capacity. Pressing the Create button will create a Room with the specified parameters and immediately add it to the Room Dropdown above. Create and Join will create the Room, and also join it immediately.

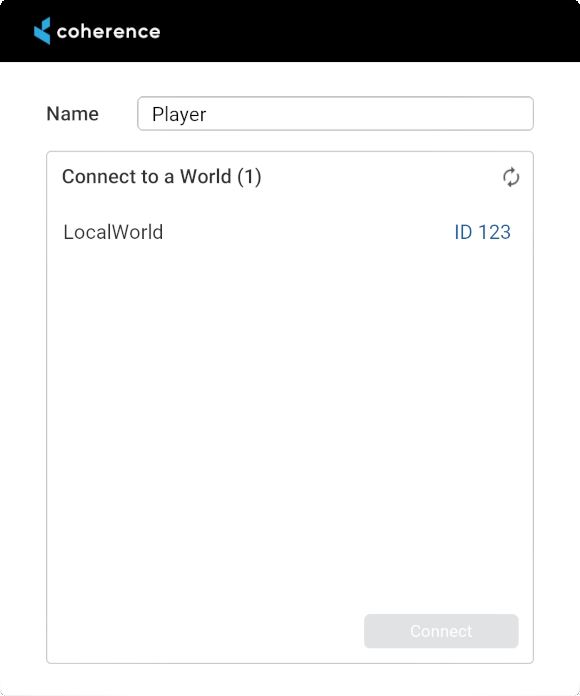

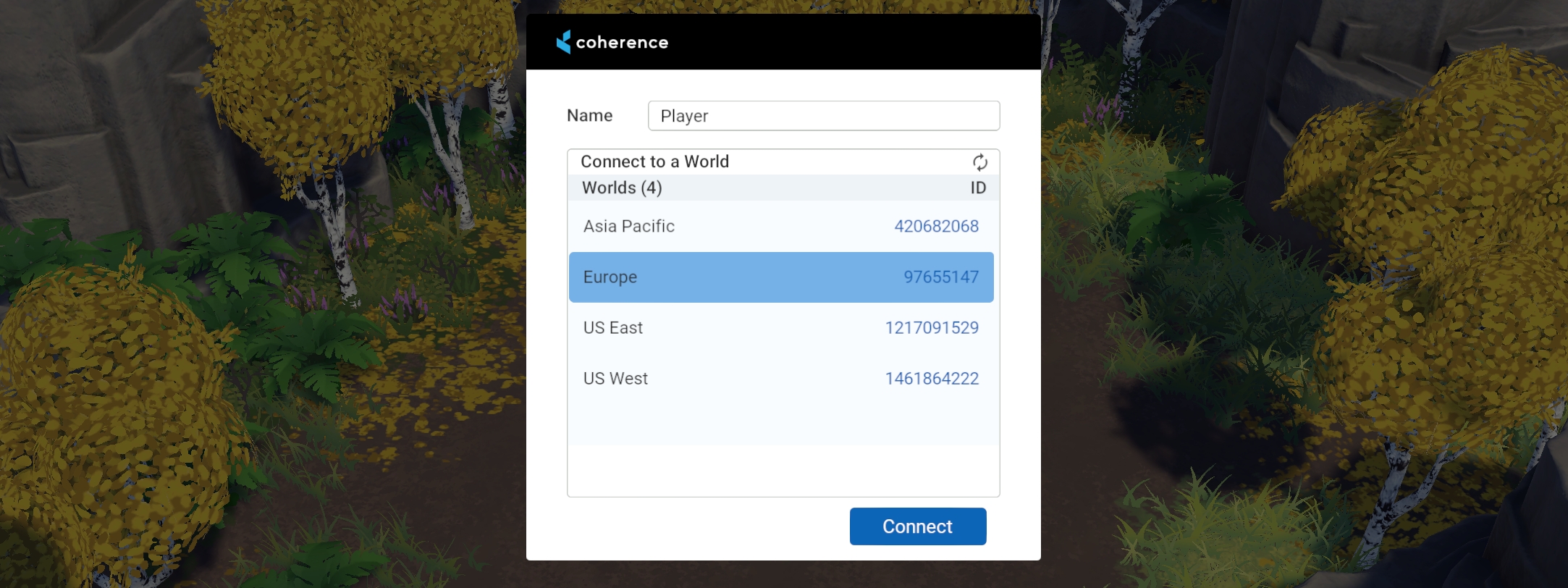

The Worlds Connect Dialog is a good option to start simple. It holds just a dropdown for region selection, an input field for the player's name, and a Connect button.

If you start a local World Replication Server, it will appear as LocalWorld. Similarly, if there are Worlds running in the coherence Cloud, they will be listed here.

Future versions of coherence won't override your changes. If you upgrade to a newer version of coherence and import a new sample, it will be imported in a separate folder named after the coherence version.

If you want the new sample to overwrite the old one, first rename the folder in which the samples are, then import the new version.

We have seen a lot of examples where objects belonging to a Client would disappear with them when they disconnect. We call these objects session-based entities.

But coherence also has a built-in system to make objects survive the disconnection of a Client, and be ready to be adopted by another Client or a Simulator. We call these objects persistent. Persistent objects stay on the Replication Server even if no Client is connected, creating the feeling that the game world is alive beyond an individual player session.

WASD or Left stick: Move character

Hold Shift or Shoulder button left: Run

P or Right shoulder button: Plant a flower (hold to preview placement)

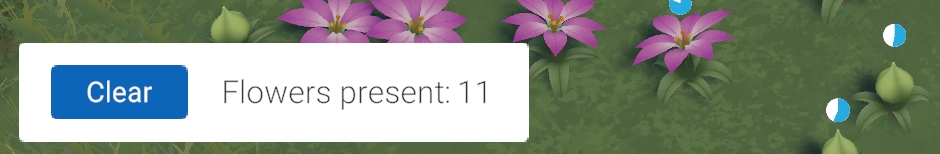

Players can plant flowers in this little valley. Each flower has 3 phases: it starts as a bud, blooms into a full flower, and then withers after some time.

Creating a flower generates a new, persistent network entity. Even if the Client disconnects, the flower will persist on the server. When they reconnect, they will see the flower at its correct stage of growth (this is a little trick ).

Planting too many flowers starts erasing older flowers. A button in the UI allows clearing all flowers (belonging to any player) at any time.

When using the plant action, any connected player instantiates a copy of the Flower Prefab (located in the /Prefabs/Nature folder).

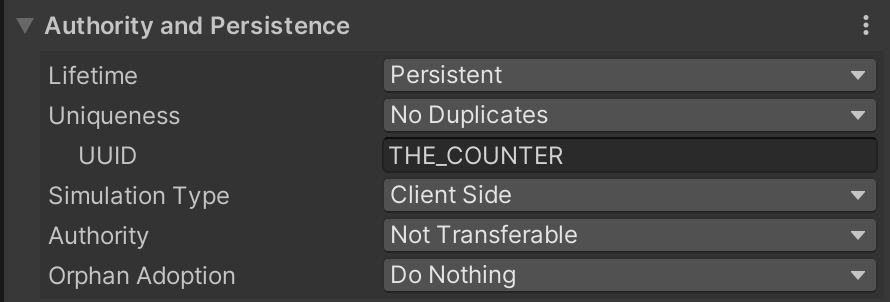

By selecting the Prefab asset, we can see its CoherenceSync component is set up like this:

In particular, notice how the Lifetime property is set to Persistent. This means that when the Client who plants a flower disconnects, the network entity won't be automatically destroyed. Auto-adopt Orphan set to on makes it so the next player who sees the flower instantly adopts it, and keeps simulating its growth.

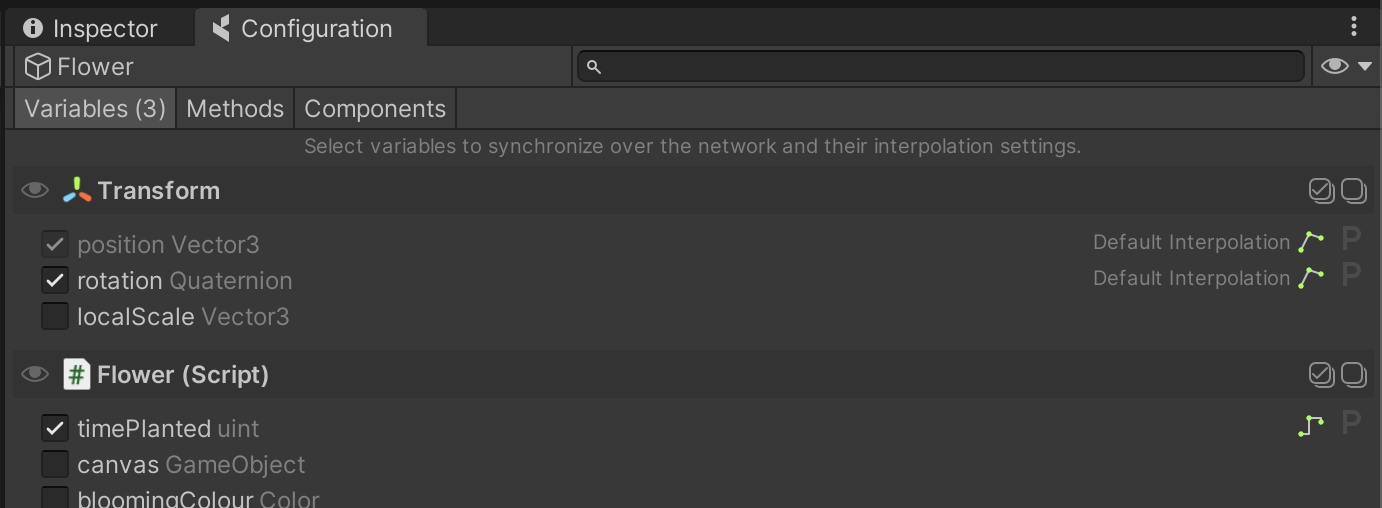

Opening coherence's Configuration window, you will see that we sync position, rotation, and a variable called timePlanted:

Once a flower has spawned, all of its logic runs locally (no coherence involved). An internal timer calculates what phase it should be in by looking at the timePlanted property and doing the math, and then plays the appropriate animations and particles as a result.

To achieve this, the flowers of this scene store the Flower.timePlanted value on the Replication Server. A Replication Server with no connected Clients is dormant, and has a very low cost to run. So when nobody's connected the flowers are not actually simulating, they are just waiting.

When a new Client comes online and this value is synced to them, they immediately fast-forward the phase of the flower to the correct value, and then they start simulating locally as normal.
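The "doing the math" step can be sketched as a pure function of the synced planting time and the current time. This is only an illustration: the phase names follow the description above, but the durations, the class name `FlowerClock`, and the idea that every Client reads a comparable clock are assumptions, not values taken from the sample.

```csharp
using System;

public enum FlowerPhase { Bud, Bloom, Withered }

public static class FlowerClock
{
    // Hypothetical durations, in seconds; the sample's real values may differ.
    public const double BudSeconds = 10.0;
    public const double BloomSeconds = 30.0;

    // Derives the phase purely from the synced planting time and the current time,
    // so a Client that connects late lands directly on the right phase.
    public static FlowerPhase PhaseAt(double timePlanted, double now)
    {
        // Clamp so that small clock differences never produce a negative age.
        double age = Math.Max(0.0, now - timePlanted);

        if (age < BudSeconds) return FlowerPhase.Bud;
        if (age < BudSeconds + BloomSeconds) return FlowerPhase.Bloom;
        return FlowerPhase.Withered;
    }
}
```

Because the phase depends only on one stored value, nothing needs to run while no Client is connected: whoever connects next can recompute the phase from scratch.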

This gives the players the perception that things are still running even when they are not connected.

This setup is not bulletproof, and could be easily cheated if a player comes online with a modified Client, changing the algorithm calculating the flowers' phase.

But for a game in which this calculation is not critical, especially if it doesn't affect other players' experience of the game, this can be a nice setup to cut some costs.

Every Client can, at any time, remove all flowers from the scene by clicking a button in the UI.

It's important to remember that you shouldn't call Destroy() on a network entity over which the Client doesn't have authority. To achieve this, we first request authority on remote flowers and listen for a reply. Once we have obtained it, we destroy them.

Check the code at the end of the Flower script:

Getting updates about every entity in the whole scene is unfeasible for big-world games, like MMOs. For this, coherence has a flexible system for creating areas of interest, and getting updates only about the entities that each Client cares about, using a tool called Live Query.

WASD or Left stick: Move character

Hold Shift or Shoulder button left: Run

Spacebar or Joypad button down: Jump

This scene contains two cubes that represent areas of interest. Every connected Client can only see other players if they are standing inside one of these cubes.

Select one of the two GameObjects named LiveQuery. You will see they have a CoherenceLiveQuery component:

This component defines an area of interest, in this case a 10x10x10 cube (5 is the Extent). This tells the Replication Server that this Client is only interested in network entities that are physically present within this volume.

If a Client has to know about the whole world, it's enough to set the Live Query to Infinite.

In addition, Live Queries can be moved in space. They can be parented to the camera, to the player, or to other moving elements that denote an area of interest - depending on the type of game.

It is also possible, like in this scene, to have more than one Live Query. They will act as additive, requesting updates from entities that are within at least one of the volumes.

Notice that at least one Live Query is needed: a Client with no Live Query in the scene will receive no updates at all.
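The filtering rules described above (a cube defined by a half-size Extent, several queries acting additively, and no updates without a query) can be modelled with a few lines of plain C#. This is a conceptual sketch only: `QueryVolume` and `InterestFilter` are hypothetical names, and the real filtering happens on the Replication Server, not in game code.

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

// Hypothetical stand-in for a cubic Live Query: a center plus a half-size (the Extent).
public readonly struct QueryVolume
{
    public readonly Vector3 Center;
    public readonly float Extent; // float.PositiveInfinity models an "Infinite" query

    public QueryVolume(Vector3 center, float extent)
    {
        Center = center;
        Extent = extent;
    }

    // An Extent of 5 yields a 10x10x10 cube around the center.
    public bool Contains(Vector3 p) =>
        Math.Abs(p.X - Center.X) <= Extent &&
        Math.Abs(p.Y - Center.Y) <= Extent &&
        Math.Abs(p.Z - Center.Z) <= Extent;
}

public static class InterestFilter
{
    // Queries are additive: an entity is of interest if any volume contains it.
    // With no queries at all, nothing is of interest.
    public static bool IsOfInterest(Vector3 entityPosition, IReadOnlyList<QueryVolume> queries)
    {
        foreach (var query in queries)
        {
            if (query.Contains(entityPosition)) return true;
        }
        return false;
    }
}
```

With two volumes centered at (0, 0, 0) and (20, 0, 0), an entity at (10, 0, 0) falls between them and would not be reported, while one at (22, 1, 0) would.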

If you explored previous scenes you might have noticed that GameObjects with a Live Query component were actually there, but in this scene we gave them a special visual representation, just for demo purposes.

Try moving in and out of volumes. You will notice that network-instantiation takes care of destroying the GameObject representing a remote entity that exits a Live Query, and reinstantiates it when it enters one again.

Also, notice that the player belonging to the local Client doesn't disappear. coherence will stop sending updates about this instance to other Clients, but the instance is not destroyed locally, as long as the Client retains authority on it.

If a GameObject can be in a state that needs to be computed somehow, it might not appear correctly in the instant it gets recreated.

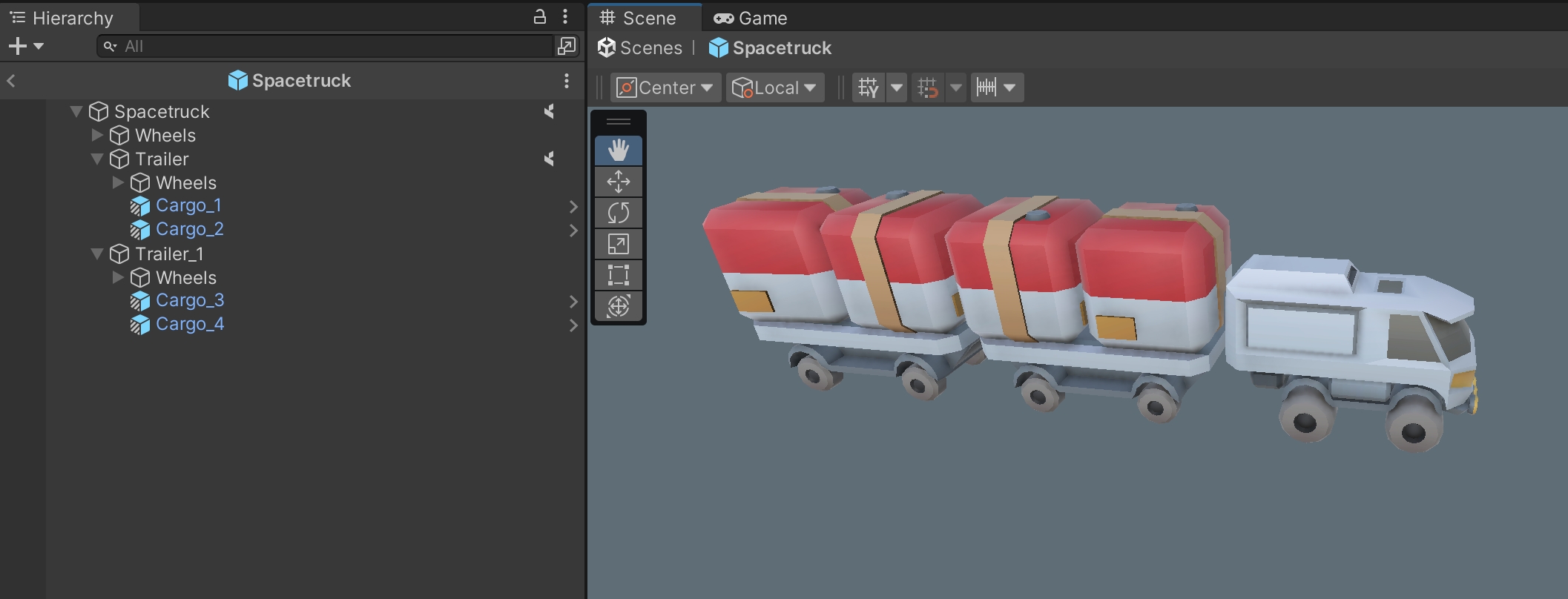

Every now and then it makes sense to parent network entities to each other, for instance when creating vehicles or an elevator. In this sample scene we'll see what the implications of that are, and how coherence uses this to optimize network traffic.

Moving platforms | | |

WASD or Left stick: Move character

Hold Shift or Shoulder button left: Run

Spacebar or Joypad button down: Jump

This wintery setting contains 2 moving platforms running along splines. Players can jump on them and they will receive the platform's movement and rotation, while still being able to move relative to the platform itself.

This scene doesn't require anything special in terms of network setup to work.

Direct parenting of network entities in coherence happens exactly like usual, with a simple transform.SetParent(). The player's Move script is set to recognize the moving platforms when it lands on them, and it just parents itself to it.

As for the platforms, they are just moving themselves as kinematic rigid bodies, following the path of their spline (see the FloatingPlatform script). Their position and rotation are synced on the network, and the first Client to connect assumes authority over them.

Once directly parented, coherence automatically switches to sync the child's position and rotation as local, rather than in world space. This means that when child entities don't move within their parent, no data about them is being sent across the network.

Imagine, for instance, a situation where 3 players are riding one of the platforms and not moving: only the coordinates of the platform are being synced every frame.
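To see why a stationary rider generates no traffic, it helps to look at the conversion between world space and the parent's local space. The sketch below uses `System.Numerics` rather than Unity types and ignores scale (an assumption made to keep it short); `ParentSpace` is a hypothetical helper name.

```csharp
using System.Numerics;

public static class ParentSpace
{
    // Converts a world-space position into the parent's local space
    // (translation and rotation only; the parent is assumed to have unit scale).
    public static Vector3 ToLocal(Vector3 worldPos, Vector3 parentPos, Quaternion parentRot) =>
        Vector3.Transform(worldPos - parentPos, Quaternion.Inverse(parentRot));

    // Converts a local-space position back into world space.
    public static Vector3 ToWorld(Vector3 localPos, Vector3 parentPos, Quaternion parentRot) =>
        parentPos + Vector3.Transform(localPos, parentRot);
}
```

If a rider stands at local position (1, 0, 0) and the platform then moves and rotates, the rider's world position changes, but converting it back with `ToLocal` still gives (1, 0, 0). Since the local value did not change, there is nothing new to send for the rider.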

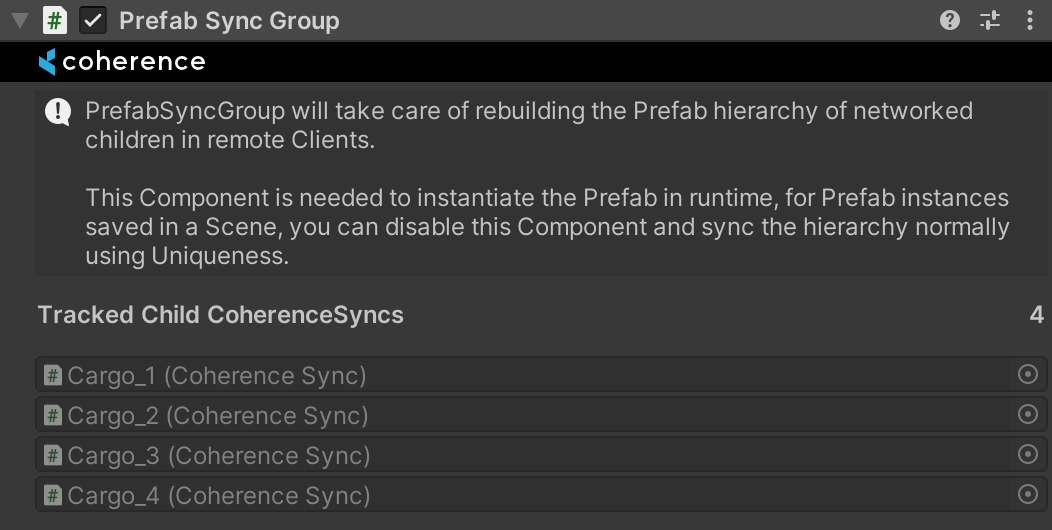

You might have noticed we always mentioned "direct" parenting. One limitation of this simple setup is that the parented network entity has to be a first-level child of the parent one. This doesn't exclude that the parent can have other child GameObjects (and other networked entities!), but networked entities have to be a direct child.

A hierarchy could look like this:

- **Platform**
  - **Player**
    - Character graphics
      - Bones
      - ...
  - Platform's graphics
    - ...

(In bold is the root of each Prefab, which has a CoherenceSync component)

You can even parent multiple network entities to each other. For example, a networked character holding a networked crate, riding a networked elevator, on a networked spaceship. In that case:

- **Spaceship**
  - **Elevator1**
    - Elevator graphics
  - **Elevator2**
    - **Player**
      - **Crate**
      - Character graphics
    - Elevator graphics
  - Spaceship graphics
  - ...

When parenting entities, it is important that the child's position, rotation, and local scale are replicated so that all Clients see the relative state of the child when connected to a parent. If these properties are not replicated on the child, it is possible that different Clients will see different states of the child relative to the parent.

In this sample we look at how to network simple physics simulated directly on the Clients, and the implications of this setup.

If we were making a game that relied on precise physics at play between the players (like a sports match, for instance), we would probably go with a setup where the Clients connect to a Simulator that runs the physics and prevents cheating.

However, that makes running the game much more expensive for the developer, since a Simulator has to be always on.

Physics | | Uniqueness |

WASD or Left stick: Move character

Hold Shift or Shoulder button left: Run

Spacebar or Joypad button down: Jump

E or Joypad button left: Pick up / throw objects

This scene features a few crates that the players can pick up and throw around. Who runs the physics simulation here? You could say that everyone runs their part.

Let's take a closer look at the setup.

Select one of the crates in the scene. You can see that they have normal Box Collider and Rigidbody components. Up until a player is connected, they are being simulated locally. In fact if you press Play, they will fall down and settle.

The crates also have a CoherenceSync component. The first player to connect gets authority over them, and begins simulating the physics for them.

That Client now syncs 5 values over the network, including the most important ones that will drive the crate's motion: Transform.position and Transform.rotation.

On other Clients however (the ones that connect after the first one) these crates will become "remote". Their Rigidbody will become kinematic, so that now their movement is controlled by the authority (i.e. the first Client).

At this point, the first Client to connect is simulating all the crates. However, if we were to leave things like this, interacting with physical objects that are simulated by another Client would be quite unpleasant due to the lag.

To make it better, other Clients steal authority over crates, whenever they either:

Touch/collide with a crate directly

Pick a crate up

In code, this authority switch is a trivial operation, done in a single line. You can find the code in the Grabbable class. Essentially, it boils down to this:

As you can see, it's good practice to ask first if the requesting script already has authority over an object, to avoid wasted work.

If the request succeeds, the instance of the crate on the requesting Client becomes authoritative, and the Client starts simulating its physics. On the other Client (the previous owner) the object becomes remote (and its Rigidbody kinematic), and is now just receiving position and rotation over the network.

Careful! Since an authority request is a network operation, you can't run follow-up code right after having requested it. It's good practice to set a listener to the events that are available on the Coherence Sync component, like this:

This way, as soon as the reply comes back, we can perform the rest of the code.

Also note that while it's totally possible to configure an object so that Clients can just steal authority from each other, we configured the crates here to require an authority request.

When they want authority, Clients have to request it and most importantly, wait for an answer.

We implemented this request / answer mechanism to avoid problems of concurrency, where two players are requesting authority on a crate at the same time, and end up with a broken state because the game code assumes that they both got it.
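The request / answer mechanism and the concurrency problem it avoids can be illustrated with a small model. This is not the Grabbable code or the coherence API: `AuthorityArbiter` is a hypothetical stand-in for whatever arbitrates requests, and the "first request wins" rule is an assumption chosen for the example.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for the arbitration of authority requests on one entity.
public sealed class AuthorityArbiter
{
    private readonly Queue<(string client, Action<bool> reply)> _pending =
        new Queue<(string client, Action<bool> reply)>();

    public string Owner { get; private set; }

    public AuthorityArbiter(string initialOwner)
    {
        Owner = initialOwner;
    }

    // The caller gets no answer until Process() runs, mimicking the network round trip.
    public void Request(string client, Action<bool> reply)
    {
        // Already authoritative: answer right away and skip the round trip.
        if (client == Owner)
        {
            reply(true);
            return;
        }
        _pending.Enqueue((client, reply));
    }

    // Resolves all requests that arrived together: the first is granted, the rest are rejected.
    public void Process()
    {
        bool granted = false;
        while (_pending.Count > 0)
        {
            var (client, reply) = _pending.Dequeue();
            if (!granted)
            {
                Owner = client;
                granted = true;
                reply(true);
            }
            else
            {
                reply(false);
            }
        }
    }
}
```

Follow-up code (picking the crate up, for example) belongs inside the reply callback. If both requesters assumed success immediately after calling `Request`, they would both treat the crate as theirs, which is exactly the broken state described above.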

So who is running the physics, after all? We can now say that it's everyone at the same time, as roles change all the time.

As mentioned before, pressing Tab (or clicking the Joystick) switches to an authority view. It's very interesting to see how crates switch sides when a player interacts with them.

For more on authority, take a look inside the Grabbable class. It has more code regarding authority events, all commented.

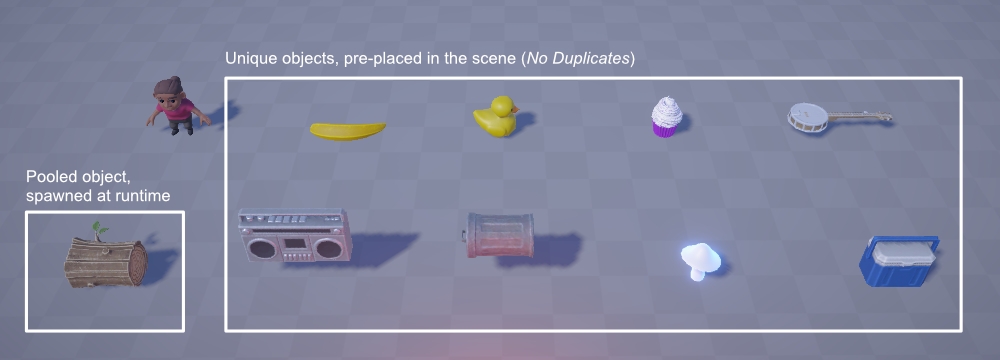

There is one important thing to note in this setup. Since the objects are already in the scene at the start, by default every time a Client connects it would try to sync those instances to the network. This is very similar to what we have seen with character instantiation so far: each Client brings its own copy.

However, in this case this would effectively duplicate the crates, once online. One extra copy for each connected player! We don't want that.

For this reason, the CoherenceSync is configured so that these crates have No Duplicates. This is generally the correct way of configuring networked Prefab instances that have been manually placed in the scene.

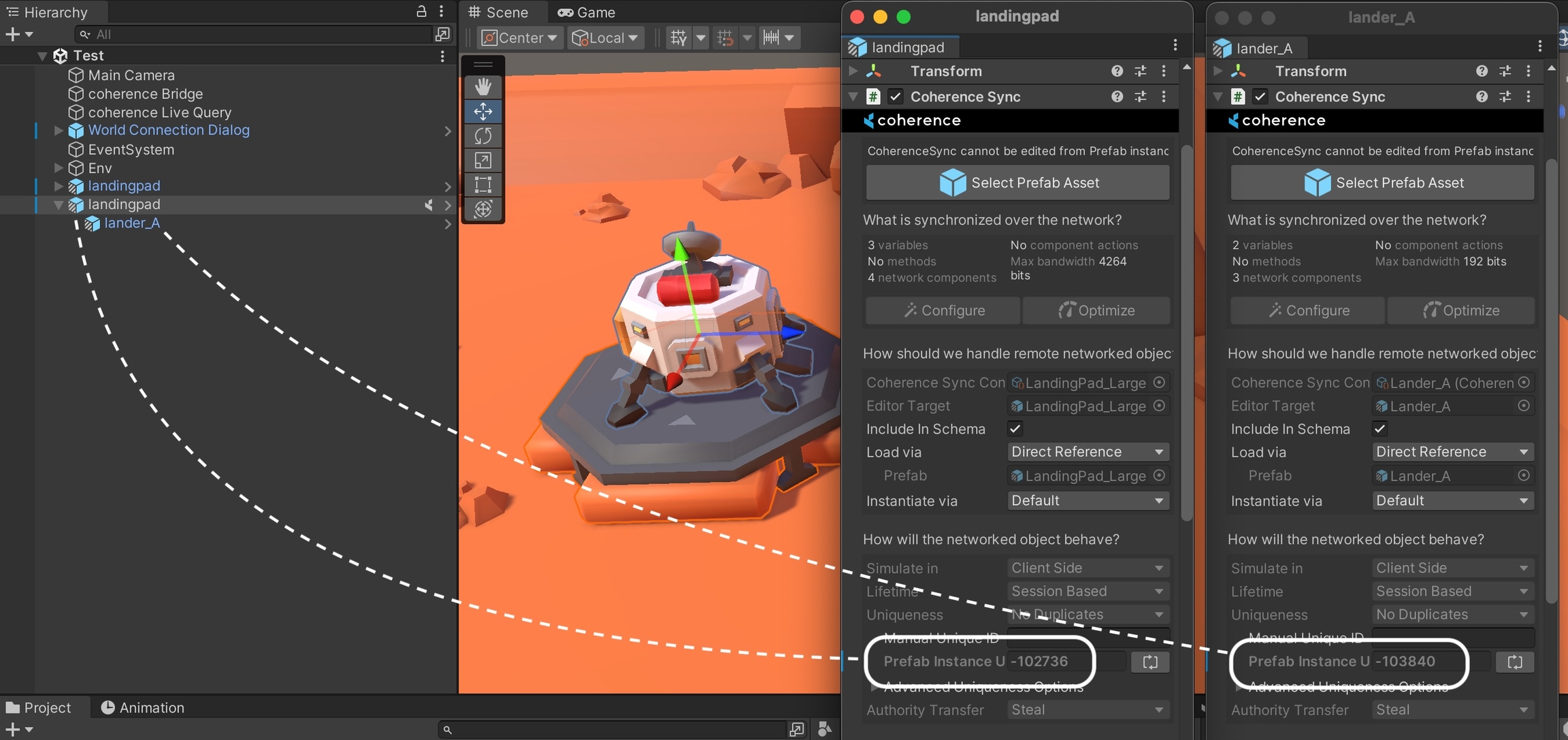

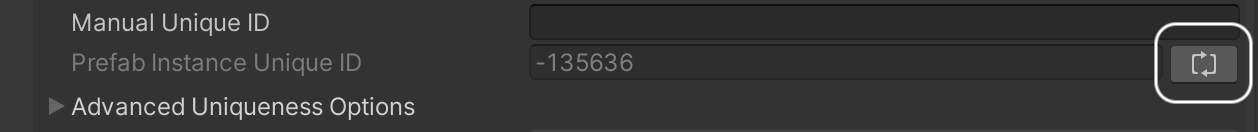

In addition to a unique identifier (the Manual Unique ID), coherence will auto-assign an additional identifier (the Prefab Instance Unique ID) whenever the crate is instantiated in the scene at edit time.

With these parameters in mind, the way the crates behave is as follows:

At the start, none of the entities exist on the Replication Server (yet).

Client A connects. They sync the crates onto the network. Being unique, the Replication Server takes note of their ID.

Client B connects. They try to bring the same crates onto the network, but because the crates are set to No Duplicates and coherence finds there is already a network entity with the same ID, it doesn't create a new network entity. Instead, it recognises each crate as the one on the server, and just makes it non-authoritative for Client B.

If Client A disconnects, the crates are not destroyed because their Lifetime is set to Persistent. They briefly become orphaned (no one has authority on them) but immediately the authority is passed to Client B due to the option Auto-adopt Orphan being on.

If everyone disconnects, the crates remain on the Replication Server as network entities that are orphaned. They keep whatever position/rotation they had, since nobody is simulating them anymore.

At this point, nobody is connected. The Replication Server is not doing any work.

When a new Client reconnects and tries to bring the crates online again, the same thing happens again: the crates in the scene are associated with the orphaned entities and are adopted by the new client, who assumes authority on them.

They will also most probably see the crates snap to the last position/rotation that was stored on the Replication Server, which is synced just before they assume full control over the crates.

At this point, they start simulating their physics locally, like normal.
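The lifecycle above can be condensed into a toy model keyed by unique ID. It is a conceptual sketch, not how the Replication Server is implemented: `EntityRegistry` and its methods are hypothetical, and it assumes every entity is Persistent with Auto-adopt Orphan turned on.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical model of unique, persistent entities tracked by their unique ID.
public sealed class EntityRegistry
{
    // Maps unique ID -> authoritative Client (null means the entity is orphaned).
    private readonly Dictionary<string, string> _authority = new Dictionary<string, string>();

    public int Count => _authority.Count;

    public string OwnerOf(string uniqueId) => _authority[uniqueId];

    // A Client brings a scene object online. Returns true if that Client ends up authoritative.
    public bool Sync(string uniqueId, string client)
    {
        if (!_authority.TryGetValue(uniqueId, out var owner))
        {
            _authority[uniqueId] = client; // first sighting: the entity is created
            return true;
        }
        if (owner == null)
        {
            _authority[uniqueId] = client; // orphaned: adopted by the newcomer
            return true;
        }
        return owner == client;            // already known: no duplicate is created
    }

    // A Client disconnects. Its entities are orphaned, then adopted by a remaining Client, if any.
    public void Disconnect(string client, IReadOnlyList<string> remainingClients)
    {
        var owned = new List<string>();
        foreach (var pair in _authority)
        {
            if (pair.Value == client) owned.Add(pair.Key);
        }

        string adopter = remainingClients.Count > 0 ? remainingClients[0] : null;
        foreach (var id in owned)
        {
            _authority[id] = adopter;
        }
    }
}
```

Walking through the steps: Client A syncs a crate and becomes authoritative, Client B syncs the same ID and stays non-authoritative, and the registry still holds a single entry. When A leaves, B adopts the crate; when B leaves too, the entry remains with no owner until the next Client syncs it.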

Game characters and other networked entities are often made of very deep hierarchies of nested GameObjects, needing to sync specific properties along these chains. In addition, a common use case is to parent a networked object to the tip of a chain of GameObjects.

Let's see how to handle these cases.

A/D or Left/right joypad triggers: Rotate crane base

W/S or Left joystick up/down: Raise/lower crane head

Q/E or Left joystick left/right: Move crane head forward/back

P/Space/Enter or Joypad button left: Pick up and release crate

This scene features a robotic arm that can be controlled by one player at a time. In the scene, a small crate can be picked up and released.

The first player to connect takes control of the arm, and other players can request it via a UI button.

To demonstrate complex hierarchies we choose to sync the movement of a robot arm, made of several GameObjects. In addition to syncing several positions and rotations, we also sync animation variables and other script parameters, present on child objects.

To sync the whole arm we use a coherence feature called deep bindings: that is, bindings that are located not on the root object, but deeper in the transform hierarchy.

Select the RobotArm Prefab asset located in /Prefabs/Characters, and open it for editing. You will immediately notice a host of little coherence icons to the right of several GameObjects in the Hierarchy window:

These icons are telling us that these GameObjects have one or more bindings currently configured (a variable, a method, or a component action).

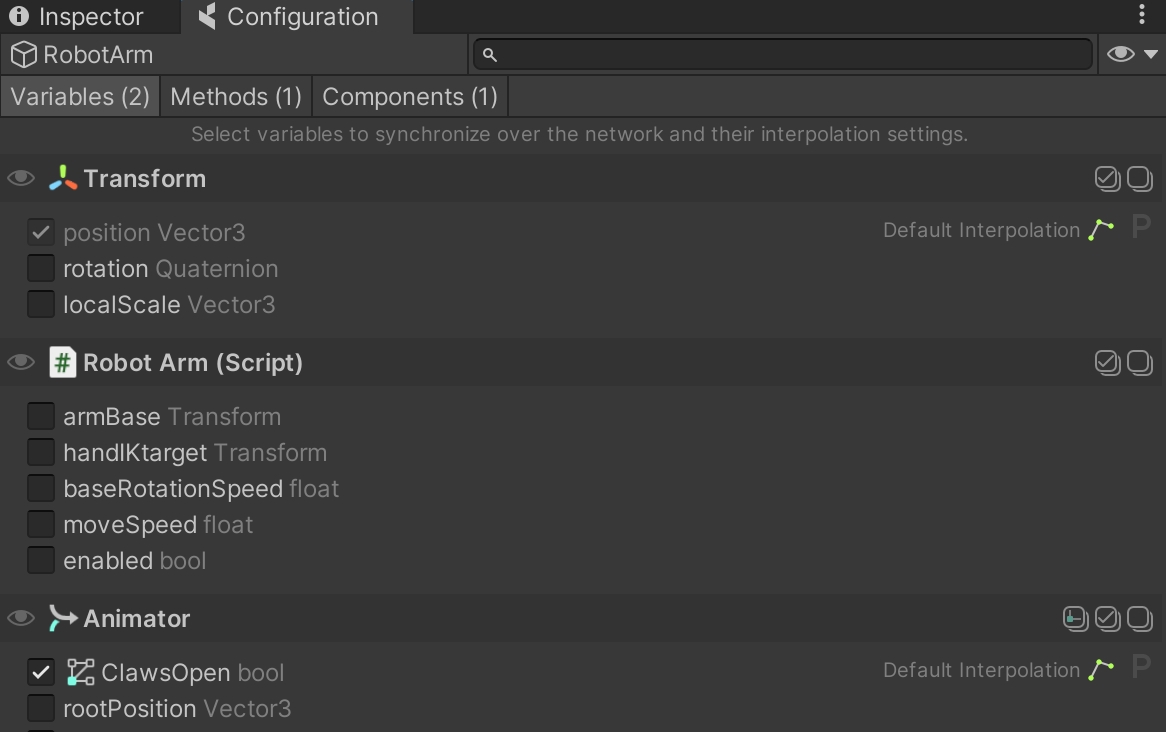

Now open the coherence Configuration window, and click through those objects to discover what's being synced:

In addition to position and rotation, we also choose to sync the animation parameter ClawsOpen, and enable Animator.SetTrigger() as a Network Command. Finally we disable the Robot Arm script when losing authority (to disallow input).

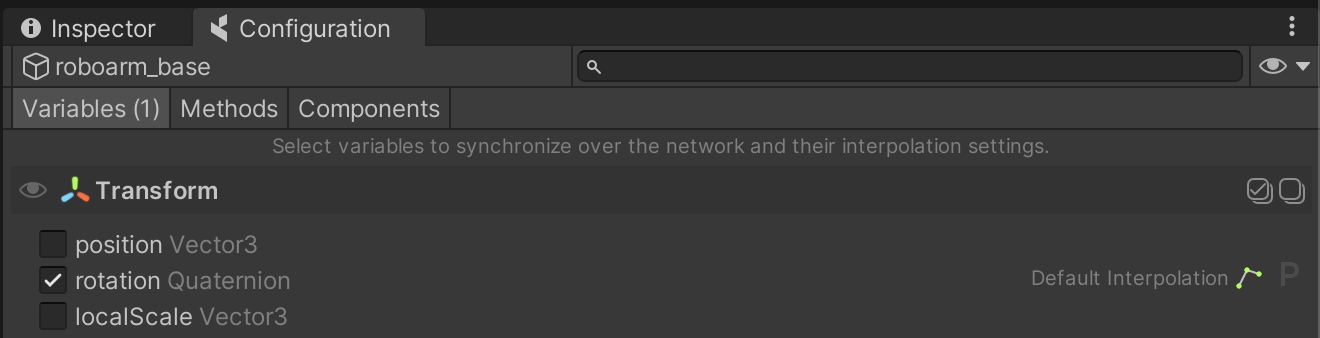

This is the base of the robot arm, for which we only sync rotation:

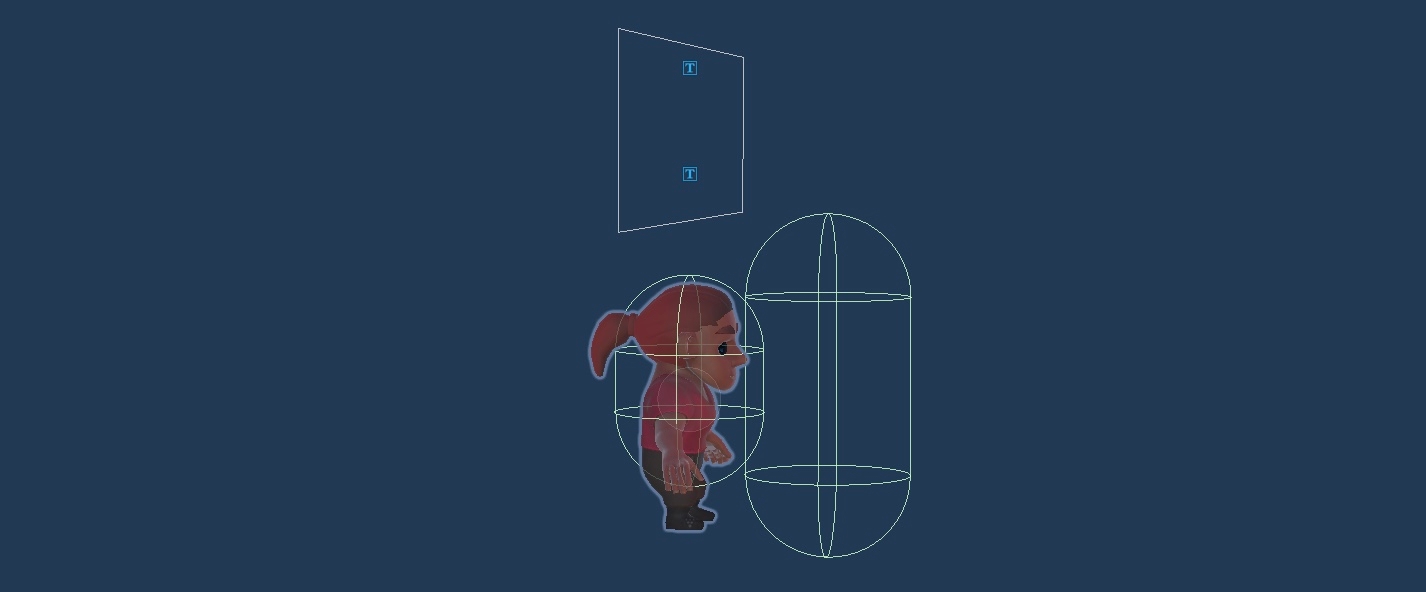

We don't sync the rotation of every object in the chain, since the arm is equipped with an IK solver, which allows us to just sync the target (Two-Bone IK_target) and work out the rotation of the limb (robotarm_bottomarm and robotarm_toparm) on each Client:

By syncing all of these properties, we can have the robotic arm move in sync on all Clients, simply by translating the tip of the IK, and rotating the base of the crane. All of the bindings in this hierarchy are synced through the Coherence Sync component present on the Prefab's root object RobotArm.

As you can see, using deep bindings doesn't require any special setup: they are enabled in exactly the same way as a binding, a Network Command, or a Component action is enabled on the root GameObject.

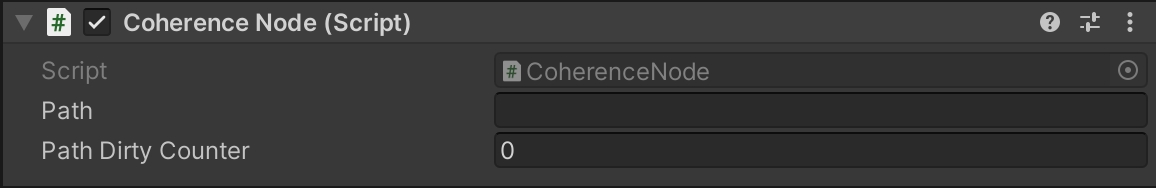

The Path property displays the location in the hierarchy where this object will be inserted. coherence updates it automatically every time the object is parented. Each number represents a child in the root object's hierarchy, counted from 0.
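To make the idea of an index-based path concrete, here is a minimal sketch in plain Python rather than Unity C#. It assumes that each number in the path selects a child one level deeper than the previous one; that reading, the `Node` class, both helper functions, and the simplified hierarchy are all illustrative assumptions, not the coherence implementation.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical stand-in for a Transform: a name plus ordered children."""
    name: str
    children: list[Node] = field(default_factory=list)


def resolve_path(root: Node, path: list[int]) -> Node:
    """Follow 0-based child indices from the root, one level per index."""
    node = root
    for index in path:
        node = node.children[index]
    return node


def path_to(root: Node, target: Node) -> list[int] | None:
    """Depth-first search for the index path from root to target."""
    if root is target:
        return []
    for index, child in enumerate(root.children):
        sub_path = path_to(child, target)
        if sub_path is not None:
            return [index] + sub_path
    return None


# Simplified, made-up hierarchy loosely inspired by the robot arm.
grab_point = Node("GrabPoint")
arm = Node("RobotArm", [
    Node("Base"),
    Node("Arm", [Node("IKTarget", [grab_point])]),
])

path = path_to(arm, grab_point)
print(path)                          # [1, 0, 0]
print(resolve_path(arm, path).name)  # GrabPoint
```

Because the path is made of indices rather than names, every Client can find the same parent as long as the hierarchy has the same shape on each of them.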

Once we have this component set up, parenting the object only requires calling Transform.SetParent() like any usual parenting operation, and setting its Rigidbody component to be kinematic.

When we do this, coherence takes care of propagating the parenting to other Clients, so that the crate becomes a child GameObject on every connected Client.

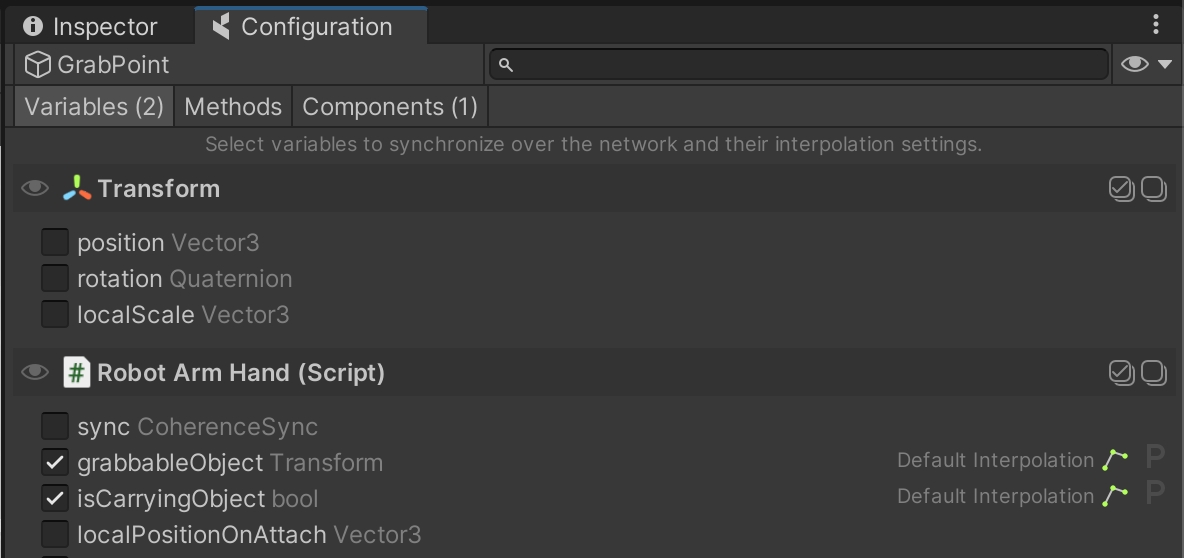

This code is in the RobotArmHand class, a component attached to the tip of our hierarchy chain: GrabPoint. In OnTriggerEnter we detect when the crate is in range, storing a reference to it in a variable of type Transform named grabbableObject.

This reference is set to sync:

When the player presses the key P (or the Left Gamepad face button), the referenced crate is parented to the GrabPoint GameObject.

Note that coherence natively supports syncing references to CoherenceSync and Transform components, and to GameObjects.

Even if the Robot Arm Hand script is disabled on non-authoritative Clients, it references the correct grabbed crate in the grabbableObject variable due to it being synced over the network. So when its authority disconnects, other Clients will already have the correct reference to the crate network entity.

This allows us to gracefully handle a case where, for instance, a Client picks up the crate and disconnects. Because both the crate and the robot arm have Auto-adopt Orphan set to "on", authority is passed onto another Client and they immediately have all the data needed to keep handling the crate.

To move authority between Clients, we can use the UI in the bottom left corner. The button is connected to the Robot Arm Authority script on the ArmAuthoritySwapper GameObject, and it transfers authority on both the robot arm and the crate. This script also takes care of what happens as a result of the transfer, including setting the crate to be kinematic or not.

Is Kinematic is set as follows:

The code is in the RobotArmAuthority class. Detecting whether the crate is currently being held is as simple as checking whether its Transform.parent is null:

Remember you can use Tab/click the Gamepad stick to use the authority visualization mode. Try requesting authority from another Client while in this mode.

Using the same scene as in the , we now take a look at another way to make Clients communicate: Network Commands. Network Commands are commonly referred to as "RPCs" (Remote Procedure Calls) in other networking frameworks. You can think of them as sending messages to objects, instead of syncing the value of a variable.


WASD or Left stick: Move character

Hold Shift or Shoulder button left: Run

Spacebar or Joypad button down: Jump

Q or D-pad up: Wave

Building on top of previous examples, let's now focus on two key player actions. Press Space to jump, or Q to greet other players. For both of these actions to play their animation, we need to send a command over the network to invoke Animator.SetTrigger() on the other Client.

Like before, select the player Prefab located in the /Prefabs/Characters folder, and browse its Hierarchy until you find the child GameObject called Workman.

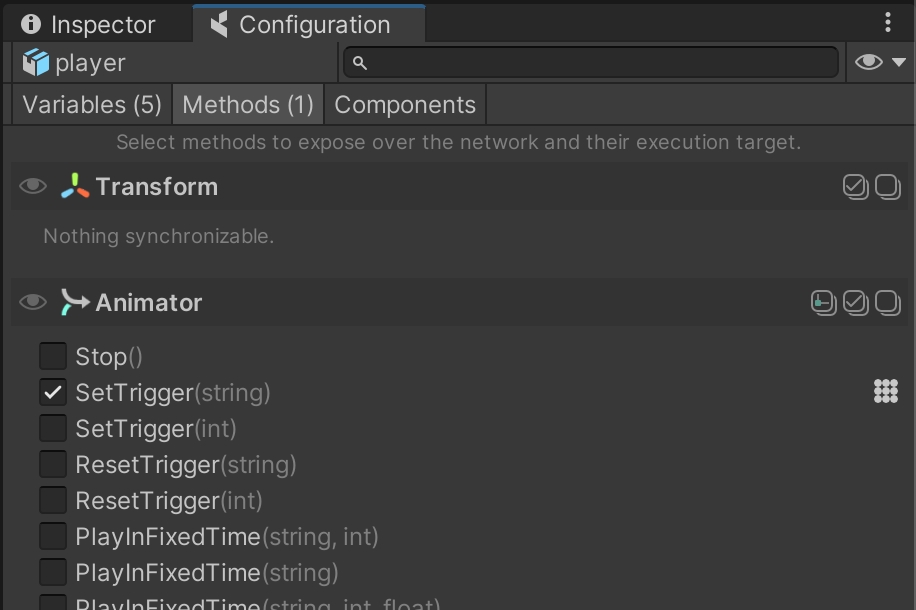

Open the coherence Configure window on the third tab, Methods:

You can see how the method Animator.SetTrigger(string) has been marked as a Network Command. With this done, it is now possible to invoke it over the network using code.

You can find the code doing so in the Wave class (located in /Scripts/Player/Wave.cs):

Analysing this line of code, we can recognize 5 key parts:

First, notice how the command is invoked on a specific CoherenceSync (the sync property).

We want to invoke this command on a component that is an Animator.

We invoke a method called "Animator.SetTrigger".

With MessageTarget.Other, we are asking to send this message only to network entities other than the one that has the CoherenceSync we chose to use.

We pass the string "Wave" as the first parameter of the method to invoke.

Because MessageTarget.Other skips the Client that has authority, you will notice that just before invoking the Network Command, we also call SetTrigger locally in the usual way:

An alternative to this would have been to call CoherenceSync.SendCommand() with MessageTarget.All.
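The difference between the two approaches can be modelled outside Unity. The sketch below is plain Python, not the coherence API: the `MessageTarget` enum here has only the two values discussed above, and `EntityCopy`, `send_command`, and the two `wave_*` helpers are hypothetical names. Delivery is instantaneous in this model, so network latency is not represented.

```python
from enum import Enum, auto


class MessageTarget(Enum):
    OTHER = auto()  # every copy of the entity except the sender's
    ALL = auto()    # every copy, including the sender's


class EntityCopy:
    """One Client's local copy of the same network entity (hypothetical)."""

    def __init__(self, client_name):
        self.client_name = client_name
        self.triggers = []  # triggers fired on this Client's Animator

    def set_trigger(self, name):
        self.triggers.append(name)


def send_command(sender, copies, target, trigger_name):
    """Deliver a set_trigger call to the copies selected by target."""
    for copy in copies:
        if target is MessageTarget.OTHER and copy is sender:
            continue
        copy.set_trigger(trigger_name)


def wave_with_other(sender, copies):
    """Local call first, then a command to everyone else."""
    sender.set_trigger("Wave")
    send_command(sender, copies, MessageTarget.OTHER, "Wave")


def wave_with_all(sender, copies):
    """A single command that also reaches the sender's own copy."""
    send_command(sender, copies, MessageTarget.ALL, "Wave")


for wave in (wave_with_other, wave_with_all):
    copies = [EntityCopy(name) for name in ("A", "B", "C")]
    wave(copies[0], copies)
    print(wave.__name__, [copy.triggers for copy in copies])
```

In both cases every copy ends up with exactly one "Wave" trigger; the choice is about where the local call happens, not about the end result.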

Samples are copied to your assets folder, in Samples/coherence/version_number/. This means you can change and customise the scripts and Prefabs however you like.

The folder to rename is the one that is named after the version number (normally its path would be something like Samples/coherence/1.1.0/ for coherence 1.1.0).

When it gets instantiated, the flower writes the current into the timePlanted variable. This variable never changes after this, and is used to reconstruct the phase in which the flower is in (see ). Similarly, as the flower is not moving, position and rotation are only synced at the time of planting.

coherence supports having an instance of the game active in the cloud, running some logic all the time (we call this a Simulator). However, this can be an expensive setup, so it is worth considering designs that keep the cost of running your game lower.

As we discussed in the , switching authority is a network operation that is asynchronous, so we need to wait for the reply from the player who currently has authority.

Now it's clear why Transform.position cannot be excluded from synchronization, as we saw in . coherence needs to know where network entities are in space at all times, to detect if they fall within a Live Query or not.

For instance, an animation state machine might not be in the correct animation state if it had previously reached that state via a trigger parameter. You would have to ensure that the trigger is called again when the instance gets network-instantiated (via a ) or switch your state machine to use other types of animation parameters, which would be automatically synced as soon as the entity gets reinstantiated.

One important note: this sample describes parenting at runtime. For more information on edit-time parenting, see the page about .

For cases like these, coherence takes care of them automatically. More complex hierarchies require a different handling, and we cover them in .

As we mentioned in the intro, in a simple game where precise physics are not crucial this might be enough, and it will definitely keep the costs of running the game down, since no Simulator has to run in order to make the game playable.

For more information on persistence, there's about it.

One important note: this sample describes deep parenting at runtime. For more information on edit-time deep parenting, see the page about .

As mentioned in the lesson about , parenting a network entity to a GameObject that belongs to a chain requires some setup. To be able to pick up the crate with the crane, we equip it with a CoherenceNode component:

Similarly to the crates in the , we don't just want the crate to automatically become non-kinematic when we have authority on it. We want the crate to stay kinematic when authority changes while it's being held by the arm.

In this example we used Network Commands to trigger a transition in an animation state machine, but they can be used to call any instantaneous behavior that has to be replicated over the network. As an example of this, it is also used in the lesson to change a number in a UI element across all Clients.

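| Crate state | Is Kinematic (authority) | Is Kinematic (non-authority) |
| --- | --- | --- |
| Is being held | true | true |
| Has been released | false | true |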
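The Is Kinematic values listed above can be condensed into a single rule. The sketch below is plain Python, not the RobotArmAuthority code. It assumes that the first value in each row applies to the Client with authority and the second to every other Client, and that "held" means the crate currently has a parent; the function name and its parameters are hypothetical.

```python
def should_be_kinematic(has_authority: bool, is_held: bool) -> bool:
    """Only a released crate on the authoritative Client simulates physics."""
    return is_held or not has_authority


# Print every combination so it can be compared against the values above.
for is_held in (True, False):
    for has_authority in (True, False):
        state = "held" if is_held else "released"
        role = "authority" if has_authority else "non-authority"
        print(state, role, should_be_kinematic(has_authority, is_held))
```

Expressing the rule as one function makes it easy to re-evaluate whenever either input changes, that is, on pickup, on release, and on authority transfer.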

Is a Replication Server running?

Tip: Try running one locally, before trying the coherence Cloud.

Does the running Replication Server have the right schema?

Ensure you have baked the netcode.

For local RS, shut down the Replication Server and launch it again.

For cloud RS, ensure you have uploaded the schema to the cloud, then retry.

Ensure you only have one Replication Server running.

Are you connected to the Replication Server? Check the icon and messages on the CoherenceBridge component.

Do you have at least one LiveQuery in the scene, and is it framing the gameplay?

Tip: You can set it to radius 0 so it encompasses the whole game space.

Authority | Authority transfer | Network Commands

In a networked game, an object's logic is always run by one node on the network, whether it's a Client or a Server (which we call a Simulator in coherence). We say that the node "has authority" on the network entity.

There are cases where it makes sense to transfer authority, as happens in this project with objects that can be picked up. When the player grabs an object, the Client performing the action requests authority over the network entity. Once it gets authority, it starts running the entity's scripts and has full control over it. This is a good approach when only one player can interact with a certain object at a given time.

For more info, check the lesson about transferring authority in the First Steps project.

However, there are cases when we don't want to change who has authority on an entity. In the case of an object that many players can interact with at the same time, it wouldn't make sense to continuously move authority between nodes.

The interaction with such remote entities then needs to happen entirely through Network Commands.

In this project, it is the case of the chairs placed in the scene. The first Client or Simulator to connect will take authority over them, and it will keep it until they disconnect.

When a player wants to sit down on a chair, they inform the authority that they are doing so. The Client holding authority will then set the chair as busy, which prevents other players from sitting on it the next time they try.

However, for the sake of simplicity and to illustrate the point, we intentionally left this interaction a bit flaky. Can you guess why? What could go wrong with this setup?

The action originates in SitAction.cs:

SitAction checks if the isBusy property of the chair is set to true (by the authority, of course). If so, it means someone else is already sitting on the chair. If it is false, we can sit, so SitAction invokes Chair.Occupy().

And further down, the essence of the interaction:

So both when occupying a chair (Occupy()) and when standing up (Free()), the player executing the action invokes the ChangeState method, either directly or as a Network Command, depending on whether they are the one with authority.

So one way or the other, ChangeState gets executed on the authority, who sets the isBusy property to its new value. On the next coherence update, the property will be sent to the other Clients.

The answer: Clients use the isBusy property to check whether they can sit. Two players can approach a chair at the same time and both find isBusy to be false, because the updated value has not yet been synced back to them. Both then inform the authority that they want to sit down on it.

The authority performs no additional checks, so you will see both players successfully sitting on the chair, overlapping each other.

Thankfully, we coded the rest of the interaction so that this doesn't break the game. The chance of this happening is low and so are its consequences, but if you're looking to create a more robust system, it could make sense to implement a check on the authority and have the Client wait for an answer before they sit down.
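The race described above, and the authority-side check suggested as a remedy, can be modelled in a few lines. This is a plain Python sketch, not the Chair.cs or SitAction.cs code: the class layout, the `sitters` list, the `synced_is_busy` field, and `try_sit` are hypothetical, and the checked variant is only one possible way to implement the suggestion.

```python
class Chair:
    """Authoritative chair state plus the is_busy value other Clients see."""

    def __init__(self):
        self.is_busy = False         # value held by the authority
        self.synced_is_busy = False  # value last replicated to the Clients
        self.sitters = []            # players the authority accepted

    def sync(self):
        """Model the next update reaching the other Clients."""
        self.synced_is_busy = self.is_busy

    def change_state_unchecked(self, player):
        """Behaviour described above: the authority trusts the caller."""
        self.is_busy = True
        self.sitters.append(player)
        return True

    def change_state_checked(self, player):
        """Hypothetical fix: the authority validates before accepting."""
        if self.is_busy:
            return False
        self.is_busy = True
        self.sitters.append(player)
        return True


def try_sit(chair, players, handler):
    """All players check the replicated flag before any request lands."""
    willing = [p for p in players if not chair.synced_is_busy]
    # The authority then processes the queued requests one by one.
    results = {p: handler(chair, p) for p in willing}
    chair.sync()
    return results


flaky = Chair()
print(try_sit(flaky, ["A", "B"], Chair.change_state_unchecked), flaky.sitters)

robust = Chair()
print(try_sit(robust, ["A", "B"], Chair.change_state_checked), robust.sitters)
```

With the unchecked handler both players are accepted; with the checked one the second request is refused, which is why the Client would need to wait for the answer before playing the sit animation.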

We do this in other parts of the demo, like when chopping a tree or when picking up an object. Check the following section on chopping trees to explore this similar but more complex use case.

Authority | Authority transfer | Network Commands

We saw in the previous section about sitting on chairs how sometimes it makes sense not to move authority around between Clients. In those cases, Network Commands are the way to interact with a remote object.

Now let's take a look at another case of remote object, where the interactions with it need to be validated by the one holding authority, to avoid nasty cases of concurrency.

In this project, it is the case of the trees that are placed in the scene. The first Client or Simulator to connect will take authority over them, and it will keep it until they disconnect.

When a player wants to chop a tree, they ask the authority to subtract 1 unit of energy. When the energy runs out, it's the authority that spawns a new Log instance.

This centralization, as opposed to passing authority around, allows multiple players to chop the same tree at the same time and prevents many race conditions, because the important action (destroying the tree and spawning the log) is all resolved on the Client with Authority.

Conceptually, we can imagine the event flow to go like this:

(1) Chop action happens on a Client -> (2) Authority is notified, elaborates new state -> (3) Authority sends result to all others -> (4) All other Clients play out animation and effects
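As a rough model of this flow, the following plain Python sketch shows why resolving everything on the authority yields exactly one log, however many players chop at once. It is not the ChoppableTree.cs code: the `TreeAuthority` class, its fields, and the broadcast tuples are hypothetical stand-ins for steps (2) and (3).

```python
class TreeAuthority:
    """The tree as seen by the node that holds authority over it."""

    def __init__(self, energy):
        self.energy = energy   # the only synchronized variable
        self.logs_spawned = 0
        self.broadcasts = []   # results sent to all other Clients (step 3)

    def handle_chop(self, player):
        """Step 2: the authority works out the new state for one request."""
        if self.energy <= 0:
            return  # tree already felled, late requests are ignored
        self.energy -= 1
        if self.energy == 0:
            self.logs_spawned += 1
            self.broadcasts.append(("felled", player))
        else:
            self.broadcasts.append(("chopped", player))


tree = TreeAuthority(energy=3)
# Step 1: two players chop at the same time; requests arrive interleaved.
for player in ["A", "B", "A", "B"]:
    tree.handle_chop(player)

print(tree.energy, tree.logs_spawned)  # 0 1
print(tree.broadcasts)
```

The fourth request arrives after the tree is gone and is simply dropped, so no second log appears.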

You can find this flow in practice in the ChoppableTree.cs script. In this script, only one variable is synchronized, the energy of the tree:

The flow goes like this:

(1) A player presses the button to chop down the tree.